[AINews] SciCode: HumanEval gets a STEM PhD upgrade • Buttondown

Chapters

AI Twitter Recap

AI Discord Recap

OpenRouter (Alex Atallah) Discord

Discord Conversations on AI Development

Eureka Labs, Mistral Releases, and Educational Platforms

Issues and Discussions in Modular (Mojo 🔥)

Discussion on CUDA Modes

Jobs in CUDA Mode

Perplexity AI Pro Features and New Discoveries

Research Updates on Training Models, Extension Recommendations, and Data Sampling Strategies

Latent Space AI-in-Action Club Messages

Impact of Heat Wave, Super White Paint, Hackathon Event, and Code Model Discussions

OpenInterpreter Chat

Mozilla AI and tinygrad Updates

AI Twitter Recap


AI Model Developments

- Anthropic API updates: link noted that Anthropic doubled the maximum output token limit for Claude 3.5 Sonnet in the Anthropic API from 4096 to 8192; to enable it, add the header 'anthropic-beta': 'max-tokens-3-5-sonnet-2024-07-15' to API calls.

- Effective Claude Sonnet 3.5 Coding System Prompt: link shared an effective Claude Sonnet 3.5 Coding System Prompt with explanations of the guided chain-of-thought steps: Code Review, Planning, Output Security Review.

- Q-GaLore enables training 7B models on 16GB GPUs: link noted that Q-GaLore combines low-precision training with low-rank gradients and lazy layer-wise subspace exploration, making it possible to train LLaMA-7B from scratch on a single 16GB NVIDIA RTX 4060 Ti, though training is mostly slower.

- Mosaic compiler generates efficient H100 code: link highlighted that the Mosaic compiler, originally for TPU, can generate very efficient H100 code, showing convergence of AI accelerators.

- Dolphin 2.9.3-Yi-1.5-34B-32k-GGUF model on Hugging Face: link gave kudos to @bartowski1182 and @cognitivecompai for the remarkable Yi fine-tune model on Hugging Face with over 111k downloads last month.
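A minimal sketch of a request that opts into the longer Claude 3.5 Sonnet output limit. It assumes the standard Messages API endpoint, the `anthropic-version: 2023-06-01` header, and the model ID `claude-3-5-sonnet-20240620`; the helper name and model ID are assumptions, not taken from the linked post. The function only builds the URL, headers, and JSON body; nothing is sent.

```python
import json

# Anthropic Messages API endpoint (assumed standard endpoint).
ANTHROPIC_URL = "https://api.anthropic.com/v1/messages"

def build_long_output_request(api_key: str, prompt: str,
                              model: str = "claude-3-5-sonnet-20240620",
                              max_tokens: int = 8192):
    """Return (url, headers, body) for a Messages API call that opts into
    the 8192-token output beta. This helper is hypothetical and sends nothing."""
    headers = {
        "x-api-key": api_key,
        "anthropic-version": "2023-06-01",
        # Beta header quoted in the recap; raises the output cap to 8192 tokens.
        "anthropic-beta": "max-tokens-3-5-sonnet-2024-07-15",
        "content-type": "application/json",
    }
    body = json.dumps({
        "model": model,            # assumed model ID for Claude 3.5 Sonnet
        "max_tokens": max_tokens,  # values above 4096 need the beta header
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return ANTHROPIC_URL, headers, body
```

To actually send it, pass the three values to `urllib.request.Request(url, data=body, headers=headers, method="POST")` and open it with `urllib.request.urlopen`.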
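To make "low-rank gradients" concrete, here is a simplified numpy sketch of GaLore-style gradient projection, the idea Q-GaLore builds on: a gradient G of shape (m, n) is projected onto its top-r left singular vectors P, the optimizer works on the small matrix PᵀG, and the update is mapped back with P. The subspace is refreshed only every `interval` steps, a fixed-interval stand-in for Q-GaLore's adaptive, layer-wise lazy updates; Q-GaLore's low-precision storage is not shown. Class and parameter names are hypothetical.

```python
import numpy as np

class LowRankProjector:
    """GaLore-style gradient projector for one weight matrix (simplified sketch)."""

    def __init__(self, rank: int, interval: int = 200):
        self.rank = rank            # r: size of the subspace
        self.interval = interval    # refresh the subspace every `interval` steps
        self.P = None               # (m, r) orthonormal basis, set on first use
        self.step = 0

    def project(self, grad: np.ndarray) -> np.ndarray:
        """Map a full (m, n) gradient to a compact (r, n) one."""
        if self.P is None or self.step % self.interval == 0:
            # Top-r left singular vectors of the current gradient.
            U, _, _ = np.linalg.svd(grad, full_matrices=False)
            self.P = U[:, : self.rank]
        self.step += 1
        return self.P.T @ grad

    def project_back(self, low_rank_update: np.ndarray) -> np.ndarray:
        """Map an (r, n) optimizer update back to the full (m, n) weight shape."""
        return self.P @ low_rank_update

# Toy SGD step: the optimizer only ever sees the (r, n) gradient.
rng = np.random.default_rng(0)
W = rng.standard_normal((32, 16))
proj = LowRankProjector(rank=4, interval=50)
G = rng.standard_normal(W.shape)
W -= 0.01 * proj.project_back(proj.project(G))
```

Because optimizer state (for example Adam moments) would live in the (r, n) space, its memory scales with r rather than m, which is where GaLore-style methods save memory.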


AI Model Performance and Benchmarking

- Llama 3 model performance: link compared ChatGPT (free) with Gemma 2 9B running via MLX LM on an M2 Ultra, showing comparable performance. link noted Llama 3 scoring 90% zero-shot on the MATH dataset.

- Synthetic data limitations: link argued synthetic data is dumb and unlikely to result in better models, questioning the realism of synthetic instructions. link countered that synthetic data takes many forms and sweeping claims are unwise.

- Evaluating LLMs with LLMs: link highlighted the power of using LLMs to generate inputs and evaluate outputs of other LLMs, as in AlpacaEval, while cautioning about over-reliance on automatic evals.

- LLM-as-a-judge techniques: [link](https://twitter.com/cwolferesearch/status/16129499230104
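A minimal sketch of an LLM-judged win rate against reference outputs, in the spirit of AlpacaEval but not its actual implementation. The `judge` callable is a hypothetical stand-in for a call to a judge model. Each pair is judged in both orders, one common way to reduce position bias, and ties or split verdicts count as half a win.

```python
from typing import Callable, Sequence

# Hypothetical judge interface: judge(prompt, first, second) -> "first" | "second" | "tie"
Judge = Callable[[str, str, str], str]

def pairwise_win_rate(prompts: Sequence[str], model_outs: Sequence[str],
                      ref_outs: Sequence[str], judge: Judge) -> float:
    """Fraction of comparisons the model wins against the reference outputs."""
    score = 0.0
    for prompt, m, r in zip(prompts, model_outs, ref_outs):
        a = judge(prompt, m, r)  # model output shown first
        b = judge(prompt, r, m)  # model output shown second
        wins = (a == "first") + (b == "second")
        ties = (a == "tie") + (b == "tie")
        # Two verdicts per pair; a split verdict averages to 0.5.
        score += (wins + 0.5 * ties) / 2
    return score / len(prompts)
```

A judge that always prefers whichever answer is shown first scores every pair at exactly 0.5, so the order swap neutralizes pure position bias instead of rewarding it.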

AI Discord Recap

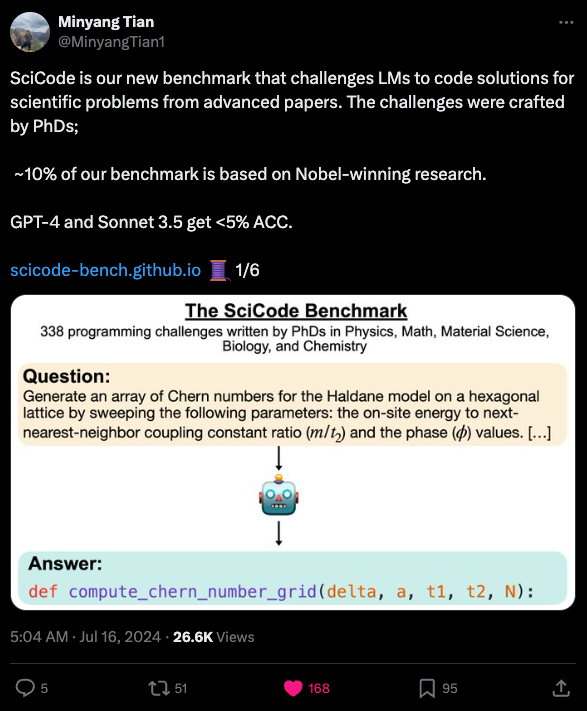

The AI Discord Recap section collects updates and discussions from various AI-related Discord channels. Highlights include Mistral AI's new models (the coding model Codestral Mamba and Mathstral), compact LLMs like SmolLM, and efficiency advances like Q-Sparse. Educational platforms like Eureka Labs and new benchmarks like SciCode are also discussed. The section further covers achievements such as NuminaMath and the BigVGAN v2 neural vocoder, interface upgrades from Hugging Face, and Stable Diffusion techniques for anime art. Discussions range from hardware performance comparisons to error-handling methods across different AI models.

OpenRouter (Alex Atallah) Discord

Generous Gesture by OpenRouter:

OpenRouter has graciously provided the community with free access to the Qwen 2 7B Instruct model, enhancing the arsenal of AI tools for eager engineers.

To tap into this offering, users can visit OpenRouter and engage with the model without a subscription.
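For API access rather than the web UI, OpenRouter exposes an OpenAI-style chat completions endpoint. A minimal sketch that builds such a request, assuming the endpoint `https://openrouter.ai/api/v1/chat/completions` and the model slug `qwen/qwen-2-7b-instruct:free` (an assumption; check OpenRouter's model list for the exact ID). API calls typically still require an OpenRouter API key, even for free models. The helper name is hypothetical, and nothing is sent.

```python
import json

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"
# Assumed slug for the free Qwen 2 7B Instruct variant.
FREE_QWEN_MODEL = "qwen/qwen-2-7b-instruct:free"

def build_openrouter_request(api_key: str, prompt: str, model: str = FREE_QWEN_MODEL):
    """Return (url, headers, body) for an OpenAI-style chat completion call."""
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return OPENROUTER_URL, headers, body
```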

The Gemini Debate: Free Tier Frustrations:

Views diverged on Google's Gemini 1.0, available through its free tier, with some members arguing strongly that it falls short of OpenAI's GPT-4o.

One member pointed to the overlooked promise of Gemini 1.5 Pro, citing its creative strengths despite its coding quirks.

OpenRouter Oscillation: Connectivity Woes:

OpenRouter users faced erratic disruptions accessing the site and its API, sparking a series of concerns within the community.

Official statements attributed the sporadic outages to transient routing detours and potential issues with third-party services like Cloudflare.

Longing for Longer Contexts in Llama 3:

Engineers lamented the 8k context window of Llama 3-70B Instruct and asked about alternatives with longer context.

Suggested models with longer context windows included Euryale and Magnum-72B, though members raised concerns about their consistency and cost.

Necessities and Nuances of OpenRouter Access:

Users clarified how model access works on OpenRouter: not all models are free, and some require a paid agreement.

OpenRouter does offer a selection of APIs and models free of charge, while hosting certain enterprise-grade models requires specific business contracts.

Discord Conversations on AI Development

In the Unsloth AI Discord, discussions covered the shutdown of an NVIDIA project, challenges in fine-tuning large models, Mistral AI's release of Codestral Mamba and Mathstral, and the introduction of Unsloth Pro with multi-GPU support. Other channels covered the release of Apple's DCLM-Baseline-7B language model, debates over choosing an RTX 4090 versus multiple 3060s for AI workloads, and the launch of O1 Light hardware preorders. The community actively shares insights and opinions on emerging AI technologies.

Eureka Labs, Mistral Releases, and Educational Platforms

Eureka Labs is building AI-native educational platforms and courses. Its first product, LLM101n, is an undergraduate-level course on training your own AI. Mistral released Mamba-Codestral-7B-v0.1 for code tasks and Mathstral-7B-v0.1 for mathematical and scientific tasks; the Mamba-based coding model is not supported by llama.cpp. Unsloth AI discussions covered mimicking pretraining, fine-tuning LLMs, RunPod training issues, multi-GPU support, and exporting models, along with LLaMA-405B, Q-Sparse, ColPali, AgentInstruct, and Adam-mini. Modular's discussions covered FlatBuffers vs. Protobuf, the color of the AMD logo, Mojo-related GitHub search issues, the open-source status of the MAX SDK, and YouTube links to Mojo talks.

Issues and Discussions in Modular (Mojo 🔥)

Users in the Modular (Mojo 🔥) community raised concerns and discussed various topics including changes in partnership details with NVIDIA, issues faced during MAX Graph API Tutorial, problems with MAX Tensor and Graph imports, confusion with MAX installation and export paths, and requests for more reporting features in MAX. These discussions highlighted the importance of accurate imports, proper kernel settings, installation reliability, and detailed metrics for decision-making. Additionally, links to relevant resources like MAX Graph API tutorial and Forge Tools were shared.

Discussion on CUDA Modes

Several discussions in the CUDA MODE channels covered GPU performance, PyTorch profiler export times, and custom kernels. One member was concerned about slow GPU performance on a laptop; another advised against running training runs of any reasonable size on a laptop. A member asked whether it is normal for the PyTorch profiler to take 30 minutes to export a trace; replies suggested that capturing a lot of information, or enabling the profile_memory option, could lengthen export times. Another discussion highlighted nvfuser's custom fusion kernels, noting the project's usefulness despite the concerns about long export times.

Finally, the 'cool-links' channel introduced SCALE by Spectral Compute, a GPGPU programming toolkit that compiles CUDA applications natively for AMD GPUs without modification. Support for more GPU vendors and CUDA APIs is in development, and tutorials and examples are available to help users get started.
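One way to keep profiler exports manageable is to record fewer steps and fewer event types. A minimal sketch using `torch.profiler` on a toy CPU workload (the model, step counts, and helper name are illustrative assumptions): a `schedule` limits recording to two steps, and `profile_memory`, `record_shapes`, and `with_stack` are switched off.

```python
import os
import tempfile

import torch
from torch.profiler import ProfilerActivity, profile, schedule

def profile_lean(trace_path: str, steps: int = 6) -> None:
    """Profile a toy workload while keeping the exported trace small."""
    model = torch.nn.Linear(256, 256)
    x = torch.randn(64, 256)

    with profile(
        activities=[ProfilerActivity.CPU],
        # Skip 1 step, warm up for 1, then record only 2 steps, once.
        schedule=schedule(wait=1, warmup=1, active=2, repeat=1),
        record_shapes=False,
        profile_memory=False,  # memory events can add a lot to the trace
        with_stack=False,
        # Called when the active window ends; writes a Chrome-format JSON trace.
        on_trace_ready=lambda p: p.export_chrome_trace(trace_path),
    ) as prof:
        for _ in range(steps):
            model(x).sum().backward()
            prof.step()  # advance the profiler's schedule
```

On a GPU, add `ProfilerActivity.CUDA` to `activities`; turning `profile_memory=True` back on makes most sense when memory behavior is what you are investigating.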

Jobs in CUDA Mode

CUDA MODE ▷ #jobs (9 messages🔥):

- Suno hiring ML engineers for real-time audio models: Suno is looking for ML engineers experienced in training and inference for real-time audio streaming to work on large models.

- Suno looking for torch.compile and Triton enthusiasts: Suno seeks expertise in torch.compile and Triton for its projects and encourages CUTLASS experts to apply.

- Suno offering ML internship roles: Suno is open to hiring interns for machine learning engineering roles.


Perplexity AI Pro Features and New Discoveries

This section covers new features in Perplexity AI Pro, including image upload and smarter AI capabilities. It also highlights new discoveries, such as an accessible cave on the Moon. The cave is large and deep, and could offer protection from harsh lunar surface conditions as well as stable temperatures, which could make it valuable for future lunar exploration and habitation.

Research Updates on Training Models, Extension Recommendations, and Data Sampling Strategies

- Insights on Training Models: Members highlighted two ways to improve model performance: classifying and filtering training samples, and using execution feedback for code.

- FLAMe Outperforms GPT-4: DeepMind's FLAMe model, trained on human evaluations, surpasses GPT-4 and GPT-4o on RewardBench, though the model remains closed.

- Bypass Paywalls Extension: Users recommend a bypass-paywalls extension for reliable article access.

- Computational Trends Highlighted: An arXiv paper examines training models on very large token budgets and reports settling on 7T tokens as the more effective choice.

- Debates on Data Sampling Strategies: Members discuss various data sampling strategies, focusing on multiple responses vs. best/worst pairs.
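Execution feedback for code can be as simple as running each generated sample against tests and discarding the failures. A minimal sketch, assuming samples are Python source strings and tests are assert statements (both hypothetical inputs); `exec` provides no isolation, so a real pipeline would run untrusted code in a sandboxed subprocess with a timeout.

```python
from typing import Iterable, List

def passes_tests(candidate_src: str, test_src: str) -> bool:
    """Run a candidate solution plus its assert-based tests in a fresh namespace.
    NOTE: exec() is not a sandbox; only use it on trusted toy inputs."""
    namespace: dict = {}
    try:
        exec(candidate_src, namespace)  # define the candidate's functions
        exec(test_src, namespace)       # run the asserts against them
    except Exception:                   # syntax errors, failed asserts, crashes
        return False
    return True

def filter_by_execution(candidates: Iterable[str], test_src: str) -> List[str]:
    """Keep only generated samples whose code passes the tests."""
    return [c for c in candidates if passes_tests(c, test_src)]
```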
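Assuming the sampling debate concerns preference data (for example DPO-style training, which the recap does not state explicitly), the two strategies differ in how many (chosen, rejected) pairs each prompt yields. A minimal sketch with hypothetical per-response scores from a reward model or judge:

```python
from itertools import combinations
from typing import List, Sequence, Tuple

Pair = Tuple[str, str]  # (chosen, rejected)

def best_worst_pair(responses: Sequence[str], scores: Sequence[float]) -> List[Pair]:
    """One pair per prompt: the highest-scored vs. the lowest-scored response."""
    ranked = sorted(zip(scores, responses), key=lambda t: t[0])
    return [(ranked[-1][1], ranked[0][1])]

def all_pairs(responses: Sequence[str], scores: Sequence[float]) -> List[Pair]:
    """Every pair of responses with a strict score gap; tied pairs are skipped."""
    pairs: List[Pair] = []
    for (s1, r1), (s2, r2) in combinations(zip(scores, responses), 2):
        if s1 > s2:
            pairs.append((r1, r2))
        elif s2 > s1:
            pairs.append((r2, r1))
    return pairs
```

With k responses per prompt, the best/worst strategy yields one high-contrast pair, while the all-pairs strategy yields up to k(k-1)/2 pairs, some with small score gaps.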

Latent Space AI-in-Action Club Messages

This section covers building state-machine-powered LLM agents with XState, with a comparison against LangGraph for building LLM agents coming soon. The approach is a work in progress, with plans to add more examples comparing LangGraph and XState, and a GitHub link for creating state-machine-powered LLM agents was shared.
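The core idea of a state-machine-powered agent is that the LLM only chooses among events the current state allows, and the machine rejects anything else. A minimal plain-Python sketch of that idea; it is not XState or LangGraph code, the state and event names are hypothetical, and `decide` stands in for an LLM call.

```python
from typing import Callable, Dict, List, Tuple

# Allowed transitions: (state, event) -> next state. Anything else is rejected.
TRANSITIONS: Dict[Tuple[str, str], str] = {
    ("idle", "USER_MESSAGE"): "thinking",
    ("thinking", "NEEDS_TOOL"): "calling_tool",
    ("thinking", "FINAL_ANSWER"): "done",
    ("calling_tool", "TOOL_RESULT"): "thinking",
}

def run_agent(decide: Callable[[str, list], str], max_steps: int = 10) -> List[tuple]:
    """Drive the agent through the machine. decide(state, history) returns
    the next event name, as an LLM would when choosing an action."""
    state, history = "idle", []
    event = "USER_MESSAGE"
    for _ in range(max_steps):
        nxt = TRANSITIONS.get((state, event))
        if nxt is None:
            raise ValueError(f"illegal event {event!r} in state {state!r}")
        history.append((state, event, nxt))
        state = nxt
        if state == "done":
            break
        event = decide(state, history)
    return history
```

Keeping the transition table explicit makes illegal moves (such as answering while a tool call is still pending) fail loudly instead of silently derailing the agent.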

Impact of Heat Wave, Super White Paint, Hackathon Event, and Code Model Discussions

Members debated the impact of the current heat wave and discussed painting roofs white to mitigate urban heat islands, sharing information about a super white paint that reflects 98% of sunlight. An upcoming hackathon at Crusoe's SF office focused on FP8 improvements was also announced. Members compared DeepSeek Coder models with Mistral AI's new models, highlighting issues and preferences.

OpenInterpreter Chat

A member described challenges integrating Open Interpreter with Ray-Ban Stories because Meta provides no SDK. The community discussed potential barriers, such as glued components, and wished for transparent models that would be easier to explore. Google Glass was suggested as an alternative. Multiple members voiced frustration over delays in receiving O1 Light hardware preorders, some placed over 3 months ago, and impatience grew due to the lack of updates on the preorder situation.

Mozilla AI and tinygrad Updates

tinygrad (George Hotz) ▷ #general (2 messages):

- Exploring Tinygrad's Intermediate Language: Users discuss the intermediate language of tinygrad and tips for debugging and visualization.

- Debugging and visualizing Tinygrad: Recommendations for running tinygrad with DEBUG=3 and using GRAPH=1 and GRAPHUOPS=1 for visuals.
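A small helper for launching a tinygrad script with the debugging flags recommended above. Setting the variables in the child process's environment means they are in place before tinygrad is imported. The helper names and the script path are hypothetical.

```python
import os
import subprocess
import sys

def tinygrad_debug_env(debug: int = 3, graph: bool = True, graph_uops: bool = True) -> dict:
    """Return a copy of the current environment with tinygrad debug flags set."""
    env = dict(os.environ)
    env["DEBUG"] = str(debug)   # verbosity level; the discussion suggests 3
    if graph:
        env["GRAPH"] = "1"      # graph visualization
    if graph_uops:
        env["GRAPHUOPS"] = "1"  # visualization of UOps
    return env

def run_with_debug(script_path: str) -> None:
    """Run a tinygrad script in a child process with the debug flags set."""
    subprocess.run([sys.executable, script_path], env=tinygrad_debug_env(), check=True)
```

For example, `run_with_debug("my_model.py")`, where `my_model.py` is a placeholder for your own tinygrad script.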

Mozilla AI ▷ #announcements (1 message):

- Mike Bird presents Open Interpreter: Details about Mike Bird's presentation on Open Interpreter and an invitation for questions. Participants are encouraged to engage during the discussion.

FAQ

Q: What are some recent updates in AI Model Developments?

A: Recent updates in AI Model Developments include Anthropic doubling the max output token limit for Claude 3.5 Sonnet in their API, Q-GaLore enabling training 7B models on 16GB GPUs, the Mosaic compiler generating efficient H100 code, and the release of Dolphin 2.9.3-Yi-1.5-34B-32k-GGUF model on Hugging Face.

Q: What are some discussions around AI Model Performance and Benchmarking?

A: Discussions around AI Model Performance and Benchmarking include a comparison between ChatGPT and Gemma 2 9B running via MLX LM, arguments about the limitations of synthetic data, the use of language models to evaluate other language models, and LLM-as-a-judge techniques for evaluating model outputs.

Q: What are some new features introduced by OpenRouter and their impact on the community?

A: OpenRouter has introduced the Qwen 2 7B Instruct model for free access to the community, enhancing the arsenal of AI tools for engineers. There have been contrasting views on Google's Gemini 1.0 and discussions about desired longer context windows in models like Llama 3.

Q: What are some ongoing discussions in the Modular (Mojo 🔥) community?

A: Ongoing discussions in the Modular (Mojo 🔥) community cover topics such as changes in partnership details with NVIDIA, issues faced during MAX Graph API Tutorial, problems with MAX Tensor and Graph imports, confusion with MAX installation, and requests for more reporting features in MAX.
