[AINews] RouteLLM: RIP Martian? (Plus: AINews Structured Summaries update) • Buttondown

Chapters

AI Twitter Recap and AI Models and Architectures

AI Discord Recap

Advanced AI Insights

Interconnects and Discussions on Various AI Topics

Torchtune, AI Stack Devs, Mozilla AI, MLOps @Chipro, DiscoResearch

HuggingFace Channels Highlights

HuggingFace NLP Discussions and Diffusion

Quantization in LM Studio

Hardware Discussion in LM Studio

CUDA MODE Updates

Perplexity AI Sharing

Nous Research AI Discussions

Modular (Mojo 🔥)

LangChain AI & Community Update

OpenAccess AI Collective (axolotl) General Help

Eleuther General and Research Discussions

LLM Finetuning (Hamel + Dan) ▷ #ankurgoyal_textsql_llmevals

AI Community Discussions

Subscription Form and Social Networks

AI Twitter Recap and AI Models and Architectures

This section contains recaps of AI Twitter activity generated by Claude 3 Opus. It also covers updates on AI models and architectures, such as advances in the Gemma 2 model family and improvements to the Block Transformer architecture.

AI Discord Recap

A high-level summary of AI-related discussions across Discord servers, covering technical advances, model releases, and community activity. Topics include model training, optimization techniques, new AI models, open-source tools, challenges in AI applications, and real-world deployments. The discussions cover specific releases such as the Gemma 2 and Claude 3.5 models and the Persona Hub synthetic-data collection, as well as GPU configurations, training mechanisms, and synthetic data generation. Community members share insights, troubleshooting tips, and feedback on topics such as the AutoUpdater in LM Studio, RAM optimization in Unsloth AI, and tokenizer mechanics in LLMs.

Advanced AI Insights

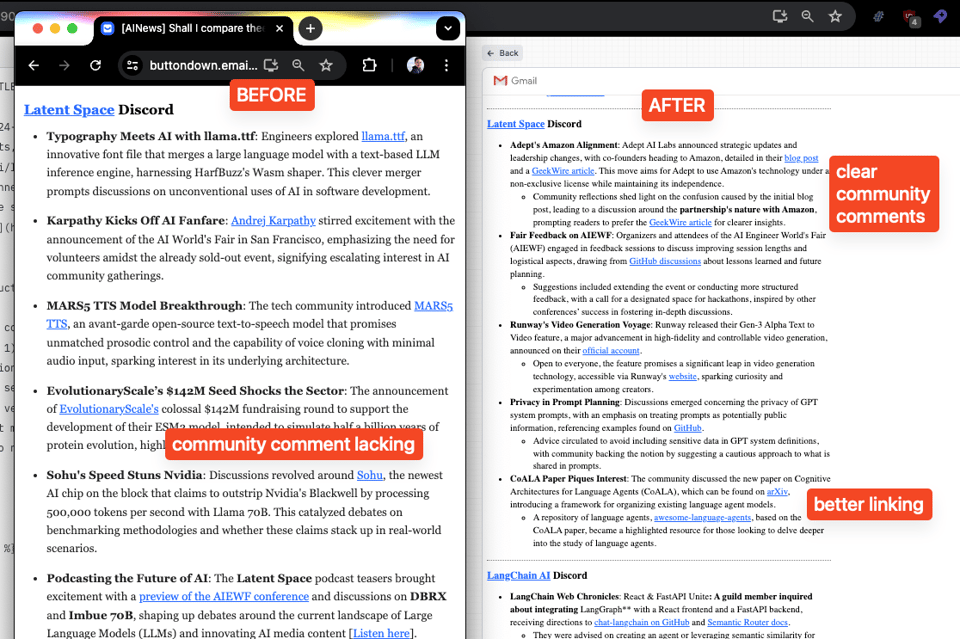

The discussions in this section cover a range of advanced AI topics. Highlights include Deepseek v2's performance on powerful CPUs, ongoing experiments with quantization, and the debut of sliding window attention in Gemma 2. The Stability.ai Discord explores challenges in training LoRAs and the hardware required to run Stable Diffusion. The CUDA MODE Discord discusses tools for coordinating meetings across time zones and the role of the log exp function in numerical stability. The Nous Research AI Discord focuses on a billion-persona dataset and the collaboration between Microsoft and OpenAI. The Latent Space Discord covers Adept AI Labs' updates and the launch of Runway's Gen-3 Alpha text-to-video feature. The LangChain AI Discord showcases integration techniques, such as combining React and FastAPI with LangGraph, and faster retrieval using Matryoshka RAG with llama_index.
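Two of the topics above are easy to make concrete. Assuming the CUDA MODE "log exp" discussion concerned the common log-sum-exp trick, and assuming a plain causal sliding window (the window size below is arbitrary, not Gemma 2's actual setting, and Gemma 2 interleaves such local layers with global-attention layers), this pure-Python sketch shows both ideas. It is illustrative only and not taken from any of the discussed codebases.

```python
import math


def logsumexp(xs: list[float]) -> float:
    """Numerically stable log(sum(exp(x) for x in xs)).

    Subtracting the maximum first keeps every exp() argument <= 0, so no
    term overflows; the maximum is added back outside the log.
    """
    if not xs:
        return float("-inf")
    m = max(xs)
    if m == float("-inf"):  # all inputs are -inf: the sum of exps is 0
        return m
    return m + math.log(sum(math.exp(x - m) for x in xs))


def sliding_window_causal_mask(seq_len: int, window: int) -> list[list[bool]]:
    """mask[i][j] is True when query position i may attend to key position j.

    Causal (j <= i) and local: only the `window` most recent positions,
    i.e. i - window < j <= i.
    """
    return [[i - window < j <= i for j in range(seq_len)] for i in range(seq_len)]


if __name__ == "__main__":
    # math.exp(1000.0) alone raises OverflowError; the stable form does not.
    print(logsumexp([1000.0, 1000.0]))  # 1000 + log(2)
    for row in sliding_window_causal_mask(5, window=2):
        print("".join("x" if allowed else "." for allowed in row))
```

With 5 positions and a window of 2, each printed row allows only the current position and the one before it.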

Interconnects and Discussions on Various AI Topics

This section covers discussions and updates on AI topics across several Discord channels, ranging from debates on AI agent development and novel data collection methods to strategic moves by companies such as Adept and Cognition AI. It also covers the potential of synthetic data generation, model valuations, and advances in reinforcement learning. Further discussions address difficulties setting up AI tools, improving long-term memory in AI systems, and adding vector search to public datasets.

Torchtune, AI Stack Devs, Mozilla AI, MLOps @Chipro, DiscoResearch

Torchtune Discord

- Discussion of the benefits of DPO training on robust datasets

- Successful fine-tuning of a Phi Mini model after adopting WandBLogger

- Technical discussion of logging fine-tuning runs with WandB for clearer insight into training
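For readers unfamiliar with DPO (Direct Preference Optimization), the per-pair objective from the DPO paper fits in a few lines. This is a minimal pure-Python sketch with illustrative argument names and an illustrative default `beta`, not Torchtune's implementation; real training code computes the same quantity on batched tensors.

```python
import math


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """DPO loss for a single (chosen, rejected) preference pair.

    Each argument is the summed log-probability of a full response under
    either the policy being trained or the frozen reference model.
    """
    # Implicit reward margin: how much more the policy (relative to the
    # reference model) prefers the chosen response over the rejected one.
    margin = (policy_chosen_logp - ref_chosen_logp) - (
        policy_rejected_logp - ref_rejected_logp
    )
    z = beta * margin
    # -log(sigmoid(z)) == log(1 + exp(-z)), computed without overflow.
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))
```

When the policy and reference agree, the margin is 0 and the loss is log 2 (about 0.693); it falls as the policy favors the chosen response more strongly than the reference does.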

AI Stack Devs (Yoko Li) Discord

- Featherless.ai offers subscription-based access to LLMs

- Featherless.ai is considering integrating TTS systems to give NPCs more varied voices

- New locations announced at Hexagen.World for virtual exploration

Mozilla AI Discord

- Facebook released the LLM Compiler model, which Mozilla packaged as llamafiles

- Efforts to integrate llamafile officially into Hugging Face to ease the user experience

- Discussion of running llamafile on various devices and their specific system requirements

- llamafile v0.8.9 now officially supports Android and Google's Gemma 2 architecture

MLOps @Chipro Discord

- Technical issues accessing the recording of an event on the transition from ML engineering to AI

- Data Talks Club's upcoming Zoomcamp focusing on open-source data pipelines

DiscoResearch Discord

- Problems training a BPE tokenizer on a 50 GB corpus

- An engineer hit out-of-memory (OOM) errors during training and sought expert help
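One way BPE training can run out of memory is by materializing the whole corpus at once. Classic BPE (Sennrich et al.) only needs a table of word frequencies, which can be built by streaming the text line by line. The pure-Python sketch below illustrates that idea; it is a toy for exposition (no byte-level fallback, no end-of-word markers, slow merge loop) and is not the tool or fix used in the DiscoResearch discussion.

```python
from collections import Counter
from typing import Iterable


def count_words(lines: Iterable[str]) -> Counter:
    """Stream the corpus one line at a time, keeping only word frequencies.

    Memory grows with the number of distinct words, not with corpus size.
    """
    counts: Counter = Counter()
    for line in lines:
        counts.update(line.split())
    return counts


def merge_pair(symbols: tuple[str, ...], pair: tuple[str, str]) -> tuple[str, ...]:
    """Replace every non-overlapping occurrence of `pair` with one merged symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)


def learn_bpe(word_counts: Counter, num_merges: int) -> list[tuple[str, str]]:
    """Learn up to `num_merges` BPE merges from a word-frequency table."""
    vocab = {tuple(word): freq for word, freq in word_counts.items()}  # words as char tuples
    merges: list[tuple[str, str]] = []
    for _ in range(num_merges):
        pair_counts: Counter = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:  # every word is already a single symbol
            break
        best = max(pair_counts, key=pair_counts.get)  # most frequent adjacent pair
        merges.append(best)
        new_vocab: dict[tuple[str, ...], int] = {}
        for symbols, freq in vocab.items():
            key = merge_pair(symbols, best)
            new_vocab[key] = new_vocab.get(key, 0) + freq
        vocab = new_vocab
    return merges
```

For a file on disk, the open file object can be passed straight to `count_words`, since iterating a text file yields one line at a time.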

HuggingFace Channels Highlights

HuggingFace Highlights

- Hugging Face Course Update Status: Courses are maintained regularly with updated content, but the status of courses such as the Diffusion course and the Community Computer Vision course is unclear. Users are advised to check the content directly on the Hugging Face website.

- Biometric Gait Recognition with 2D Video Cameras: A gait-recognition project reached 70% accuracy in identifying individuals. Next steps include finding more datasets and training with triplet loss; collaboration and knowledge sharing are encouraged.

- Custom ML Library for Garmin Devices: A project is underway to replicate Apple's double-tap gesture on Garmin devices with a custom ML library written in Monkey C. Collaborators and Monkey C experts are invited to join.

- Understanding Tree-Sitter S-Expressions: Members explored Tree-sitter s-expressions using resources such as the official documentation. Tree-sitter provides a C API along with higher-level bindings for various languages.

- Engaging Explainers of ML Concepts: Members highlighted the importance of clear explanations when learning ML concepts.

- Kickstarter Project Fuses Anime and AI: The project focuses on creating anime and manga artwork with AI.

- Firecrawl Turns Websites into Data: An open-source tool that converts websites into clean Markdown or structured data, celebrated for passing 7,000 stars on GitHub.

- LoRA Model for SDXL Unveiled on Hugging Face: The model features unique creature prompts. Users praised it and pointed to related models such as alvdansen/BandW-Manga.

- LangChain Integrates with Postgres: An article discusses integrating LangChain with Postgres.

- AI Explores Mesh Generation Techniques: A video and demo present advances in AI-based mesh generation, backed by code and research.
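The gait-recognition item mentions training with triplet loss. As a minimal sketch (plain Python, Euclidean distance, and a margin of 1.0 are assumptions made here, not details from the project), the loss on one (anchor, positive, negative) triplet of embeddings is:

```python
import math


def euclidean(a: list[float], b: list[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(
    anchor: list[float], positive: list[float], negative: list[float], margin: float = 1.0
) -> float:
    """max(0, d(anchor, positive) - d(anchor, negative) + margin).

    The anchor and positive come from the same person's gait, the negative
    from someone else; the loss reaches zero once the negative is at least
    `margin` farther from the anchor than the positive is.
    """
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)
```

Minimizing this over many triplets pulls embeddings of the same person together and pushes different people apart, which is why it suits open-set identification tasks like gait recognition.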

HuggingFace NLP Discussions and Diffusion

- Urgent Help Needed with GEC Prediction List: A member sought immediate advice regarding the shape of their GEC prediction list and received community assistance.

- Top 10 Deep Learning Algorithms Video: A user shared a YouTube video giving a brief explanation of each algorithm, and was warned against cross-posting.

- Seeking Hands-on LLM Learning Methods: A member inquired about effective hands-on learning methods for LLMs.

- Incorporating RBF Layer in Transformer Model: A user asked about replacing a layer in a transformer model with an RBF layer.

- Link Mentioned: Top 10 Deep Learning Algorithms intro in 1 min
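For the RBF question above: a radial basis function layer replaces a linear map plus activation with similarities to a set of centers. The sketch below assumes Gaussian kernels and a fixed width `gamma`; it is pure Python for readability, whereas a real transformer layer would use batched tensor operations with learnable centers. Which transformer layer to replace is not specified in the discussion.

```python
import math


def rbf_layer(x: list[float], centers: list[list[float]], gamma: float = 1.0) -> list[float]:
    """Gaussian RBF features: phi_j(x) = exp(-gamma * ||x - c_j||^2).

    Maps one d-dimensional vector (e.g. a token's hidden state) to one
    activation per center; each activation is 1.0 at its center and decays
    toward 0 with squared distance.
    """
    return [
        math.exp(-gamma * sum((xi - ci) ** 2 for xi, ci in zip(x, c)))
        for c in centers
    ]
```

The output width equals the number of centers, so the layer can also change dimensionality, much like the linear projection it would replace.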

Quantization in LM Studio

Users discussed trading model size against output quality using experimental quantization types in LM Studio, such as Q5_K_L and IQ4_XS. The goal is to shrink the memory footprint without degrading output quality. Feedback was mixed, and the community hopes to refine these quantizations before wider adoption. Some quantized models had problems, so users switched to alternatives such as bartowski's quantizations, which worked without significant issues.
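The size/quality trade-off these quant types negotiate comes from storing weights as small integers plus per-block scales. The sketch below shows the simplest version of that idea, symmetric absmax quantization in blocks of 32 weights. It is a simplified illustration, not the actual GGUF formats behind names like Q5_K_L or IQ4_XS, which use more elaborate schemes.

```python
def quantize_block(values: list[float], bits: int = 4) -> tuple[float, list[int]]:
    """Symmetric absmax quantization of one block to signed `bits`-bit integers."""
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit; codes are clipped to [-8, 7]
    absmax = max(abs(v) for v in values)
    scale = absmax / qmax if absmax > 0 else 1.0  # one scale per block
    codes = [max(-qmax - 1, min(qmax, round(v / scale))) for v in values]
    return scale, codes


def dequantize_block(scale: float, codes: list[int]) -> list[float]:
    return [scale * c for c in codes]


def quantize(
    values: list[float], block_size: int = 32, bits: int = 4
) -> list[tuple[float, list[int]]]:
    """Split a weight vector into fixed-size blocks, each with its own scale."""
    return [
        quantize_block(values[i:i + block_size], bits)
        for i in range(0, len(values), block_size)
    ]
```

With a 16-bit scale stored per 32 weights, this layout costs 4 + 16/32 = 4.5 bits per weight, and the round-trip error of each weight is at most half its block's scale.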

Hardware Discussion in LM Studio

Mix smaller models with lower hardware needs: A user suggested that combining 0.5B models could make them highly usable on 4 GB computers, potentially bridging the hardware gap. Discussions also covered CPU versus GPU speed differences, with Intel's IPEX-based fork of llama.cpp cited for better GPU performance.

Server Power Use: It's Electrifying! Users shared detailed power-usage stats for various LLM models and setups, including a server with dual Xeon E5 CPUs and Tesla P40 GPUs drawing up to 858 W. Another user compared their own server, where nvidia-smi reported figures around 70 W and 180 W, sparking a discussion on efficient power monitoring and hardware optimization.

4090 vs Dual 4080s: GPU Showdown. A user asked about the performance trade-offs between a single 4090 and dual 4080s and was advised that a single 4090 usually performs better, since inference slows down when a model is split across two cards. Further discussion suggested that for large models such as 70B, a single 24 GB GPU is preferable to a dual 16 GB setup, reinforcing the single-4090 recommendation.
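A back-of-envelope estimate helps when comparing these options: weight memory is roughly parameters × bits per weight ÷ 8. By that estimate a 70B model needs about 39 GB at 4.5 bits per weight (roughly a 4-bit quant), more than either a single 24 GB card or two 16 GB cards provide, so presumably part of the model is offloaded to the CPU or a lower-bit quant is used. The helper below is a rough sketch that counts weights only.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes.

    billions of parameters x bits per weight / 8 bits per byte = GB.
    Ignores the KV cache, activations, and runtime overhead, which add more.
    """
    return params_billion * bits_per_weight / 8


if __name__ == "__main__":
    for bpw in (16, 8, 4.5):
        print(f"70B at {bpw} bits/weight: ~{weight_memory_gb(70, bpw):.1f} GB")
```

This prints about 140.0, 70.0, and 39.4 GB for 16-, 8-, and 4.5-bit weights respectively.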

Trading Setup: Mac vs PC. A user weighed running trading software on a Mac Studio against a PC setup, highlighting hardware flexibility as crucial for AI workloads. Another suggested that developing trading software for the Mac would make the switch more feasible.

CUDA MODE Updates

This section covers updates from the CUDA MODE Discord: resolving a kernel launch bottleneck by using FP16, difficulties optimizing bitpacking for large tensors, a new PR introducing the FlexAttention API to PyTorch, good first issues for new CUDA contributors, and Nscale's benchmarks of AMD MI300X GPUs with GEMM tuning. Other threads covered book-related stability issues, questions about PMPP editions, contributions to torchao, and off-topic chat.
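Bitpacking here means storing several sub-byte values in one byte or word so that, for example, 4-bit weights take half the memory of int8. The pure-Python sketch below packs two unsigned 4-bit values per byte in low-nibble-first order; that layout is an arbitrary choice for illustration, not torchao's format, and a GPU kernel would apply the same shifts and masks in parallel across a large tensor.

```python
def pack_uint4(values: list[int]) -> bytes:
    """Pack unsigned 4-bit values (0..15) two per byte, low nibble first."""
    if any(not 0 <= v <= 15 for v in values):
        raise ValueError("values must be in [0, 15]")
    if len(values) % 2:
        values = values + [0]  # pad odd-length input with a zero nibble
    return bytes(lo | (hi << 4) for lo, hi in zip(values[::2], values[1::2]))


def unpack_uint4(packed: bytes, n: int) -> list[int]:
    """Inverse of pack_uint4; `n` drops the padding nibble if one was added."""
    out: list[int] = []
    for byte in packed:
        out.extend((byte & 0x0F, byte >> 4))  # low nibble, then high nibble
    return out[:n]
```

Five 4-bit values fit in three bytes (one nibble of padding), versus five bytes as int8.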

Perplexity AI Sharing

- Minecraft Repair Mechanics Misleads Kids: Discusses criticism that Minecraft's repair mechanics may mislead children about how real-world tools are repaired, along with community concerns and reflections on the game's educational value.

- Impact of Global Warming on Smartphones: Highlights discussions on the increasing impact of global warming on smartphone charging performance, along with shared experiences, concerns, and debates on solutions for resilient battery technologies.

- Starting Perplexity: A Tale: Shares the story behind starting Perplexity, including reflections on the startup journey, insights, and opinions from members about the challenges faced and perseverance required.

Nous Research AI Discussions

Self-Retrieval Paper Dissection:

- Discussion of the self-retrieval paper raised concerns about repetition during training and model overfitting.

- Members expressed frustration and decided against using the paper's approach because of these concerns.

Innovative Dataset Search Tool for HF:

- Introduction of a new tool, holdingfaceSEARCH, for dataset searching and local embeddings for RAG tasks.

- Discussion of its flexibility and the dataset-handling techniques it encourages.
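The retrieval step behind local-embedding RAG can be sketched in a few lines. The example assumes embeddings have already been computed by some local model (not shown) and ranks documents by cosine similarity with brute-force search; it does not reflect how holdingfaceSEARCH itself is implemented.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: list[float], docs: dict[str, list[float]], k: int = 3) -> list[str]:
    """Return the ids of the k documents whose embeddings are most similar to the query."""
    ranked = sorted(docs, key=lambda doc_id: cosine(query, docs[doc_id]), reverse=True)
    return ranked[:k]
```

The retrieved ids would then be used to fetch the matching text chunks and place them in the LLM prompt.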

Groq and The Future of Custom Chips:

- Exploration of Groq's potential in challenging Nvidia's dominance in AI hardware.

- Debate on the competitive landscape and the future of LPUs (Language Processing Units).

Microsoft and OpenAI's Mega Data Center:

- Collaboration on the Stargate project, a data center reportedly costing over $100 billion to support their AI ambitions.

- Discussions on environmental and logistical impacts of the project.

Anthropic's Market Strategy Compared to OpenAI:

- Positive feedback on Anthropic's approach, contrasted with OpenAI's.

- Humorous remarks alongside insights into industry dynamics and strategic shifts.

Modular (Mojo 🔥)

- Mojo Marathons kick off!: A monthly competition hosted by @Benny-Nottonson challenges participants to create an optimized matrix multiplication algorithm using Mojo.

- Benchmarking suggestions for better precision: Participants were advised to use the standard library's benchmark module for precise results in the Mojo Marathons challenge.

- Compilation issues with main.mojo: Errors compiling main.mojo in the July challenge may come from using the wrong Mojo build.

- Matrix multiplication function output issue: A participant reported incorrect output, with an AssertionError raised when comparing matrix multiplication results.
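The Mojo Marathons challenge centers on optimizing matrix multiplication and checking the result against a reference. The sketch below illustrates that workflow in Python (not Mojo, and not the challenge's actual harness): a naive reference, a loop-tiled variant of the kind one would port to a compiled language, and a tolerance-based comparison. The source does not say what caused the reported AssertionError; comparing floating-point results exactly is just one common pitfall, since reordering a summation can change rounding.

```python
import math
import random

Matrix = list[list[float]]


def matmul_naive(a: Matrix, b: Matrix) -> Matrix:
    """Reference triple loop: c[i][j] = sum over p of a[i][p] * b[p][j]."""
    n, k, m = len(a), len(b), len(b[0])
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(m)] for i in range(n)]


def matmul_tiled(a: Matrix, b: Matrix, tile: int = 4) -> Matrix:
    """Loop-tiled variant. Tiling improves cache reuse in compiled code;
    in pure Python it only demonstrates the loop structure."""
    n, k, m = len(a), len(b), len(b[0])
    c = [[0.0] * m for _ in range(n)]
    for i0 in range(0, n, tile):
        for p0 in range(0, k, tile):
            for j0 in range(0, m, tile):
                for i in range(i0, min(i0 + tile, n)):
                    for p in range(p0, min(p0 + tile, k)):
                        a_ip = a[i][p]
                        for j in range(j0, min(j0 + tile, m)):
                            c[i][j] += a_ip * b[p][j]
    return c


def assert_close(x: Matrix, y: Matrix, rel_tol: float = 1e-9, abs_tol: float = 1e-12) -> None:
    """Element-wise comparison with a tolerance instead of exact equality."""
    assert len(x) == len(y) and all(len(r) == len(s) for r, s in zip(x, y)), "shape mismatch"
    for row_x, row_y in zip(x, y):
        for u, v in zip(row_x, row_y):
            assert math.isclose(u, v, rel_tol=rel_tol, abs_tol=abs_tol), (u, v)


if __name__ == "__main__":
    random.seed(0)
    a = [[random.random() for _ in range(7)] for _ in range(5)]
    b = [[random.random() for _ in range(6)] for _ in range(7)]
    assert_close(matmul_naive(a, b), matmul_tiled(a, b, tile=3))
    print("tiled result matches reference")
```

Using non-square shapes and a tile size that does not divide them evenly, as in the check above, exercises the edge-handling `min(...)` bounds that tiled kernels often get wrong.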

LangChain AI & Community Update

- Discussion on using .astream_events() to stream LangGraph state and manage state continuity using thread IDs.

- Streaming and checkpointing in LangGraph were explained: checkpoints retain previous states so they can be accessed later and used to manage state transitions.

- The differences between RAG and semantic-similarity example retrieval were highlighted, along with ways to combine the two.

- LangGraph state management techniques covered how to access the graph's state after execution.

- Additional resources, such as LangFlow and LangChain's conceptual guides, were mentioned.
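To make the checkpointing idea concrete without relying on LangGraph's actual classes, here is a hypothetical, framework-free sketch: state snapshots are stored per thread ID, so each thread resumes from its own latest state and earlier states remain accessible. The class and function names are invented for illustration; LangGraph's real checkpointers and `.astream_events()` have their own interfaces, documented by LangChain.

```python
from collections import defaultdict
from copy import deepcopy
from typing import Any, Optional

State = dict[str, Any]


class ToyCheckpointer:
    """Hypothetical in-memory checkpointer (illustration only, NOT LangGraph's API).

    Keeps a snapshot of the state after every step, grouped by thread ID, so a
    thread can resume from its latest state and earlier states stay accessible.
    """

    def __init__(self) -> None:
        self._snapshots: dict[str, list[State]] = defaultdict(list)

    def save(self, thread_id: str, state: State) -> None:
        self._snapshots[thread_id].append(deepcopy(state))

    def latest(self, thread_id: str) -> Optional[State]:
        snapshots = self._snapshots.get(thread_id)
        return deepcopy(snapshots[-1]) if snapshots else None

    def history(self, thread_id: str) -> list[State]:
        return deepcopy(self._snapshots.get(thread_id, []))


def run_step(checkpointer: ToyCheckpointer, thread_id: str, user_msg: str) -> State:
    """One hypothetical graph step: resume the thread, update its state, checkpoint it."""
    state = checkpointer.latest(thread_id) or {"messages": []}
    state["messages"].append(user_msg)
    checkpointer.save(thread_id, state)
    return state
```

Deep copies keep stored snapshots immutable from the caller's side, which is what lets earlier states be inspected after later steps have run.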

OpenAccess AI Collective (axolotl) General Help

A member asked about keeping training prompt formats consistent, which another member confirmed. Loading errors with phi-3-small models were discussed, and the warmup_steps and warmup_ratio parameters were clarified. A member working on an offline setup also hit a tiktoken import error: with no network access, it failed with requests.exceptions.ConnectionError. Throughout, members helped one another troubleshoot and shared useful feedback.
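On warmup_steps versus warmup_ratio: the two are alternative ways to specify the same warmup length. The sketch below mirrors the precedence used by Hugging Face's `TrainingArguments.get_warmup_steps` in the 4.x releases (a positive warmup_steps overrides warmup_ratio, which is otherwise a fraction of total training steps, rounded up). Whether axolotl passes these settings through unchanged is an assumption here, so check its documentation for your version; the function names are hypothetical.

```python
import math


def resolve_warmup_steps(total_steps: int, warmup_steps: int = 0, warmup_ratio: float = 0.0) -> int:
    """A positive warmup_steps takes precedence; otherwise use a fraction of training."""
    if warmup_steps > 0:
        return warmup_steps
    return math.ceil(total_steps * warmup_ratio)


def linear_warmup_lr(step: int, base_lr: float, warmup: int) -> float:
    """Ramp linearly from 0 to base_lr over `warmup` steps, then hold at base_lr.

    (Real schedules usually decay after warmup; that part is omitted here.)
    """
    if warmup > 0 and step < warmup:
        return base_lr * step / warmup
    return base_lr
```

For 1,000 training steps, warmup_ratio=0.1 yields 100 warmup steps, while setting warmup_steps=20 alongside it yields 20.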

Eleuther General and Research Discussions

The Eleuther general and research channels covered leaderboard benchmark delays caused by limited compute, the compatibility and performance of tools and models such as lm-eval, Hugging Face queue issues, and the discovery of an erasure effect in token representations. On the research side, discussion covered advances in RL agents such as Δ-IRIS, optimizer improvements such as Adam-mini, which cuts memory usage, and the Flora proposal for high-rank updates. Members also explored challenges in token representations and the positioning problem with RoPE-before-QK. Separately, the LAION channel discussed skepticism about the 27B model, struggles with ChatGPT models, and a preference for Gemini 1.5 Pro over ChatGPT. Users expressed interest in models with multilingual capabilities, and there were discussions of synthetic data creation methods and the challenges of evaluating fully fine-tuned models.
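Because the RoPE discussion hinges on where the rotation is applied, a reminder of what RoPE does may help: it rotates each pair of query/key dimensions by a position-dependent angle, so the query-key dot product depends only on the relative offset between positions. This pure-Python sketch uses the interleaved-pair convention and base 10000 from the original RoPE paper (many implementations instead split the vector into halves); it does not reproduce the specific RoPE-before-QK setup discussed.

```python
import math


def rope(x: list[float], pos: int, base: float = 10000.0) -> list[float]:
    """Rotate each pair (x[2k], x[2k+1]) by the angle pos * base^(-2k/d)."""
    d = len(x)
    if d % 2:
        raise ValueError("RoPE needs an even head dimension")
    out: list[float] = []
    for i in range(0, d, 2):  # i = 2k
        theta = pos * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        out.extend((x[i] * c - x[i + 1] * s, x[i] * s + x[i + 1] * c))
    return out


def dot(a: list[float], b: list[float]) -> float:
    return sum(p * q for p, q in zip(a, b))
```

For example, `dot(rope(q, 5), rope(k, 2))` equals `dot(rope(q, 13), rope(k, 10))` up to rounding, because both pairs of positions are 3 apart.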

LLM Finetuning (Hamel + Dan) ▷ #ankurgoyal_textsql_llmevals

Braintrust Tools for Measuring Outputs:

A user inquired about tools for measuring the output of tool calls and complex agentic tool calling workflows. They were directed to the Braintrust cookbook recipes for details on these tools.

- The user thanked them, noting how easy Braintrust makes it to debug and to combine data, tasks, and scoring via Eval(). They said they enjoyed the tool's UX and found it helpful.

Real-time Observability via Logs Tab:

The user asked about real-time observability and whether the 'Logs' tab serves this purpose, requesting documentation or cookbook references. They were pointed to the general docs for logs and to a specific cookbook on using logs and evals together.

- It was clarified that the tracing set up for evaluations will work for logs as well, and the Logs tab in the Braintrust UI would automatically update in real-time. The documentation also covers logging interactions, debugging customer issues, and capturing user feedback.

Human Review Feature:

There was a brief question about whether Braintrust's 'human review' feature applies to logs, experiments, or both. It was confirmed that the 'Trace' data structure is identical across experiments and logs, so human review works in both places.

- A new feature for human review in datasets was also mentioned, enabling the integration of human feedback from diverse sources. More details were provided in the Braintrust guide for human review.

AI Community Discussions

- Cohere Support and Resources: Cohere offers resources for integrating LLMs with other AI techniques such as reinforcement learning.

- Coral API Rate Limits: Users expressed frustration over rate limits on Coral trial keys, and others recommended upgrading to production keys for higher throughput.

- Aya-23 Model Queries: Discussion on Aya-23 model versions and confusion around the 9B model.

- AI Reasoning Enhancements by Cohere: CEO Aidan Gomez discusses efforts to enhance AI reasoning in a YouTube video.

- Support for Academic Research: A GitHub repository of development tools for academic research was shared.

- Rig Fully Integrates with Cohere Models: The Rig library now fully integrates with all Cohere models, and a feedback program was launched for Rust developers.

- Community Notebook for Mapping Citations: Community member shares a notebook for mapping citations and documents.

- Featherless.ai Platform Launch: Featherless.ai launches a new platform offering access to models on Hugging Face.

- Event Recording and Data Talks Club LLM Zoomcamp: Details about a missed event recording and an upcoming Zoomcamp on building data pipelines.

Subscription Form and Social Networks

This section of the webpage contains a subscription form for the AI News newsletter. Users can input their email address and click 'Subscribe' to join the mailing list. Additionally, there are links to the AI News Twitter account and newsletter. The footer also provides links to AI News on different social networks. The newsletter is powered by Buttondown, a platform for starting and expanding newsletters.

FAQ

Q: What are some advancements in AI models and architectures mentioned in the essay?

A: Advancements mentioned include the Gemma 2 model family, Block Transformer architecture improvements, Deepseek v2's performance on powerful CPUs, and sliding window attention in Gemma 2. Related discussions covered challenges in training LoRAs and the hardware requirements for running Stable Diffusion (Stability.ai Discord), as well as integrating LangChain with Postgres.

Q: What are the key topics of discussion in the various Discord channels related to AI technology and community engagement?

A: Key topics include model training, optimization techniques, new AI models such as Gemma 2 and Claude 3.5, the Persona Hub dataset, challenges in AI applications, real-world implementations, difficulties with AI tools, long-term memory in AI systems, and integrating vector search into public datasets.

Q: What are some highlights from the HuggingFace community discussions mentioned in the essay?

A: Highlights include community discussions of deep learning algorithms, biometric gait recognition, custom ML libraries for Garmin devices, and Tree-sitter s-expressions, along with Firecrawl, a new SDXL LoRA model, and an article on integrating LangChain with Postgres.

Q: What are some technical discussions from the CUDA MODE Discord channel mentioned in the essay?

A: Technical discussions include resolving kernel launch bottleneck with FP16, struggles with bitpacking optimization for large tensors, introducing a new FlexAttention API PR for PyTorch, identifying good first issues for CUDA contributors, and benchmarks for Nscale AMD MI300X GPUs with GEMM tuning.

Q: What topics were covered in the Braintrust discussion (LLM Finetuning Discord) about tool calls, complex workflows, and real-time observability?

A: Topics include measuring the output of tool calls and agentic workflows using Braintrust cookbook recipes, real-time observability through the 'Logs' tab (tracing set up for evals also works for logs), and the human review feature for logs, experiments, and datasets.

Q: What are some specific community interactions and tools discussed in the AI News newsletter sections?

A: Community interactions include discussions of rate limits on Coral trial keys, Aya-23 model versions, Cohere's efforts to enhance AI reasoning, a GitHub repository of development tools for academic research, the Rig library's integration of Cohere models, and a shared notebook for mapping citations and documents.

Q: What is the primary focus of the AI News newsletter subscription form and links provided on the webpage?

A: The form lets readers subscribe to the AI News newsletter for updates, and the page links to the AI News Twitter account, the newsletter, and AI News on other social networks.
