[AINews] Rombach et al: FLUX.1 [pro|dev|schnell], $31m seed for Black Forest Labs • ButtondownTwitterTwitter

Chapters

AI Twitter Recap

AI Reddit Recap - Google's Gemma 2 Release and Ecosystem

AI Model Releases and Community Discussions

Latent Space Discord

Cognitive Processes in Web Searches Using LLM-Driven Agents

HuggingFace AI Finds and Developments

Costs and Initiatives Discussion

OpenAI ▷ #torch

Cuda Mode Discussions

Stability.ai (Stable Diffusion) Announcements

Discord Interactions Highlights

LlamaIndex AI Discussions

Modular (Mojo 🔥) General Discussion

Issues and Solutions in Development

Tinygrad, LAION, Torchtune, and MLOps @Chipro Discussions

AI Twitter Recap

AI Twitter Recap

-

Google DeepMind released Gemma 2, a new family of open-source AI models, including a 2 billion parameter model (Gemma-2 2B) that has achieved impressive performance:

- @GoogleDeepMind announced Gemma-2 2B, outperforming models 10x its size and surpassing GPT-3.5-Turbo-0613.

- @lmsysorg reported Gemma-2 2B achieved a score of 1130 on the Chatbot Arena.

- @rohanpaul_ai highlighted that Gemma-2 2B outperforms GPT-3.5 models on Chatbot Arena.

- @fchollet noted Gemma 2-2B as the best model for its size.

-

Criticisms, benchmarks, and comparisons were shared by various users:

- @bindureddy criticized Human Eval Leaderboard and GPT-4o-mini rankings.

- @Teknium1 pointed out a discrepancy in scores and MMLU performance for Gemma-2 2B.

-

Government stance and AI in coding and development were also discussed:

- US Department of Commerce issued policy supporting open-weight models.

- @Ylecun praised NTIA report endorsing open-source AI platforms.

- @Svpino discussed limitations of current AI coding tools and emphasized passive AI potential.

-

Other notable AI developments covered real-time video generation, SAMv2 for video segmentation, and faster ternary inference.

-

Memes and humor were also part of the Twitter recap.

AI Reddit Recap - Google's Gemma 2 Release and Ecosystem

AI Reddit Recap - Google's Gemma 2 Release and Ecosystem

-

Google has expanded its Gemma AI lineup with three new products: Gemma 2 2B, ShieldGemma, and Gemma Scope. The specifics of these products are not provided, but the launch indicates Google's ongoing development of AI offerings under the Gemma family.

-

Google released Gemma-2 2b, trained on 2 trillion tokens, with 4bit quantized versions available for various models. They introduced a method for 2x faster finetuning with Flash Attention v2 support for Gemma-2, along with links to resources including Colab notebooks and quantized models on Hugging Face.

-

Google has released a sparse auto-encoder tool for interpreting Gemma 2 and 9b models, aiming to enhance interpretability, potentially encouraging transparency in AI development. Users can visualize layer activations for each token, aiding in research into various concepts like refusal removal and model lying detection. The tool also opens up possibilities for fine-tuning to promote specific themes in AI models, sparking ideas for creative AI experiences such as real-time scoring of lying probability in an interrogation game.

AI Model Releases and Community Discussions

Charts like heatmap space are gaining community interest for its potential integration into Hugging Face profiles. Crafting Semantic Parsers for Solr involves teaching a Large Language Model to interpret queries for Apache Solr. Victoria Lin noted gains in MoMa 1.4B. Decoding mechanisms like speculative decoding and Bitnet's finetuning approach are topics of discussion. The necessity of LangChain and ways to participate in a cost-free AI project are also explored. The section also covers the new multi-modal architecture Chameleon, continuous fine-tuning, and the fast-paced developments in the AI community.

Latent Space Discord

Llama 3.1 Touches Nerve in Quality Debate

- The Together AI blog spurred debate on Llama 3.1 by spotlighting variances in performance due to different implementation practices by inference providers, raising concern for model consistency. Dmytro Dzhulgakov drew the community’s attention to potential result cherry-picking and emphasized the cruciality of clear methodologies in model evaluation, igniting extensive discussion on this thread.

Sybill Secures Millions for AI-Enhanced Selling

- Sybill has secured a potent $11M Series A to refine their personal assistant AI for sales reps, with prominent backers like Greystone Ventures (announcement details). The AI sales tool spectrum is seeing a spark of innovation with Sybill's solution, cloning sales reps' voices to engineer more relevant follow-ups.

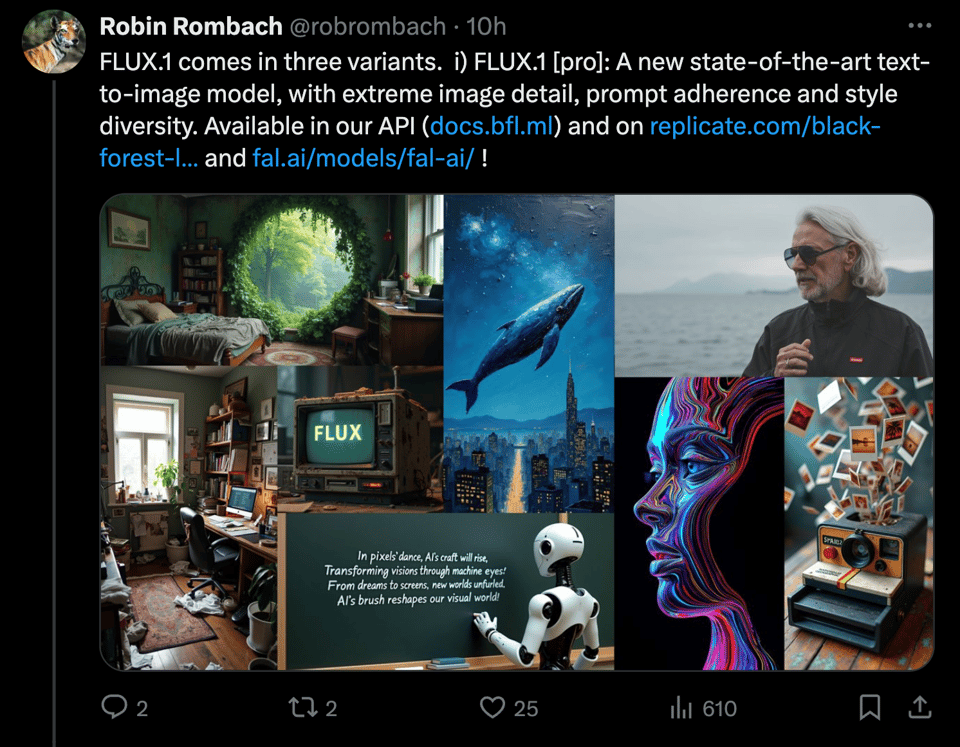

Black Forest Labs Breaks Ground with FLUX.1

- Black Forest Labs, featuring ex-Stable Diffusion wizards, debut their groundbreaking text-to-image model FLUX.1, inclusive of a robust 12B parameter version (see announcement). The pro iteration of FLUX.1 is currently live on Replicate for trials, displaying an edge over others in the space.

LangGraph Studio Unveils New Horizons for Agentic Apps

- LangChain propels IDE innovation with the launch of LangGraph Studio, built to streamline the creation and debugging of agentic applications (announcement tweet). The agent-focused IDE marries LangSmith, boosting efficiency and teamwork for developers in the realm of large language models.

Meta MoMa Transforms Mixed-Modal Modeling

- Meta's novel MoMa architecture accelerates the pre-training phase for mixed-modal language models, employing a mixture-of-experts approach (accompanying paper). The architecture is tailored to juggle and make sense of mixed-modal sequences effectively, marking a step forward in the domain.

Cognitive Processes in Web Searches Using LLM-Driven Agents

The study addresses challenges in information seeking and aims to enhance modern search-assisted models. Topics discussed include enthusiasm for NVIDIA receiving public funds, maintenance of professional dialogue in the community, issues with GPT-2 performance, discussions on optimizing embeddings in tinygrad, challenges with function complexities, impressive feats of ChatGPT in various languages, launch of Black Forest Labs and FLUX.1 model, experiments with activation functions, debates on regularization techniques, a webinar on enhancing recommendation systems with LLMs and generative AI, workshop on construction of robust ELT pipelines, emphasis on NLP and genAI in conferences, and a debate on the ROI of genAI.

HuggingFace AI Finds and Developments

This section discusses various exciting developments within the HuggingFace AI community. Topics range from the unveiling of a new Object Eraser model and the Evolution of AI bots article to Understanding Knowledge Distillation. Additionally, innovative projects such as TinyML bird detection and Infinite Sands utilizing generative AI are highlighted. The section also covers the launch of the Ai + i podcast series for discussing AI models, along with a Model Release Heatmap space gaining attention. Members also engage in discussions related to LLM models for PDF table and checkbox detection, training LLM models for Solr search queries, and building AI systems for aphasic patients. Moreover, ongoing debates and interactions occur in different channels such as diffusion-discussions, general discussions, and ask-about-LLMs, exploring topics like speculative decoding, dynamic memory systems, and Bitnet's finetuning method.

Costs and Initiatives Discussion

- Another member confirmed that the project doesn't cost anything and suggested following the steps in a pending PR.

- A participant mentioned the project is cost-free; the next steps involve instructions upon new PR submission.

- Users confirmed multi-GPU training success but noted earlier installation challenges, requiring troubleshooting setups and creating a new environment.

- Clarifications were provided on Unsloth Crypto Runner, emphasizing AES/PKI-based cryptography and GPU utilization.

- Success in finetuning Qwen with Continuous Fine-tuning Without Loss, members excitedly suggesting writing a tutorial for documentation clarity.

- Discussion on merging LoRA adapters and 4-bit models raised concerns about upscaling 4-bit models to 16-bit, potentially propagating fake 16-bit models in the community.

- User Nisten's hacking of Bitnet for finetuning resulted in a 74MB model running at 198 tokens per second on 1 CPU core, described as 'witchcraft' and set to be open-sourced via Skunkworks AI.

OpenAI ▷ #torch

A member was unable to run the video predictor example notebook from sam2. Despite trying various changes on their end, they could not get it to work and sought community advice.

The same member found a Google Colab notebook that works with their configuration. They thanked the contributor on the relevant GitHub issue for providing a solution.

Cuda Mode Discussions

This section covers various conversations in Discord channels related to CUDA mode discussions. The interactions range from Google Colab examples to detailed discussions on hardware design choices, deep learning techniques, and fine-tuning operations. Key topics include the AMPere A100 SM organization, speculation on hardware design choices, QAT flow for PyTorch model accuracy improvement, RoPE scaling, and the debate between .py and .ipynb usability. Additionally, there are insights shared on GELU optimization, implementation issues, reference models like TorchChat, and discussions on utilizing L2 latency as a hyperparameter.

Stability.ai (Stable Diffusion) Announcements

Stability AI has launched Stable Fast 3D, a model that can create detailed 3D assets from a single image in just 0.5 seconds. The model includes features like UV unwrapped mesh, material parameters, and albedo colors. Additionally, the model offers quad or triangle remeshing options with minimal processing time. Users can watch a video for detailed model improvements. Stable AI's Creative Upscaler was also discussed, clarifying that it is not a real Stability AI product. The community recommended alternative upscaling techniques using ERSGAN, transformers, and multi-stage workflows shared on community forums. The Flux model release by Black Forest Labs was highly welcomed by the community, noting significant improvements in image quality and parameter count, with exceptional results in rendering hands and fingers.

Discord Interactions Highlights

This section provides insights into various interactions and discussions from several channels on Discord. It includes discussions about the ICML Mech Int Workshop recording availability, compliments on Gemma Scope progress, issues raised within lm-eval benchmarking, recent industry news such as xAI's acquisition rumors, the launch of Gemini 1.5 Pro by Google, Black Forest Labs' announcements, GitHub Models launch, controversies around Together AI's evaluation methods, and recent innovations and advancements in the AI industry.

LlamaIndex AI Discussions

Async functionality now in BedrockConverse: Async methods for BedrockConverse LLM have been implemented, resolving issues #10714 and #14004. This contribution enhanced user experience.

LongRAG paper simplifies long-context LLMs: The LongRAG paper proposes indexing and retrieving larger document chunks to better utilize long-context LLMs. This approach aims to ease the retriever’s tasks, enhancing the RAG process.

@llama_index introduces workflows: Workflows enable event-driven multi-agent applications, allowing agents to subscribe to and emit events. This new approach offers a readable and Pythonic way to build complex orchestration.

Modular (Mojo 🔥) General Discussion

- Mojo lacking explicit thread support: Members discussed the lack of thread support in Mojo, with confirmation that it does not currently expose thread support to users. However, using fork() and getting threads through that method is tolerated in the compiled version.

- MAX and Mojo packaging changes: Version 0.9 of the modular CLI introduced changes to MAX and Mojo packaging, eliminating the need for authentication to download MAX and Mojo. Additionally, Mojo nightly packages were merged with MAX, and a new magic CLI was introduced for easier integration into the Conda ecosystem.

- Tier chart discussion confusion: A tier chart discussion caused confusion among members, with questions about its representation and the level of abstraction it reflected. Suggestions were made to simplify by replacing the entire iceberg with a fire emoji.

Issues and Solutions in Development

This section discusses various development challenges and solutions encountered by members in different projects: Installing max on Mac M1 Max and Mojo compatibility with Python, early stopping feature in Axolotl, proposed features like output mask field in SharedGPT, documentation needs for new chat templates, bug identification in preprocessing step, usage of serverless GPUs in Inferless, and suggestions for Gemma2 models training. It also highlights discussions on self-adapting AI agents from Microsoft Research, Agent Zero debut, and new advancements in LLMs with meta-rewarding. Members are also excited about potential integrations like DSPy with a symbolic learner and address issues with LiteLLM proxies for non-OpenAI models. The section also includes announcements for an official job board setup and bounties for tutorial blog posts in DSPy community.

Tinygrad, LAION, Torchtune, and MLOps @Chipro Discussions

This section covers discussions in various channels including topics like GPT-2 slowdown in Tinygrad, bounties for embeddings, code complexities, FLUX.1 model launch in LAION, activation functions experimentation, and code errors in LAION research. It also delves into model performance, debugging tips, and quantization APIs in Torchtune, as well as Data Phoenix's webinar on recommendation systems and an ELT workshop with dlt in MLOps @Chipro. Lastly, LangSmith credit accessibility issues are highlighted in the LLM Finetuning channel.

FAQ

Q: What is Gemma-2 2B in the context of AI models?

A: Gemma-2 2B is a new family of open-source AI models released by Google DeepMind, featuring a 2 billion parameter model that has achieved remarkable performance surpassing larger models like GPT-3.5-Turbo-0613.

Q: What are some key features of Google's Gemma AI lineup expansion?

A: Google's Gemma AI lineup expansion includes products like Gemma 2 2B, ShieldGemma, and Gemma Scope. Gemma-2 2B is trained on 2 trillion tokens with 4bit quantized versions available, and it supports Flash Attention v2 for 2x faster finetuning.

Q: What tool did Google release for interpreting Gemma 2 and 9b models, and what are its aims?

A: Google released a sparse auto-encoder tool for interpreting Gemma 2 and 9b models, aiming to enhance interpretability and encourage transparency in AI development. This tool enables visualization of layer activations for each token, aiding in research and fine-tuning.

Q: What recent advancements were made by Black Forest Labs in the AI field?

A: Black Forest Labs launched the groundbreaking text-to-image model FLUX.1, which includes a robust 12B parameter version. This model has shown significant improvements in image quality and rendering, particularly in the detailed representation of hands and fingers.

Q: What significant development did LangChain introduce with LangGraph Studio?

A: LangChain introduced LangGraph Studio, an agent-focused IDE designed to streamline the creation and debugging of agentic applications. This tool marries LangSmith technology, enhancing efficiency and teamwork for developers working on large language models.

Get your own AI Agent Today

Thousands of businesses worldwide are using Chaindesk Generative

AI platform.

Don't get left behind - start building your

own custom AI chatbot now!