[AINews] Reflection 70B, by Matt from IT Department • ButtondownTwitterTwitter

Chapters

AI Twitter Recap

AI Discord Recap

Discord Community Updates

Views and Memory Challenges

Discussions on Model Training and Performance Analysis

Unsloth AI Interactions

Exploring AI Code Editors and Tools for Code Automation

OpenInterpreter General Discussions

Discussion on Beta Testing, App Performance, and App Expansion

Job Openings at Liquid AI

Perplexity AI Updates

LangChain AI

Gorilla LLM (Berkeley Function Calling) & Alignment Lab AI Discussion

AI Twitter Recap

AI Twitter Recap

- The recap includes information about LLM Training & Evaluation and Multi-Modal Models:

AI Discord Recap

-

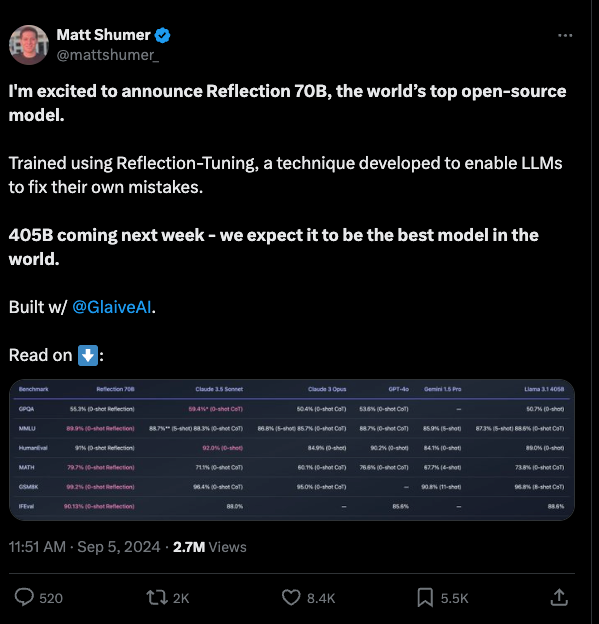

Reflection 70B Makes Waves: Announced as the world's top open-source model, Reflection 70B utilizes a new Reflection-Tuning technique that enables self-correction of reasoning mistakes.

- Initial excitement was followed by mixed results on benchmarks like BigCodeBench-Hard, sparking debates on evaluation methods and synthetic training data impact.

-

DeepSeek V2.5 Enters the Arena: Combining DeepSeek-V2-0628 and DeepSeek-Coder-V2-0724 strengths to enhance writing, instruction-following, and human preference alignment.

- Community interest in comparing DeepSeek V2.5's performance for coding tasks against recent models like Reflection 70B showcases rapid field advancements.

-

Speculative Decoding Breakthrough: Together AI achieved up to 2x improvement in latency and throughput for long context inputs with speculative decoding, challenging previous assumptions.

- Potential reduction in GPU hours and costs for AI solution deployment.

-

AdEMAMix Optimizer Enhances Gradient Handling: Proposed optimizer AdEMAMix using a mixture of two Exponential Moving Averages for better past gradient handling than single EMA methods.

- Outperforms single EMA methods in language modeling and image classification tasks, promising efficient training for various AI applications.

-

llama-deploy Streamlines Microservices: Launched to facilitate microservices deployment based on LlamaIndex Workflows, marking a significant evolution in agentic system deployment.

- An open-source example demonstrates full-stack capabilities by building an agentic chatbot system using llama-deploy with the @getreflex front-end framework.

-

SmileyLlama: AI Molecule Designer: Introduced to design molecules based on specified properties, showcasing Axolotl's adaptation of existing Chemical Language Model techniques for specialized tasks.

- Pushes boundaries of AI applications in chemistry.

Discord Community Updates

The Discord community updates include a variety of discussions and insights from different AI-related channels. Some highlights include celebrations for Unsloth AI's Y Combinator approval, challenges faced by LM Studio with hardware compatibility, and Reflection 70B model struggles on benchmarks. Nous Research AI saw discussions on API usability and challenges with quantization techniques. OpenAI Discord shared feedback on tools and giveaways. Latent Space discussions ranged from AI code editors to text-to-music models. Eleuther Discord featured talks on academic roles and new optimizer techniques. Modular Mojo covered topics like async functions and MLIR integration. CUDA MODE shared insights on model launches and NVIDIA's education initiative. Interconnects discussed benchmark challenges and synthetic data concerns. Perplexity AI Discord covered tech industry entry tips and comparisons with other platforms. And tinygrad Discord explored bounties, pricing changes, and operation clarifications.

Views and Memory Challenges

Members expressed ongoing confusion regarding the realization of views in the _recurse_lb function, questioning optimization and utilization balance. This reflection underscores the need for clarity on foundational tensor view concepts, inviting community input to refine understanding.

Discussions on Model Training and Performance Analysis

The section covers various discussions and insights shared in the HuggingFace general channel related to model training, performance analysis, pre-training, data handling, and code generation evaluations. Contributors discussed the benefits of selective fine-tuning for language models to achieve improved performance without extensive retraining. They also delved into topics such as challenges in model training setup, insights on pre-training and data quality, generation scripts, model testing, and reflections on model evaluation metrics. Additionally, links to related resources and papers were shared for further exploration.

Unsloth AI Interactions

Members engaged in various discussions about Unsloth AI, spanning from congratulating the team on Y Combinator backing to exploring hardware compatibility issues. Conversations included recommendations for models in synthetic data generation, concerns about reflection model performance, and challenges faced by Mac users. The section also touched on off-topic conversations about the evolution of Unsloth, emoji communication, and app promotion. Links mentioned in the interactions provided additional resources for further exploration.

Exploring AI Code Editors and Tools for Code Automation

Members of the Latent Space AI-in-Action Club discussed various AI code editors and tools for code automation. Some of the tools mentioned include Melty and PearAI App. The conversation also covered topics like handling errors in engineering, collaboration with AI, and fine-tuning models.

OpenInterpreter General Discussions

Members of the OpenInterpreter community celebrated the platform's birthday, expressing excitement about its potential in AI-human interaction. They discussed the experimental nature of the skills functionality in OI and inquired about the persistence of skills across sessions. Feedback on the 01 app performance and availability of the Fulcra app were also topics of discussion. Additionally, beta testing for OI was mentioned, indicating ongoing development and potential enhancements for users.

Discussion on Beta Testing, App Performance, and App Expansion

Users provided feedback on various apps and initiatives, including the 01 app's impressive performance in searching and playing audio files efficiently. The Fulcra app expanded to new regions, responding to user requests. Community members showed interest in beta testing for the Open Interpreter, reflecting an engaged user base. Links related to these discussions were also shared.

Job Openings at Liquid AI

Beginners Explore Image Convolution Optimizations:

- A member shared their experimentation with optimization techniques for improving image convolution, highlighting constant memory use and unexpected register behavior. Local memory usage reduced the constant load, challenging the member's understanding of memory access patterns.

Control Divergence vs Arithmetic Discussions:

- The community analyzed the performance implications between control divergence in CUDA, with one member favoring option 1 due to compiler optimizations and fewer global memory accesses. Conversely, another pointed out that option 2 struggles with automatic coalescence, complicating its efficiency.

Exploring Google Triton for Training:

- A member expressed their excitement about the Google Triton group and a YouTube lecture on efficient Triton kernels for LLM training. They plan to delve into tutorials and contribute to the community in the forthcoming weeks.

Perplexity AI Updates

Perplexity AI has announced several updates, including Sutskever's SSI securing a $1 billion funding, Volkswagen integrating ChatGPT into its systems, unveiling of biohybrid mushroom robots, announcement of NFL 2024 season kickoff, and exploration of vehicle-to-everything tech. Additionally, there are job openings in the Web3 innovation team and discussions on memory usage in pplx-api and Gemma 2 model integration in Azure within the Discord channels.

LangChain AI

A member is facing challenges deploying their ReAct agent on GCP using FastAPI since the local SQLite database disappears on redeploys. They are seeking alternatives, specifically for Postgres or MySQL implementation as a replacement. The discussion also includes clarification on the usage of callbacks in LangChain and an inquiry on using Cerebras in conjunction with LangChain. Additionally, there is an exploration of decoding streams from .astream_events() and its challenges.

Gorilla LLM (Berkeley Function Calling) & Alignment Lab AI Discussion

The Gorilla LLM (Berkeley Function Calling) discussion involves exploration of XLAM's system prompts and design choices, as well as contributing to the Gorilla leaderboard through testing API servers and adding new models. On the other hand, the Alignment Lab AI general discussion briefly includes a greeting message.

FAQ

Q: What is LLM Training & Evaluation?

A: LLM Training & Evaluation refers to the process of training and evaluating Large Language Models, such as those used in AI applications, for tasks like natural language processing.

Q: What is the Reflection 70B model and its unique feature?

A: The Reflection 70B model is an open-source model known for its Reflection-Tuning technique, which enables self-correction of reasoning mistakes. It has been recognized as the world's top open-source model.

Q: What is speculative decoding and its benefits?

A: Speculative decoding is a technique that has improved latency and throughput for AI systems dealing with long context inputs. This innovation challenges previous assumptions and could potentially reduce GPU hours and deployment costs.

Q: How does the AdEMAMix optimizer improve gradient handling?

A: The AdEMAMix optimizer uses a mixture of two Exponential Moving Averages for better past gradient handling compared to single EMA methods. It has shown promising efficiency in training for various AI applications like language modeling and image classification.

Q: What is llama-deploy and its significance in microservices deployment?

A: llama-deploy is a tool launched to streamline microservices deployment based on LlamaIndex Workflows. It represents a significant evolution in agentic system deployment and demonstrates full-stack capabilities with examples like building agentic chatbot systems.

Q: What is SmileyLlama and its purpose in AI applications?

A: SmileyLlama is an AI Molecule Designer that utilizes Axolotl's adaptation of existing Chemical Language Model techniques to design molecules based on specified properties. This showcases the expansion of AI applications in chemistry.

Q: What are some of the discussions and insights shared in the HuggingFace general channel?

A: Members of the HuggingFace general channel discussed topics related to model training, performance analysis, pre-training, data handling, and code generation evaluation. They also explored selective fine-tuning for language models and challenges in model training setup.

Q: What were the updates announced by Perplexity AI, and what were the discussions surrounding them?

A: Perplexity AI announced updates like Sutskever's SSI securing $1 billion funding and Volkswagen integrating ChatGPT into its systems. Additionally, members discussed job openings, memory usage in pplx-api, and Gemma 2 model integration in Azure within the Discord channels.

Q: What are some of the challenges discussed regarding deployment in the AI community?

A: Challenges discussed include deploying the ReAct agent on GCP using FastAPI, local database persistence issues, and exploring alternatives like Postgres or MySQL implementation. There were also inquiries about using Cerebras and decoding streams from .astream_events().

Q: What were the key topics explored in the discussions regarding Google Triton for training?

A: Members expressed excitement about the Google Triton group and efficient Triton kernels for LLM training. They planned to delve into tutorials and contribute to the community in the future.

Get your own AI Agent Today

Thousands of businesses worldwide are using Chaindesk Generative

AI platform.

Don't get left behind - start building your

own custom AI chatbot now!