[AINews] o1 destroys Lmsys Arena, Qwen 2.5, Kyutai Moshi release

Chapters

Twitter and Reddit AI Recaps

Quantization and New AI Models

Integrating HuggingFace and Their New API Enhancements

Clarifying SIMD Data Types

Discussions on AI Models and Automations

AI Venture Updates and Reasoning Dataset

Exploring Training Compute for LLMs

AI Model Discussions and Updates

Discussing Latest AI Models and Tools

DSPy, Elixir Session, User Requests, and Links

Twitter and Reddit AI Recaps

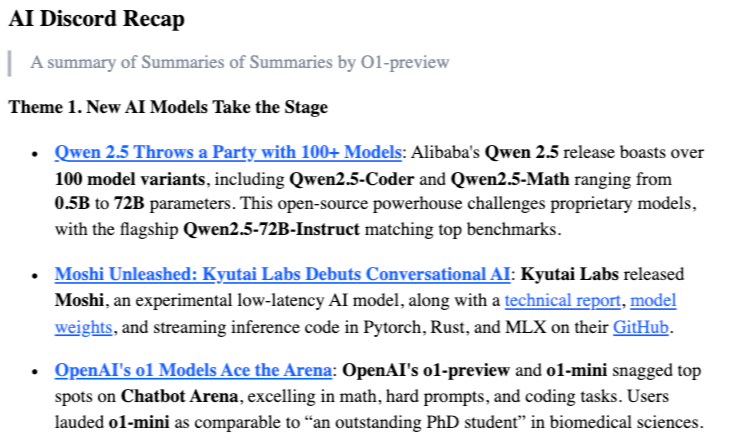

This section provides a comprehensive recap of the latest updates and releases in the AI community as shared on Twitter and Reddit. Starting with notable model updates like OpenAI's o1 models and Mistral AI's Pixtral, the recaps cover various AI development tools, research findings, educational resources, and AI applications. From advancements in energy-efficient CPU backend integration to new LLM books and innovative AI product commercials, the recaps offer insights into the evolving landscape of artificial intelligence.

Quantization and New AI Models

Users showed excitement about the potential of BitNet, with one humorous reference to 'THE ULTIMATE QUANTIZATION'. The Qwen2.5-72B-Instruct model performed strongly on the LMSys Chatbot Arena, excelling in coding and math tasks, though it applies strict content filtering. Mistral-Small-Instruct-2409, a new 22B-parameter model from Mistral AI, was released with enhanced instruction-following capabilities. The AI community discussed advances in Vision Language Models (VLMs), including new benchmarks and potential applications. OpenAI's o1 model and Gemma 2 faced challenges with text-based chain-of-thought reasoning. The community also shared insights on model advancements, multimodal learning, and AI applications across various Discord channels.

Integrating HuggingFace and Their New API Enhancements

- HuggingFace has unveiled new API documentation that includes improved clarity on rate limits, a dedicated PRO section, and enhanced code examples. User feedback has been directly applied to enhance usability, making AI deployment smoother for developers.

- TRL v0.10 simplifies fine-tuning for vision-language models down to two lines of code, highlighting the increasing connectivity of multimodal AI capabilities.

- Nvidia has launched the Mini-4B model that boasts remarkable performance but requires compatible Nvidia drivers. Users are encouraged to register it as a Hugging Face agent to leverage its full functionality.

- An open-source Biometric Template Protection (BTP) implementation has been shared for authentication without server data access, aiming to introduce newcomers to secure biometric systems.

- Community members are looking for a Space or model that converts images into high-quality cartoons and have asked for recommendations.

Clarifying SIMD Data Types

The conversation emphasized the need to understand SIMD data types for smooth conversions, encouraging familiarization with DType options. Members noted that this knowledge could streamline future queries around data handling.

Discussions on AI Models and Automations

Discussions in this section revolve around various AI models, automation methods, and integration of new features. Users share their experiences and insights related to experimental coding methods, tool functionalities, and AI model updates. The community explores topics such as budgeting for API expenses, automation in coding tasks, and the integration of systems like RAG and Aider. Additionally, discussions cover curiosity about system integration, user engagement, and the comparison of specific AI models. Links to related tools, platforms, and releases are also shared and discussed.

AI Venture Updates and Reasoning Dataset

This section highlights updates on AI ventures and a new reasoning dataset derived from GSM8K. Users interested in the creative AI venture are encouraged to reach out by direct message. The dataset focuses on logical, step-by-step reasoning and is intended to improve mathematical problem-solving in models and to benchmark their performance on deductive reasoning tasks. Links related to these updates are provided for further exploration.

Exploring Training Compute for LLMs

- Compression of MLRA Keys with Extra Matrix: Members discussed the potential for compressing MLRA keys and values using an additional compression matrix post-projection. Questions arose about the lack of details in the MLRA experimental setup, including specifics on rank matrices.

- Introducing Diagram of Thought Framework: A framework called Diagram of Thought (DoT) was introduced, which models iterative reasoning in LLMs using a directed acyclic graph (DAG) structure, allowing for complex reasoning pathways. This approach aims to improve logical consistency and reasoning capabilities over traditional linear methods.

- Investigation into Low-Precision Training: Experiments using very-low-precision training showed that ternary weights require 2.1x more parameters to match full precision performance, while septernary weights show improved performance per parameter. Members pondered the availability of studies on performance vs. bit-width figures during training quantization.

- Playground v3 Model Achieves SoTA Performance: Playground v3 (PGv3) was released, demonstrating state-of-the-art performance in text-to-image generation and introducing a new benchmark for evaluating detailed image captioning. It fully integrates LLMs, diverging from traditional models that rely on pre-trained language encoders.

- Challenges in Evaluating LLM Outputs: There was a discussion on developing systematic evaluation methods for LLM outputs, proposing the use of perplexity on human-generated responses as a potential metric. Participants highlighted that numerous existing papers cover similar challenges, emphasizing the importance of not overly narrowing evaluation criteria.
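To put the low-precision result above in perspective, here is a rough storage calculation in Python. It is a sketch under stated assumptions, not a figure from the discussion: it assumes ideal packing (a weight with k levels costs log2(k) bits) and a 16-bit full-precision baseline. The 2.1x parameter multiplier is the only number taken from the recap; the function names are hypothetical.

```python
import math


def bits_per_weight(levels: int) -> float:
    """Information content of one weight with `levels` distinct values (ideal packing)."""
    return math.log2(levels)


def relative_storage(levels: int, param_multiplier: float, baseline_bits: float = 16.0) -> float:
    """Storage of the low-precision model relative to the full-precision baseline.

    `param_multiplier` is how many times more parameters the low-precision model
    needs to match the baseline; `baseline_bits` is an assumed 16-bit format.
    """
    return param_multiplier * bits_per_weight(levels) / baseline_bits


# Ternary weights (3 levels) with the 2.1x parameter multiplier quoted above.
ternary_ratio = relative_storage(levels=3, param_multiplier=2.1)
print(f"ternary model size relative to 16-bit baseline: {ternary_ratio:.3f}")
```

Under these assumptions the ternary model still needs only about a fifth of the baseline's storage, despite using 2.1x as many parameters. The recap gives no multiplier for septernary (7-level) weights, so none is computed here.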
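The perplexity metric proposed in the last item can be sketched in a few lines of Python. This is a minimal illustration, assuming you already have the natural-log probability a model assigned to each token of a human-written response; the sample values are made up, and how you obtain the log-probabilities depends on your model API.

```python
import math
from typing import Sequence


def perplexity(token_logprobs: Sequence[float]) -> float:
    """Perplexity = exp of the mean negative log-probability per token."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    mean_nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(mean_nll)


# Hypothetical per-token probabilities a model assigned to a human-written reply.
human_reply_logprobs = [math.log(p) for p in (0.5, 0.25, 0.5, 0.25)]
print(f"perplexity: {perplexity(human_reply_logprobs):.2f}")  # about 2.83
```

Lower perplexity means the model finds the human response less surprising. As the discussion cautions, a single number like this should not be the only evaluation criterion.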

AI Model Discussions and Updates

This section summarizes AI model discussions and updates across different Discord channels. Topics include self-promotion rules, GPT Store creations, language barriers in communication, GPT linking policies, the job application process, CoT reflections, o1's reward model, cost efficiency in local experiments, CoT training strategies, the Qwen 2.5 release, Mistral's free tier, Kyutai's Moshi model release, Mercor's funding, the Torchtune 0.3 release, FSDP2 integration, and more. The interactions range from AI model performance to technical announcements and community discussions.

Discussing Latest AI Models and Tools

This section covers discussions on recent advancements in AI models and tools. Topics include the impact of the Transformer architecture on AI, attention visualization with BertViz, and the limitations of GDM's large language models. The section also touches on the development of new models like Moshi, RAG applications with AWS, and infrastructure setup using AWS CDK. It also mentions the challenges developers face in integrating LangServe with a React frontend and a Python backend. Overall, the conversations reflect continued exploration and development in the AI field.

DSPy, Elixir Session, User Requests, and Links

In this section, users expressed eagerness to engage with the content, especially material on the costs of AI systems. A new LanceDB integration for DSPy was announced, with an invitation for contributors to collaborate. A live coding session on Elixir templates and projects took place in the lounge, with a Discord link shared for participation. A user asked the community for a working o1 example using typed predictors. Several links were also shared, including a podcast episode on the cost of running AI systems and a GitHub pull request for the LanceDB integration.

FAQ

Q: What are some notable AI models and updates discussed in the newsletter?

A: Notable AI models and updates discussed in the newsletter include OpenAI's o1 models, Mistral AI's Pixtral, BitNet, Mistral-Small-Instruct-2409, Qwen2.5-72B-Instruct, Gemma 2, Vision Language Models (VLMs), TRL v0.10, the Mini-4B model by Nvidia, Playground v3 (PGv3), and many others.

Q: What advancements were highlighted in Vision Language Models (VLMs) according to the newsletter?

A: According to the newsletter, advancements in Vision Language Models (VLMs) included new benchmarks and potential applications. Separately, the newsletter notes that Gemma 2 and OpenAI's o1 model faced challenges with text-based chain-of-thought reasoning.

Q: What new tools or resources were introduced in the AI community as mentioned in the newsletter?

A: The newsletter mentioned new API documentation from HuggingFace, TRL v0.10 for fine-tuning vision-language models, an open-source Biometric Template Protection (BTP) implementation, and the release of the Playground v3 (PGv3) model, which achieves state-of-the-art performance in text-to-image generation.

Q: What discussions took place around compression of MLRA keys and the Diagram of Thought Framework in the newsletter?

A: Members discussed the potential for compressing MLRA keys and values using an additional compression matrix post-projection. Additionally, a framework called Diagram of Thought (DoT) was introduced to model iterative reasoning in LLMs using a directed acyclic graph structure.

Q: What challenges were highlighted in evaluating Large Language Model (LLM) outputs in the newsletter?

A: The newsletter discussed challenges in evaluating LLM outputs and a proposal to use perplexity on human-generated responses as a potential metric. It emphasized the importance of developing systematic evaluation methods and not overly narrowing evaluation criteria.

Q: What topics were covered in the AI community discussions on Discord as outlined in the newsletter?

A: The newsletter mentioned discussions on self-promotion rules, GPT Store creations, language barriers in communication, GPT linking policies, job application processes, CoT reflections, o1's reward model, cost efficiency in local experiments, the Qwen 2.5 release, Mistral's free tier, Kyutai's Moshi model release, the Torchtune 0.3 release, FSDP2 integration, and more.
