[AINews] not much happened today • Buttondown

Chapters

AI Twitter and Reddit Recap

AI Discord Themes and Discussions

Discord Channels Discussions

Learning through Projects and Model Challenges

Engaging Model Creators and Questions on HuggingFace Hub

LLM Compression and BitNet Training

AI Conversations and Innovations

LlamaIndex: General Chat

LM Studio Discussions

Discussion on Various Frameworks and Challenges

Discussions on Bootstrapping, MathPrompt, and TypedPredictors

AI Twitter and Reddit Recap

AI Twitter Recap

-

OpenAI's o1 Models: There is a discussion about OpenAI's o1 models causing confusion similar to early responses to GPT-3 and ChatGPT.

-

AI Model Developments: Comparison of DeepSeek 2.5 to GPT-4 and a highlight of CogVideoX's capabilities.

-

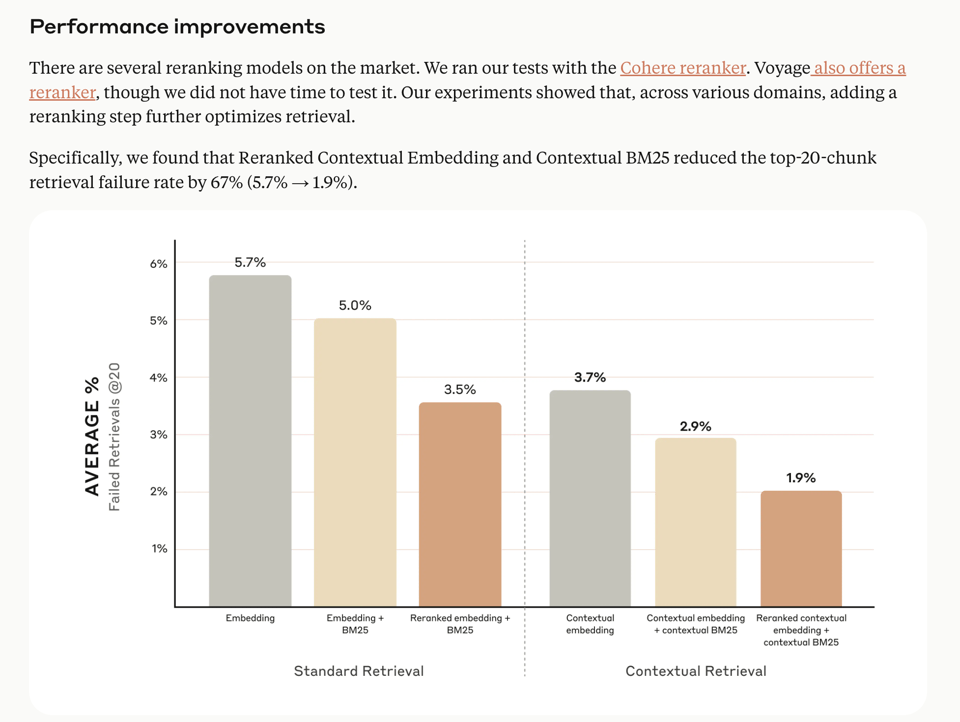

AI Research Insights: Discussion on LLMs re-reading questions to boost reasoning and Contextual Retrieval technique.

-

AI Industry and Applications: Insights on the impact of AI on enterprises and disruptions in traditional ML businesses.

-

AI Tools and Platforms: Announcements about LangGraph Templates and LlamaParse Premium.

-

AI in Production: Advice on using LLM evals to improve AI products and challenges of caching in LLM applications.

-

AI Ethics and Regulation: Discussions on scientists' political leanings and the ARC-AGI benchmark.

-

Memes and Humor: Showcasing humorous AI-generated content.

AI Reddit Recap

/r/LocalLlama Recap

- Theme 1: Meta's Llama 3 Multimodal: Meta's next big AI release is teased to have multimodal capabilities for better AI reasoning.

- Theme 2: Qwen2.5 32B: Impressive GGUF quantization evaluation results, with strong performance in the computer science category of MMLU-Pro.

AI Discord Themes and Discussions

This section covers various discussions and themes from different AI-related Discord channels. From Aider struggling with API interactions to debates over model performance and fine-tuning techniques, the summaries highlight ongoing conversations within the AI community. Additionally, insights into AGI progress, quantization trade-offs, and new multimodal models are shared, showcasing the diverse range of topics discussed in these Discord channels.

Discord Channels Discussions

This section delves into various discussions and feedback shared on Discord channels related to different AI models and platforms. Users express confusion and provide feedback on models like Perplexity Pro and o1 Mini, highlighting areas for improvement. Issues such as poor performance with certain models and inquiries about beta features are also raised. Additionally, discussions cover topics like AI consciousness, access to new features, and optimizing workflows. Each Discord channel contributes to a vibrant exchange of ideas and suggestions aimed at enhancing AI technology and user experiences.

Learning through Projects and Model Challenges

One member emphasized the value of learning through projects, noting that application is the best way to learn, and plans to explore further after completing their capstone project. Members reported that Rerank Multilingual v3 struggles with English queries, which degrades RAG results and calls for optimization. One suggestion was to test different models with a curl command to compare performance. There was also interest in newsletters: community members were drawn to the classify newsletter and asked for more continuous updates.

In the LAION Discord, members looked for fast Whisper solutions, highlighted Whisper-TPU as a fast alternative, and explored uses of the Transfusion architecture. They also shared challenges with diffusion and AR training stability and asked about Qwen-Audio training instability.

In the Interconnects Discord, discussion covered disappointment with Qwen 2.4 compared to o1-mini and concerns about the Llama multimodal launch in the EU amid regulatory uncertainty. Members also discussed the perceived anti-tech stance in the EU and called for clearer regulations. OpenAI's extended video insights were shared, showcasing advancements in model performance compared to human capabilities.

In the LangChain AI Discord, users reported chunky output issues with LangChain v2.0, compared Ell with LangChain, sought clarification on LangGraph support, invited beta testers for a new agent platform, and asked for documentation guidance on OpenAI assistants.

Lastly, the OpenAccess AI Collective Discord discussed the launch of the Moshi model for speech-text conversion, the achievements of GRIN MoE with minimal parameters, and concerns over the Mistral Small instruction-only release.

Engaging Model Creators and Questions on HuggingFace Hub

Engaging model creators on HF Hub

- Users are advised to pose questions on the Community tab of the HF Hub as model creators likely monitor that space for inquiries. This advice may facilitate more effective communication between users and model creators.

Exploring GPT2SP for Agile Estimation

- A member is working on their Master's thesis, aiming to improve story point estimation for Agile methodologies by experimenting with advanced models like GPT-3 and GPT-4, building on the insights from the GPT2SP paper. They're seeking recommendations for the most suitable model and insights from anyone with similar experience.

Curious Patterns in Language Sequences

- Another member observed unconventional sequences like 'll' for I'll and 'yes!do it', mentioning issues with missing spaces in words. They expressed concern about how such cases are treated in a ST embedding pipeline and the lack of existing embedding models that accommodate them.

LLM Compression and BitNet Training

Recent discussions in the Liger-Kernel channel examined data-free compression methods for Large Language Models (LLMs), which reportedly reach 50-60% sparsity and reduce memory footprint while maintaining metrics such as perplexity. The Knowledge-Intensive Compressed LLM BenchmarK (LLM-KICK) was introduced as a redefined evaluation protocol aligned with dense model counterparts. Members also raised concerns about product quantization methods and how their compression ratios compare to bitnets, and debated the effectiveness of 2-bit and 3-bit models. Current quantization methods were criticized as inefficient, with slow processing times noted on an H100 for Llama-2-70B models. Progress on BitNet training was reported, including int8 mixed-precision training and changes to the quantization method that may bring improvements. Finally, members discussed using A8W2 from gemlite to cut memory use, weighing the memory savings against processing speed.
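To put the bit-width debate in rough numbers, here is a back-of-the-envelope sketch of weight storage at different precisions. It is only arithmetic, not a measurement from the discussion: the round figure of 70 billion parameters and the helper names `weight_gigabytes` and `compression_ratio` are assumptions made for illustration, and the estimate ignores quantization scales and zero points, activations, and the KV cache.

```python
# Back-of-the-envelope weight memory at different bit widths.
# Assumption: a nominal 70e9 parameters; real checkpoints differ slightly.
# Ignored on purpose: scales/zero-points, activations, KV cache.

NOMINAL_PARAMS = 70_000_000_000  # hypothetical round figure for a "70B" model


def weight_gigabytes(num_params: int, bits_per_weight: float) -> float:
    """Return weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


def compression_ratio(baseline_bits: float, bits_per_weight: float) -> float:
    """Return how many times smaller the weights are than the baseline."""
    return baseline_bits / bits_per_weight


if __name__ == "__main__":
    for bits in (16, 8, 3, 2):
        size = weight_gigabytes(NOMINAL_PARAMS, bits)
        ratio = compression_ratio(16, bits)
        print(f"{bits:>2}-bit weights: {size:7.2f} GB ({ratio:.2f}x vs 16-bit)")
```

Under these assumptions, going from 3-bit to 2-bit weights saves less than 10 GB on a model of this size, which may help explain why the trade-off against accuracy and processing speed is contested.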

AI Conversations and Innovations

The discussions in this section cover various topics related to AI, including new tools, model comparisons, and practical applications. Users explore tools like video2x for upscaling videos and create AI chatbots for music production. Models like Hermes-3 and Flux are analyzed for their performance and capabilities. Members discuss the use of RAG models in specific domains like maritime law and rule-based applications. The section also includes philosophical debates on AI consciousness and the evolution of AI reasoning frameworks like Iteration of Thought. Additionally, new research papers and intriguing links are shared, highlighting advancements in the field of artificial intelligence.

LlamaIndex: General Chat

This section includes discussions related to LlamaIndex in the general chat, covering topics such as issues with Pinecone IDs, inconsistencies in Pandas Query Engine, challenges with Graph RAG queries, compatibility errors with Gemini LLM, and the integration of contextual retrieval and hybrid retrieval methods in LlamaIndex. Users share their experiences, seek solutions for technical issues, and explore ways to enhance the functionality of LlamaIndex for diverse applications.

LM Studio Discussions

This section provides insights into various discussions happening in the LM Studio community. Users discuss topics such as resolving connection issues by switching to IPv4, sharing prompts for effective AI training, debating the effectiveness of power limiting versus undervolting strategies on GPUs, exploring model initialization techniques for large language models, and more. Additionally, users express interest in API integrations, seek feedback on coding assistance tools, and participate in community meetings to enhance collaboration and knowledge-sharing.

Discussion on Various Frameworks and Challenges

This section covers challenges and feature requests that members raised while working with different frameworks. These range from concerns over packed structs support in Mojo to requests for features such as copyinit in the Set implementation and syntax for generic structs implementing multiple traits. There are also discussions on Torchtune, OpenInterpreter, Tinygrad, LAION, Cohere, Interconnects, LangChain AI, OpenAccess AI Collective, and more, highlighting user experiences, challenges faced, and progress made in the AI and tech development space.

Discussions on Bootstrapping, MathPrompt, and TypedPredictors

In this section, community members discuss three topics. First, the purpose of bootstrapping in DSPy is explained, with emphasis on generating intermediate examples within a pipeline. Second, MathPrompt is introduced with a reference to a research paper, highlighting its potential for enhancing mathematical reasoning. Lastly, members share a trick for handling TypedPredictors: mocking the JSON parsing step so that outputs can be pre-processed, which includes removing unnecessary text and fixing invalid escape sequences.
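The pre-processing idea in the last point can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the code shared in the channel and not DSPy's API: the helper names `extract_json_object`, `fix_invalid_escapes`, and `clean_and_parse` are hypothetical, and the sketch assumes the model output contains a single JSON object surrounded by stray prose.

```python
import json
import re

# A backslash followed by any single character.
_ESCAPE_PAIR = re.compile(r"\\(.)", re.DOTALL)
# Characters that may legally follow a backslash inside a JSON string.
_VALID_ESCAPE_CHARS = set('"\\/bfnrtu')


def extract_json_object(text: str) -> str:
    """Drop surrounding prose: keep the first '{' through the last '}'."""
    start = text.find("{")
    end = text.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model output")
    return text[start:end + 1]


def fix_invalid_escapes(text: str) -> str:
    """Double any backslash that does not start a valid JSON escape."""
    def repair(match: re.Match) -> str:
        if match.group(1) in _VALID_ESCAPE_CHARS:
            return match.group(0)  # already valid, leave untouched
        return "\\\\" + match.group(1)  # escape the stray backslash
    return _ESCAPE_PAIR.sub(repair, text)


def clean_and_parse(raw: str) -> dict:
    """Hypothetical stand-in for a patched JSON parsing step."""
    return json.loads(fix_invalid_escapes(extract_json_object(raw)))


if __name__ == "__main__":
    raw = r'Sure! Here it is: {"pattern": "\d+ items"} Hope that helps.'
    print(clean_and_parse(raw))
```

The sketch is deliberately simple: it does not validate the four hex digits after `\u`, and it would keep too much if the surrounding prose itself contained braces. In a real pipeline a function like `clean_and_parse` would be swapped in wherever the framework parses model output, which is what "mocking" the parser amounts to here.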

FAQ

Q: What are some recent developments in AI model technology?

A: Recent developments include a comparison of DeepSeek 2.5 with GPT-4 and a highlight of CogVideoX's capabilities.

Q: What are the key topics discussed across the AI Discord channels?

A: Key topics include discussions on model performance, AGI progress, quantization trade-offs, and new multimodal models.

Q: What insights were shared regarding AI tools and platforms in the newsletter?

A: Announcements covered LangGraph Templates and LlamaParse Premium. Separately, the AI in Production item gave advice on using LLM evals and noted the challenges of caching in LLM applications.

Q: How is AI impacting the industry according to the newsletter?

A: AI is affecting enterprises and disrupting traditional ML businesses.

Q: What are some key discussions related to AI ethics and regulation in the newsletter?

A: Discussions include scientists' political leanings and the ARC-AGI benchmark.
