[AINews] not much happened today • Buttondown

Chapters

AI Twitter and Reddit Recaps

Innovative AI Applications and Model Developments

Hermes 70B API launched on Hyperbolic

Innovations and Discussions in Various Discord Channels

Unsloth AI Community Collaboration

Stability.ai (Stable Diffusion) Highlights

GPU Mode Discussion

Exploration of int4_mm Wrapping for torch.compile

Claude's System Prompt Enhancements and Model Discussions

Cohere Projects

LED Matrix Communication and Framework Laptop 16 Discussion

Footer Links and Information

AI Twitter and Reddit Recaps

AI Twitter Recap

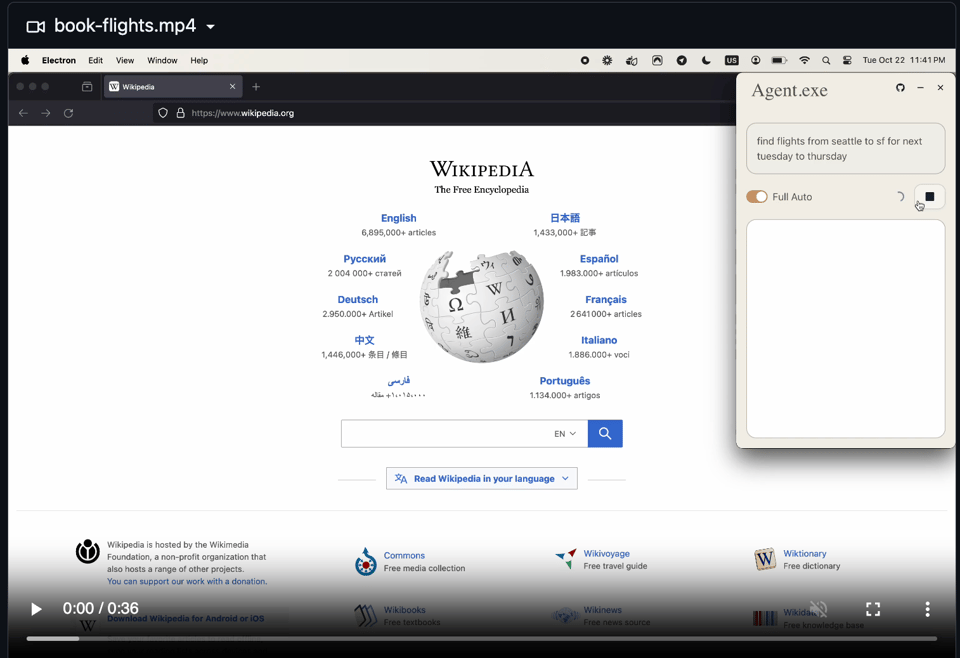

- Anthropic's Claude 3.5 release introduces a computer use capability, new models, and performance improvements. Other AI model releases and updates include Mochi 1, Stable Diffusion 3.5, Embed 3, and KerasHub.

- AI research highlights the Differential Transformer, attention layer removal findings, and RAGProbe. Industry collaborations feature Perplexity Pro, Timbaland with Suno AI, and Replit integration with Claude's computer use.

AI Reddit Recap

- /r/LocalLLaMA discusses major updates such as Stability AI's Stable Diffusion 3.5 release, which ships in several variants with improved capabilities. Users test the model and discuss its applications despite license restrictions. Anthropic's introduction of computer use, Claude 3.5 Sonnet, and Claude 3.5 Haiku is well received; computer use lets the AI interact with virtual machines and perform various tasks.

Innovative AI Applications and Model Developments

This section covers open-source AI model developments and replication efforts: progress on replicating OpenAI's o1 model, the introduction of Fast Apply (an open-source, fine-tuned Qwen2.5 Coder model), and a comparison tool for LLMs. The discussions include the safety implications of AI models, versioning confusion, and potential integrations with other tools. The section also covers the release of Transformers.js v3 with WebGPU support and new models, along with GPU hardware discussions speculating on the specs and pricing of NVIDIA's upcoming 5090 GPU. AI model releases and improvements from various companies include updated Claude 3.5 models, the Stable Diffusion 3.5 release, and the Mochi 1 video generation model. The AI Discord recaps, Reddit discussions on AI ethics, AI model comparisons, and GPU hardware, and research activities such as bug fixes and deepfake projects are also mentioned.

Hermes 70B API launched on Hyperbolic

The Hermes 70B API is now available on Hyperbolic, providing greater access to large language models for developers and businesses. This launch marks a significant step towards making powerful AI tools more accessible to everyone. Members expressed enthusiasm for the Forge project by Nous Research, anticipating its capabilities in leveraging advanced datasets. ZK Technology from OpenBlock's Universal Data Protocol was discussed for enhancing data provenance and interoperability in AI training. Discussions also touched on the potential risks of Claude's Computer Control capabilities for Robotic Process Automation (RPA), emphasizing the need for transparency and human oversight in AI-driven fact-checking to combat misinformation effectively.

Innovations and Discussions in Various Discord Channels

This section covers topics from several Discord channels. It includes insights on using .yaml extensions in TorchTune, multi-GPU testing queries, fine-tuning custom models with TorchTune, issues with linters and pre-commit hooks, and the resolution of CI chaos in a PR. The LangChain AI Discord section highlights opportunities for developers to participate in surveys and studies, engage with an AI-powered platform for funding, and learn about coding an AI bot for GeoGuessr. Further discussions cover the world's most advanced workflow system in the DSPy Discord, FAQs about DSPy, and synthetic data metrics and model alignment recipes in the LLM Agents Discord section. The LLM Finetuning Discord section presents tools such as LangSmith Prompt Hub, Kaggle solutions, and a new Discord bot for message scraping. New experimental Triton FA support is introduced in the OpenAccess AI Collective Discord, along with a debate on Mixtral vs. Llama 3.2 models. Lastly, the Gorilla LLM Discord section discusses challenges in model evaluation and the importance of model generation.

Unsloth AI Community Collaboration

A pull request was made to address issues with the Unsloth studio environment, specifically related to importing Unsloth. Another PR aimed to fix a bug in the Tokenizer. These collaborations reflect the community's active involvement in improving the capabilities and functionalities of Unsloth AI.

Stability.ai (Stable Diffusion) Highlights

Participants in the Stability.ai (Stable Diffusion) channel discussed Stable Diffusion 3.5 training and use, prompting techniques for anime art, image generation result quality, and model management and organization. They emphasized the need for finetuning and community training efforts, recommended using SD 3.5 with correct prompts for generating anime art, and shared experiences with tools such as ComfyUI and fp8 models to manage AI tasks efficiently.

GPU Mode Discussion

LLM Activations Quantization

A user debates the sensitivity of activations in LLMs to input variations and the potential impact of aggressive quantization on modeling performance.

- Concerns are raised about the trade-offs of precision in quantization and its effects on model training stability.
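The sensitivity concern can be illustrated with a minimal sketch of symmetric absmax quantization, one common scheme and not necessarily the one the channel had in mind. The activation values and the helper names `quantize_absmax`, `dequantize`, and `max_abs_error` are hypothetical, chosen only for illustration. The point is that a single outlier stretches the quantization scale, so at low bit widths the small activations collapse to zero.

```python
def quantize_absmax(values, bits=8):
    """Symmetric absmax quantization: map floats to signed integer codes."""
    qmax = 2 ** (bits - 1) - 1                      # 127 for int8, 7 for int4
    # Fall back to 1.0 so an all-zero input does not divide by zero.
    scale = (max(abs(v) for v in values) or 1.0) / qmax
    codes = [round(v / scale) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate float values."""
    return [c * scale for c in codes]


def max_abs_error(values, bits):
    """Largest round-trip error after quantizing and dequantizing."""
    codes, scale = quantize_absmax(values, bits)
    restored = dequantize(codes, scale)
    return max(abs(a - b) for a, b in zip(values, restored))


# Hypothetical activations: five small values, then the same with one outlier.
activations = [0.02, -0.15, 0.4, -0.33, 0.07]
with_outlier = activations + [12.0]

codes, scale = quantize_absmax(with_outlier, bits=4)
print(codes)  # [0, 0, 0, 0, 0, 7]: the outlier dominates the scale
print(max_abs_error(activations, 4), max_abs_error(with_outlier, 4))
```

Per-channel or per-group scales are a common way to limit this effect, since an outlier then only stretches the scale of its own group.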

Precision Concerns with bf16

A member expresses worries about the precision of bf16 compared to fp32, emphasizing issues like canceled updates after multiple gradient accumulations due to precision problems.

- The importance of precision in quantization affecting model training stability is highlighted.
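The canceled-update effect can be reproduced without a GPU by emulating the formats in plain Python. This is a sketch under stated assumptions: `to_bf16` emulates bfloat16 by rounding a float32 bit pattern to its top 16 bits (round to nearest even, with no NaN or infinity handling), and the starting value 1.0 and step 0.001 are hypothetical numbers chosen so the step is smaller than half the bf16 spacing near 1.0 (which is 2^-7).

```python
import struct


def to_fp32(x: float) -> float:
    """Round a Python float to the nearest float32 value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def to_bf16(x: float) -> float:
    """Emulate bfloat16: keep the top 16 bits of the float32 pattern,
    rounding to nearest even. NaN and infinity are not handled."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    bits += 0x7FFF + ((bits >> 16) & 1)   # rounding bias before truncation
    bits &= 0xFFFF0000                    # drop the low 16 mantissa bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def accumulate(step, n_steps, cast, start=1.0):
    """Add `step` to a running value `n_steps` times, rounding after each add."""
    total = cast(start)
    step = cast(step)
    for _ in range(n_steps):
        total = cast(total + step)
    return total


bf16_total = accumulate(0.001, 100, to_bf16)
fp32_total = accumulate(0.001, 100, to_fp32)
print(bf16_total)  # 1.0: every small update is rounded away
print(fp32_total)  # close to 1.1
```

This is why mixed-precision setups commonly keep accumulators or a master copy of the weights in fp32 while running the rest of the computation in bf16.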

Anyscale's Single Kernel Inference

A user shares news about Anyscale developing an inference engine enabling complete LLM inference using a single CUDA kernel, seeking input on the efficiency of this approach compared to traditional engines.

- The potential leap in efficiency is discussed and evaluated.

CUDA Stream Synchronization Inquiry

A user raises a question about the function cudaStreamSynchronize not being used for stream1 & stream2 before kernel launch in a specific CUDA code, indicating a need for clarification on CUDA synchronization techniques.

- The query underscores the significance of understanding kernel execution order.

Link mentioned: cuda-course/05_Writing_your_First_Kernels/05 Streams/01_stream_basics.cu at master · Infatoshi/cuda-course.

Exploration of int4_mm Wrapping for torch.compile

A member asked how int4_mm is wrapped for torch.compile support, suspecting it should use custom_op but finding that it uses @register_meta instead. The question "Is there any advantage of this approach vs. torch.library.custom_op?" was raised, along with debate about the role of torch._check for tensor size and dtype. Members noted that torch.library.custom_op serves as a high-level wrapper, while some cases might benefit from the low-level API. The question of why to use high-level APIs when direct options exist prompted a debate on whether such custom implementations are necessary.

Nightly Wheels Limitations for Non-Linux Platforms

A member pointed out that nightly wheels for platforms other than Linux lack .dev versions. "Interesting, mind opening an issue?" was suggested as a follow-up to the observation, indicating potential concerns for broader platform support.

Claude's System Prompt Enhancements and Model Discussions

- Claude's System Prompt Enhancements: The new Claude system prompt includes corrections for the 'misguided attention' issue, showcasing improved self-reflection abilities and refined responses to logical puzzles.

- Whisper Streaming Solutions: Discussions of offline, real-time Whisper-based translation frameworks such as whisper_streaming, and of the moonshine project for fast ASR on edge devices.

- Small OSS Models: Recommendations for Gemma 2 and Qwen 2.5 small OSS models and discussions on handling semi-complex JSON data.

- Llama Model Quantization: Challenges faced by users in finding Llama 3.2 quant versions and converting GGUF to safetensors using scripts for model optimization.

Cohere Projects

An Agentic Builder Day event is scheduled for November 23rd, hosted by OpenSesame and the Cohere team, inviting builders to showcase their skills in a mini AI agent hackathon. Participants can apply now for a chance to win prizes. The event aims to connect talented builders in the AI community to collaborate and compete using Cohere models, offering an opportunity for skill enhancement and networking.

LED Matrix Communication and Framework Laptop 16 Discussion

This section includes discussions about various topics related to LED Matrix communication and the Framework Laptop 16. Members shared their enthusiasm for joining stdlib discussions and sought help with serial communication in Mojo. There were conversations about C/C++ support in Mojo and the creation of a library for Framework Laptop 16 LED Matrix communication. Despite initial skepticism, there was openness to collaboration and improvement on projects.

Footer Links and Information

The footer section of the webpage includes links to the AI News newsletter and other social networks. Visitors can find AI news on Twitter and subscribe to the newsletter. The website is brought to you by Buttondown, offering an easy way to start and grow newsletters.

FAQ

Q: What are some of the AI model releases and updates mentioned in the article?

A: The article mentions releases and updates like Claude 3.5, Mochi 1, Stable Diffusion 3.5, Embed 3, and KerasHub.

Q: What industry collaborations are highlighted in the article?

A: Industry collaborations mentioned include Perplexity Pro, Timbaland with Suno AI, and Replit integration with Claude's computer use.

Q: What advancements are discussed in the Open Source AI Model Developments and Replication Efforts section?

A: Advancements discussed include replicating OpenAI's o1 model, the introduction of Fast Apply (an open-source, fine-tuned Qwen2.5 Coder model), and a comparison tool for LLMs.

Q: What is Hermes 70B API, and where is it available?

A: The Hermes 70B API is available on Hyperbolic, providing greater access to large language models for developers and businesses.

Q: What is the purpose of Anyscale's Single Kernel Inference engine?

A: Anyscale's Single Kernel Inference engine enables complete LLM inference using a single CUDA kernel, aiming for efficiency compared to traditional engines.

Q: What are some challenges faced by users in the Llama Model Quantization section?

A: Users face challenges in finding Llama 3.2 quant versions and converting GGUF to safetensors using scripts for model optimization.
