[AINews] Nemotron-4-340B: NVIDIA's new large open models, built on syndata, great for syndata • ButtondownTwitterTwitter

Chapters

NVIDIA's Nemotron-4-340B Model and Synthetic Data Pipeline

AI Discord Recap

Cohere Discord

Discord Community Highlights

Comparison to RK3588

Discussion of LM Studio Updates and Model Issues

HuggingFace ▷ #cool-finds

Perplexity AI General

LLM Finetuning (Hamel + Dan) - General Discussions

Eleuther Research Highlights

Interconnects (Nathan Lambert)

AI News Subscriptions and Social Media

NVIDIA's Nemotron-4-340B Model and Synthetic Data Pipeline

The section discusses NVIDIA's new Nemotron-4-340B model, an upgrade from Nemotron-4 15B, emphasizing its synthetic data generation process. The model relies heavily on synthetic data, with over 98% coming from a generated pipeline, showcasing its effectiveness. The section further details the alignment process, highlighting the use of human-annotated data and the success of the synthetic data in various prompts. Additionally, comparisons to other models like Mixtral and Llama 3 are mentioned, along with the release of a Reward Model version. Various Twitter recaps about AI models and architectures, such as Mamba-2-Hybrid and Samba, are also highlighted in the AI Twitter Recap subsection.

AI Discord Recap

The Discord recap covers various discussions and developments in the AI community. Discussions include processor comparisons, model performance, CUDA anomalies, and software compatibility. Community efforts include creating image bot tools, addressing anatomy accuracy in models, and improving GPU compatibility. The recap also highlights challenges and advancements in AI hardware, like wafer-scale chips and handling terabytes of data for machine learning tasks. Memes and humor are infused throughout the conversations, adding a lighter tone to the technical discussions.

Cohere Discord

Cohere Chat Interface Gains Kudos: Cohere's playground chat was commended for its user experience, particularly citing a citation feature that displays a modal with text and source links upon clicking inline citations.

Open Source Tools for Chat Interface Development: Developers are directed to the cohere-toolkit on GitHub as a resource for creating chat interfaces with citation capabilities, and the crafting-effective-prompts documentation for effective prompting.

Discord Community Highlights

This section highlights discussions and updates from various Discord communities related to AI and machine learning technologies. It covers a range of topics including troubleshooting RAG chain outputs, crafting JSON objects, and integrating LangChain with pgvector. Members engage in discussions around NVIDIA's Nemotron models, collaborative problem-solving, and the development of AI technologies. The section also showcases projects like Dream Machine animation, advancements in AI training tools, and PostgreSQL extensions. Community members delve into model performance, architecture limitations, and the balance between model size and efficiency in multilingual AI development.

Comparison to RK3588

Another member compared the performance of the MilkV Duo to the RK3588 ARM part, which boasts 6.0 TOPs NPU and various advanced features. They provided a link to the specifications of RK3588.

Discussion of LM Studio Updates and Model Issues

In this section, various discussions related to LM Studio updates, model issues, and community collaborations are highlighted. The topics include troubleshooting installation issues, GitHub PR fixes for Llama.cpp, debates on model performance, Python version requirements for Unsloth, and the importance of CUDA libraries for local model execution. Additionally, hardware discussions, model announcements, and beta releases chats are also covered, providing insights on RAM vs VRAM utilization, new model releases, and issues with specific models and versions.

HuggingFace ▷ #cool-finds

New GitHub playground for OpenAI:

- A member introduced their new project, the OpenAI playground clone. It's available on GitHub for contributions and experimentation.

Hyperbolic KG Embeddings paper shared:

- A paper on hyperbolic knowledge graph embeddings was shared, emphasizing that the approach 'combines hyperbolic reflections and rotations with attention to model complex relational patterns'. You can read more in the PDF on arXiv.

OpenVLA model highlighted:

- A paper about the OpenVLA, an Open-Source Vision-Language-Action Model, was shared. The full abstract and author details can be found in the arXiv publication.

Introducing QubiCSV for quantum research:

- A new platform called QubiCSV, aimed at 'qubit control storage and visualization' for collaborative quantum research, was introduced. The detailed abstract and experimental HTML view are available on arXiv.

Perplexity AI General

Perplexity Servers Down; Confusion Ensues:

- Users reported issues with Perplexity servers, facing repeated messages and endless loops, causing frustration. Lack of communication from Perplexity's team was highlighted.

File Upload Issues Identified:

- A broken file upload feature causing performance problems was identified, affecting users with a certain AB test config.

404 Errors on Generated Links:

- Concerns were raised over Perplexity generating incorrect or non-existent links, possibly due to the LLM making up URLs.

Android App Inconsistencies:

- Inconsistencies were noted with the Perplexity Android app, leading to periodic resending of requests.

Pro Subscription Concerns:

- Doubts were expressed about upgrading to Perplexity Pro due to ongoing issues and poor communication from support, with users questioning its value.

LLM Finetuning (Hamel + Dan) - General Discussions

In this section, various topics related to LLM Finetuning are discussed. Members share about breakthroughs in Lamini Memory Tuning, interest in training HiFi-GAN for XTTS, seeking help for LLM-based chapter teaching, and improved training for binary neural networks. The discussions cover innovative methods, training experiences, teaching applications, and algorithm advancements in the field of LLM Finetuning.

Eleuther Research Highlights

Eleuther ▷ #research (37 messages🔥):

- KANs could simplify implementation on unconventional hardware: Members discussed potential advantages of KANs (Kriging Approximation Networks) over MLPs (Multilayer Perceptrons) for implementation in analog, optical, and other 'weird hardware,' pointing out that KANs only require summation and non-linearities rather than multipliers.

- Training large language models to encode questions first: A shared paper suggested that exposing LLMs to QA pairs before continued pre-training on documents could benefit the encoding process due to the straightforwardness of QA pairs in contrast to the complexity of documents. Read more.

- Transformers combined with graph neural networks for reasoning: A proposed hybrid architecture combines the language understanding of Transformers with the robustness of GNN-based neural algorithmic reasoners, aimed at addressing the fragility of Transformers in algorithmic reasoning tasks. For more details, see the paper.

- Pixels-as-tokens in VISION Transformers: A paper presented the novel concept of operating directly on individual pixels using vanilla Transformers with randomly initialized, learnable per-pixel position encodings. The discussion highlighted the unconventional approach and its potential impacts. Link.

- PowerInfer-2 boosts LLM inference on smartphones: The newly introduced framework, PowerInfer-2, significantly accelerates inference for large language models on smartphones, leveraging heterogeneous resources for fine-grained neuron cluster computations. Evaluation shows up to a 29.2x speed increase compared to state-of-the-art frameworks. Full details.

Interconnects (Nathan Lambert)

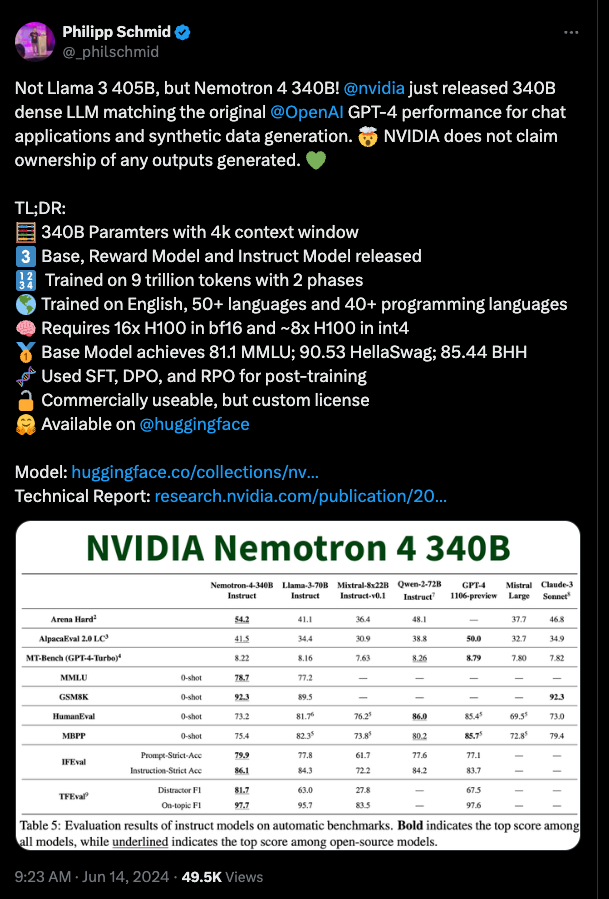

NVIDIA unveils 340B model

- The Nemotron-4-340B-Base is a large language model with 340 billion parameters, supporting a context length of 4,096 tokens for synthetic data generation. It has extensive training on 9 trillion tokens across multiple languages.

- Synthetic data model with permissive license: Included in the release is a synthetic data permissive license, although concerns were raised about hosting a PDF of the license.

- Experimental Claude Steering API access: Claude has an experimental Steering API available for users to steer internal features. Sign-ups are open for limited access for research purposes only.

- Sakana AI secures billion-dollar valuation: Japanese startup Sakana AI, developing alternatives to transformer models, raised funds from NEA, Lux, and Khosla, achieving a $1B valuation. More details are available in the provided link.

Links mentioned:

- Tweet from Stephanie Palazzolo (@steph_palazzolo): Details investment in Sakana AI

- nvidia/Nemotron-4-340B-Base · Hugging Face: No description found

- Tweet from Alex Albert (@alexalbert__): Provides access to Claude's experimental Steering API

AI News Subscriptions and Social Media

To stay updated with the latest AI news, readers can subscribe to the AI News newsletter by entering their email in the subscription form. Additionally, readers can connect with AI News on Twitter and through the latent.space website. The footer also provides links to AI News on social media platforms, highlighting the Twitter account and the Newsletter. AI News is brought to readers by Buttondown, a platform that simplifies starting and growing newsletters.

FAQ

Q: What is the significance of NVIDIA's Nemotron-4-340B model upgrade?

A: The Nemotron-4-340B model is an upgrade from Nemotron-4 15B and is highlighted for its synthetic data generation process, relying heavily on synthetic data with over 98% coming from a generated pipeline.

Q: What is the alignment process discussed in relation to Nemotron-4-340B?

A: The alignment process involves using human-annotated data and showcasing the success of synthetic data in various prompts within the Nemotron-4-340B model.

Q: What are some comparisons made to other models like Mixtral and Llama 3 in the discussion?

A: The section mentions comparisons to other models like Mixtral and Llama 3, along with the release of a Reward Model version, highlighting the advancements in NVIDIA's Nemotron models.

Q: What is highlighted in the AI Twitter Recap subsection?

A: The AI Twitter Recap covers various Twitter recaps about AI models and architectures, such as Mamba-2-Hybrid and Samba, providing insights into the latest discussions and developments in the AI community.

Q: What discussions and developments are covered in the Discord recap related to AI?

A: The Discord recap covers discussions on processor comparisons, model performance, CUDA anomalies, software compatibility, creation of image bot tools, anatomy accuracy in models, GPU compatibility, challenges, and advancements in AI hardware like wafer-scale chips.

Q: What is the Cohere Chat Interface commended for?

A: Cohere's playground chat interface was commended for its user experience, particularly citing a citation feature that displays a modal with text and source links upon clicking inline citations.

Q: Where can developers find open source tools for chat interface development?

A: Developers are directed to the cohere-toolkit on GitHub as a resource for creating chat interfaces with citation capabilities, and the crafting-effective-prompts documentation for effective prompting.

Q: What were some of the topics discussed in the AI community related to AI and machine learning technologies?

A: Topics discussed in the AI community include troubleshooting RAG chain outputs, crafting JSON objects, integrating LangChain with pgvector, NVIDIA's Nemotron models, collaborative problem-solving, advancements in AI training tools, PostgreSQL extensions, model performance, architecture limitations, and multilingual AI development.

Q: What is highlighted in the section discussing Eleuther's research?

A: The research section highlights discussions on KANs simplifying implementation on unconventional hardware, training large language models to encode questions first, combining Transformers with graph neural networks for reasoning, operating on individual pixels in VISION Transformers, and boosting LLM inference on smartphones with PowerInfer-2.

Q: What are some key features of NVIDIA's Nemotron-4-340B model unveiling?

A: The Nemotron-4-340B model boasts 340 billion parameters, supports a context length of 4,096 tokens for synthetic data generation, has training on 9 trillion tokens across multiple languages, includes a synthetic data permissive license, and offers experimental access to Claude Steering API for research purposes.

Get your own AI Agent Today

Thousands of businesses worldwide are using Chaindesk Generative

AI platform.

Don't get left behind - start building your

own custom AI chatbot now!