[AINews] Liquid Foundation Models: A New Transformers alternative + AINews Pod 2

Chapters

AI Twitter Recap

AI Discord Recap

Improvements and Discussions in Various AI Discord Channels

Confusion Around Long Explanations in Docstrings

Aider Tools and Features

Computer Vision

LM Studio Hardware Discussion

Metal Shading Language Specification & M3 Device Performance

Nous Research AI Chat Highlights

Various AI Discussions

Comparing Different Models in AI Conversations

Conversations on OpenAI, Transparency, and Research Standards

Discussion on Lightweight Chat Models and California AI Training Bill

Client Connection Issues, Ngrok Error, and Open Interpreter Demo

AI Twitter Recap

AI Model Updates and Developments

- Meta AI announced Llama 3.2, including multimodal models with vision capabilities. Google introduced new Gemini AI models, and OpenAI enhanced Advanced Voice Mode.

Open Source and Regulation

- California Governor Gavin Newsom vetoed SB 1047, an AI regulation bill, in a move widely seen as a win for open-source AI. Open-source AI continues to thrive, with active projects on GitHub and Hugging Face passing 1 million hosted models.

AI Research and Development

- Google upgraded NotebookLM's Audio Overviews to support YouTube videos. The Meta AI chatbot is now multimodal and can recognize images. A study found that the o1-preview model outperforms GPT-4 in medical scenarios.

Industry Trends and Collaborations

- James Cameron joins Stability AI board. EA unveils AI concept for user-generated video game content.

AI Discord Recap

Continuing with the updates from various Discord channels focused on AI, here is a recap of the latest discussions and developments:

-

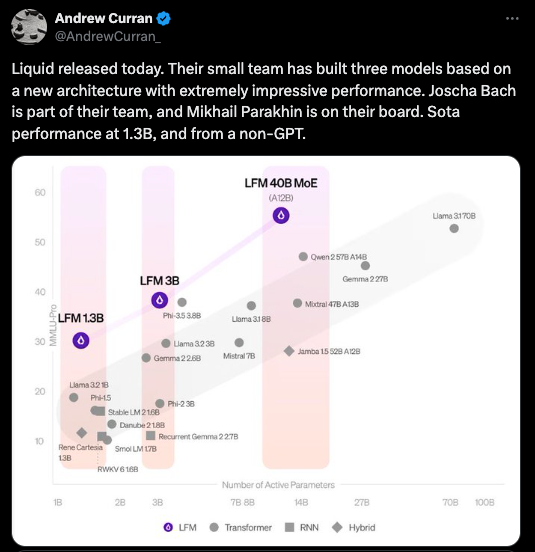

Theme 1: AI Models Make Waves with New Releases and Upgrades: LiquidAI launched Liquid Foundation Models (LFMs) featuring 1B, 3B, and 40B models, aiming for superior performance. Aider v0.58.0 introduced exciting enhancements like model pairing and autonomous code creation. Microsoft's Hallucination Detection Model upgraded to Phi-3.5, showcasing improved metrics.

-

Theme 2: AI Regulations and Legal Battles Heat Up: Governor Gavin Newsom vetoed the AI Safety Bill in California, sparking debates on regulation. OpenAI faced talent exodus over compensation demands, while LAION won a copyright case in Germany, setting a beneficial precedent for AI research.

-

Theme 3: Community Grapples with AI Tool Challenges: Users reported challenges with Perplexity, OpenRouter, and Gradio, highlighting issues with inconsistent performance, rate limits, and user experience. Discussions also unfolded on OpenAI's research transparency and GPU performance comparisons.

-

Theme 4: Hardware Woes Plague AI Enthusiasts: Discussions centered around NVIDIA's Jetson AGX Thor, driver performance issues, and considerations regarding AMD GPUs, reflecting concerns and preferences around hardware upgrades and compatibility.

-

Theme 5: AI Expands into Creative and Health Domains: NotebookLM introduced a podcast feature, while progress in schizophrenia treatment and debates on AI-generated art versus human creativity sparked discussions on the expanding domains of AI impact and development.

Improvements and Discussions in Various AI Discord Channels

The AI community is actively discussing new developments across many Discord channels. Users compared Felo's academic search features favorably with Perplexity's, and Perplexity AI pages highlighted the launch of the first new schizophrenia medication in 30 years. Members raised concerns about API inconsistencies and suggested parameter adjustments to improve performance. AI applications in Texas counties were also showcased, while other channels focused on AI art, translation models, chat GUI solutions, and search functionality across different AI models.

Confusion Around Long Explanations in Docstrings

Members were unsure whether long explanations in docstrings reduce the accuracy of class signatures. They stressed the importance of clear documentation, but first asked which language model was being used. Discussions on other servers, including the OpenInterpreter, LAION, LangChain AI, and MLOps @Chipro Discords, covered AI execution instructions, decoding packet errors, ngrok authentication troubles, using Jan AI as a computer control interface, a request for French audio datasets, LAION's copyright victory, and synergies between visual language models and latent diffusion models.
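To make the docstring question concrete, here is a hypothetical sketch of how a signature-style framework might turn a class docstring into prompt instructions. The class `AnswerQuestion`, the helper `build_instructions`, and the `inputs`/`outputs` attributes are invented for illustration and are not any specific library's API. The point is only that the whole docstring is copied into the prompt, so every extra sentence of explanation becomes extra instruction text for the model.

```python
import inspect


class AnswerQuestion:
    """Answer the question in one short sentence."""

    # Hypothetical field declarations for a signature-style class.
    inputs = ("question",)
    outputs = ("answer",)


def build_instructions(signature_cls: type) -> str:
    # The cleaned docstring is used verbatim as the task description,
    # so a long docstring directly lengthens the model's instructions.
    doc = inspect.getdoc(signature_cls) or ""
    lines = [
        doc,
        "Inputs: " + ", ".join(signature_cls.inputs),
        "Outputs: " + ", ".join(signature_cls.outputs),
    ]
    return "\n".join(lines)


print(build_instructions(AnswerQuestion))
```

Keeping the docstring to one clear task statement, and moving long background material elsewhere, is one way to keep such generated instructions focused.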

Aider Tools and Features

The Aider v0.58.0 release introduces model pairing, support for new models, session enhancements, and clipboard command updates. Users can now pair a strong reasoning model such as o1-preview as the Architect with a faster model such as gpt-4o as the Editor. The update also adds support for the new Gemini 002 models and improves support for Qwen 2.5 models. Session enhancements let users skip confirmation questions and make autocomplete for /read-only more useful. On the clipboard side, the new /copy command copies the last LLM response, and /paste handles pasting from the clipboard. Aider also now follows HTTP redirects when scraping URLs.
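The Architect/Editor pairing is a two-stage pipeline: one model plans the change, and a second model turns the plan into concrete edits. The sketch below shows only that control flow under stated assumptions. `architect_then_edit`, `fake_architect`, and `fake_editor` are hypothetical stand-ins for real model calls, and this is not Aider's own code; Aider exposes the mode through its own command-line options.

```python
from typing import Callable

# Hypothetical model interface: prompt in, text out. In practice these would
# wrap API calls to a reasoning model (e.g. o1-preview) and a faster editor
# model (e.g. gpt-4o); here they are plain functions so the sketch runs offline.
Model = Callable[[str], str]


def architect_then_edit(request: str, architect: Model, editor: Model) -> str:
    # Stage 1: the architect describes *how* to solve the request.
    plan = architect(f"Describe the code changes needed for: {request}")
    # Stage 2: the editor turns that plan into concrete file edits.
    return editor(f"Apply this plan as concrete edits:\n{plan}")


def fake_architect(prompt: str) -> str:
    return "PLAN: rename function foo to bar"


def fake_editor(prompt: str) -> str:
    # Echo the last line (the plan) so the output shows what the editor received.
    plan_line = prompt.splitlines()[-1]
    return f"EDIT based on {plan_line}"


print(architect_then_edit("rename foo", fake_architect, fake_editor))
# prints: EDIT based on PLAN: rename function foo to bar
```

The design choice is to spend the expensive reasoning model's tokens only on planning, while the cheaper model handles the mechanical, format-sensitive editing step.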

Computer Vision

OmDet-Turbo Model Launch:

- The team announced support for the OmDet-Turbo model, which brings real-time zero-shot object detection and draws on ideas from Grounding DINO, OWLv2, and RT-DETR. The update aims to improve performance across a range of object detection tasks.

Keypoint Detection Task Page Released:

- A new keypoint-detection task page has been introduced, with support for SuperPoint, a model for interest point detection and description. Details are in the linked documentation. SuperPoint uses a self-supervised training framework and applies to homography estimation and image matching.
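SuperPoint produces keypoints plus a descriptor vector for each one. A common generic way to match keypoints between two images is mutual nearest-neighbour matching: keep a pair only if each descriptor is the other's closest match. The sketch below is that generic technique in plain Python, not the SuperPoint or transformers API; the toy 2-D descriptors are made up, and real descriptors are much higher-dimensional.

```python
import math


def mutual_nn_matches(desc_a, desc_b):
    """Return (i, j) pairs where A[i] and B[j] are each other's nearest neighbour."""
    if not desc_a or not desc_b:
        return []
    # Nearest descriptor in B for every descriptor in A, and vice versa.
    nn_ab = [min(range(len(desc_b)), key=lambda j: math.dist(a, desc_b[j])) for a in desc_a]
    nn_ba = [min(range(len(desc_a)), key=lambda i: math.dist(b, desc_a[i])) for b in desc_b]
    # Keep only pairs that agree in both directions.
    return [(i, j) for i, j in enumerate(nn_ab) if nn_ba[j] == i]


# Toy descriptors: A[2] and A[3] both prefer B[2], but only A[3] is B[2]'s nearest.
desc_a = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0], [6.2, 6.2]]
desc_b = [[1.1, 0.9], [0.1, 0.0], [6.0, 6.0]]
print(mutual_nn_matches(desc_a, desc_b))  # [(0, 1), (1, 0), (3, 2)]
```

The surviving matches would then typically feed a robust estimator for tasks such as homography estimation.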

Community Eager for More Models:

- Interest in keypoint detection is growing, with users eager for future integrations of models such as LoFTR, LightGlue, and OmniGlue.

Fine-tuning TrOCR Models Discussion:

- A user asked whether to fine-tune on their dataset starting from 'trocr-large-stage1' (the base model) or 'trocr-large-handwriting' (already fine-tuned on the IAM dataset), and whether fine-tuning an already fine-tuned model yields better performance.

LM Studio Hardware Discussion

The LM Studio hardware channel covered the NVIDIA Jetson AGX Thor and its 128GB of VRAM, comparisons between GPUs such as the 3090, 3090 Ti, and P40, GPU market pricing, renting GPUs for AI workloads, and NVIDIA driver problems on Linux. Members weighed potential upgrades to the Jetson AGX Thor, compared performance and pricing across GPUs, considered the A6000 as a high-VRAM alternative, discussed renting from platforms such as Runpod and Vast, and reported driver difficulties, especially with VRAM offloading.
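Much of the GPU comparison comes down to simple memory arithmetic: weight memory is roughly parameters times bits per weight divided by 8, and whatever does not fit in VRAM must be offloaded. The sketch below is a rough, hypothetical estimator; it assumes equally sized layers and a fixed `reserve_gb` budget for the KV cache and runtime overhead, and it ignores everything else that real runtimes allocate.

```python
def weight_memory_gb(n_params_billion: float, bits_per_weight: float) -> float:
    # (params * 1e9) * bits / 8 bytes, reported in GB (1e9 bytes):
    # the 1e9 factors cancel, leaving billions of params * bits / 8.
    return n_params_billion * bits_per_weight / 8


def layers_on_gpu(total_layers: int, model_gb: float, vram_gb: float,
                  reserve_gb: float = 2.0) -> int:
    # Assumption: every layer is the same size, and reserve_gb is kept free
    # for the KV cache and runtime overhead. Remaining layers are offloaded.
    per_layer_gb = model_gb / total_layers
    usable_gb = max(vram_gb - reserve_gb, 0.0)
    return min(total_layers, int(usable_gb // per_layer_gb))


model_gb = weight_memory_gb(70, 4)          # 70B parameters at 4-bit -> 35.0 GB
print(model_gb)
print(layers_on_gpu(80, model_gb, 24))      # 24 GB card: 50 of 80 layers fit
print(layers_on_gpu(80, model_gb, 48))      # 48 GB card: all 80 layers fit
```

The 80-layer figure is an illustrative assumption for a 70B-class model; check the actual model configuration before relying on such numbers.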

Metal Shading Language Specification & M3 Device Performance

For anyone working with Metal, the Metal Shading Language Specification is recommended as a foundational resource. Users reported challenges with M3 devices, noting that some features and resources are still catching up. One user said their enthusiasm was dampened when they tried to use the device to train on-device specialists, because it struggled to handle queries correctly.

Nous Research AI Chat Highlights

In this section, members discussed AI projects and tooling, including anti-spam tools, AutoMod features, collaboration on Triton projects, GPU MODE, and Modular community meetings. Conversations ranged from enthusiasm for challenging tasks to the complexity of memory management. The section also touches on AI ethics, quantum computing for data generation, and the release of the VPTQ quantization algorithm.
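VPTQ belongs to the family of vector quantization methods, which store groups of weights as indices into a codebook instead of storing each weight individually. The sketch below is plain vector quantization with a small, hand-picked codebook, offered only to illustrate the idea; it is not VPTQ's algorithm, and how VPTQ actually builds and optimizes its codebooks is not shown. All names and values here are hypothetical.

```python
import math


def nearest_code(vec, codebook):
    # Index of the codebook entry closest to vec (Euclidean distance).
    return min(range(len(codebook)), key=lambda k: math.dist(vec, codebook[k]))


def vq_encode(weights, dim, codebook):
    # Split a flat weight row into sub-vectors of length `dim` and keep only
    # the index of the nearest codebook entry for each sub-vector.
    if len(weights) % dim != 0:
        raise ValueError("weight length must be a multiple of dim")
    return [nearest_code(weights[i:i + dim], codebook) for i in range(0, len(weights), dim)]


def vq_decode(indices, codebook):
    # Reconstruct an approximate weight row by concatenating codebook entries.
    out = []
    for k in indices:
        out.extend(codebook[k])
    return out


codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, 0.5]]  # hypothetical 2-D codebook
weights = [0.9, 1.2, -0.1, 0.05, -0.8, 0.4]
codes = vq_encode(weights, 2, codebook)
print(codes)                       # [1, 0, 2]
print(vq_decode(codes, codebook))  # [1.0, 1.0, 0.0, 0.0, -1.0, 0.5]
```

With a 3-entry codebook each pair of weights needs only 2 bits of index, which is where the compression comes from; the cost is the reconstruction error visible in the decoded row.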

Various AI Discussions

This section covers a range of discussions and updates on AI technologies. It includes speculation about achieving Artificial General Intelligence (AGI) and the role of financial resources in AI development. The Perplexity AI channel discussed performance issues, API inconsistencies, and comparisons between Felo and Perplexity. Perplexity AI pages also highlighted the Multiverse, the Israel-Hezbollah conflict, new AI design tools, AI applications in Texas counties, and the launch of the first new schizophrenia medication in 30 years. The Eleuther section features new members joining the conversation, coordination for upcoming AI conferences, Liquid AI's Foundation Models, concerns about dengue fever in Singapore, and open-source LLM development. Finally, OpenAI discussions covered Aider's code editing capabilities, regulation under the EU AI Act, Meta's video translation feature, using AI for writing projects, and ChatGPT accessibility on Huawei devices.

Comparing Different Models in AI Conversations

This section covers discussions about different models and approaches in AI. It starts by clarifying the difference between Process Reward Models (PRMs), which score individual reasoning steps, and value functions in reinforcement learning, which estimate the expected future return from a state. The conversation then turns to whether PRMs could improve data efficiency and training stability compared with value functions. One member proposed combining a 1-bit BitNet with sparsity masks, so that a sign bit plus a mask yields effectively ternary weights for faster Large Language Models (LLMs). Others showed interest in collaborating on swarm LLM architectures. Another proposal involves simplifying physics simulations by giving objects translation-, rotation-, and volume-equivariant representations, aiming for more intuitive and efficient simulations.
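One reading of the BitNet-plus-sparsity-mask idea is that a 1-bit sign combined with a 0/1 mask encodes exactly the ternary values {-1, 0, +1}, and ternary weights let a dot product run on additions and subtractions alone. The sketch below illustrates only that encoding under this assumption; the function names are hypothetical and this is not BitNet's implementation.

```python
def ternary_from_sign_and_mask(sign_bits, mask):
    # sign bit 1 -> +1, sign bit 0 -> -1; a mask value of 0 zeroes the weight,
    # so together they encode the ternary set {-1, 0, +1}.
    return [(1 if s else -1) * m for s, m in zip(sign_bits, mask)]


def dot_ternary(weights, x):
    # With ternary weights, no multiplications are needed:
    # add x_i for +1, subtract x_i for -1, skip zeros entirely.
    acc = 0.0
    for w, xi in zip(weights, x):
        if w == 1:
            acc += xi
        elif w == -1:
            acc -= xi
    return acc


w = ternary_from_sign_and_mask([1, 0, 1, 0], [1, 1, 0, 1])
print(w)                                          # [1, -1, 0, -1]
print(dot_ternary(w, [0.5, 2.0, 3.0, -1.0]))      # -0.5
```

Skipping masked (zero) weights is also where a sparsity mask could save compute, which is presumably the appeal of the proposal.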

Conversations on OpenAI, Transparency, and Research Standards

The section discusses various topics related to OpenAI, transparency, and research standards in the AI community:

- PearAI Controversy: PearAI was accused of code theft from Continue.dev, sparking ethical concerns.

- LeCun on Research Standards: Yann LeCun criticized relying on blog posts rather than peer-reviewed papers to establish research validity, highlighting the tension between product pressure and research integrity.

- Debate Over OpenAI's Transparency: Critics questioned OpenAI's transparency, arguing that pointing to a blog post is no substitute for substantively communicating research findings.

- Skepticism Over Peer Review: Some members expressed skepticism toward peer review in research publishing.

- Impacts of OpenAI's Research Blog: Members discussed OpenAI's research blog and whether its information sharing, such as the Cost of Transport insights, adequately addresses transparency concerns; opinions were mixed.

Discussion on Lightweight Chat Models and California AI Training Bill

The OpenAccess AI Collective community discussed using lightweight chat models to comply with a new California law that requires disclosure of AI training sources. Members explored using these models to create 'inspired by' datasets for legal compliance, and considered fine-tuning them to clean up messy web-crawl data. The community was also excited about Liquid AI's new foundation models and their implications in light of the recent legislative changes.

Client Connection Issues, Ngrok Error, and Open Interpreter Demo

- Client connection troubles: A user reported that their phone was stuck on the 'Starting...' page.
  - A member suggested posting setup details in the designated channel for help.

- Ngrok authentication problem: A frustrated member sought help with an ngrok error about a verified account and authtoken.
  - Members speculated that the API key was not being loaded from the .env file.

- Demo of Open Interpreter usage: A member shared a YouTube video demonstrating flashing 01 devices with software based on Open Interpreter.
  - The video shows the software's capabilities.

Links mentioned: ERR_NGROK_4018 | ngrok documentation, Human Devices 01 Flashing Demo
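A quick way to test the ".env not loading" theory is to parse the file yourself and list which required keys are missing or empty. The sketch below is a minimal, hypothetical checker in plain Python: it handles only `KEY=VALUE` lines, `#` comments, and simple quotes, and the variable name `NGROK_AUTHTOKEN` is an assumption, since the client in question may read a differently named key.

```python
import os


def parse_env(text: str) -> dict:
    """Minimal .env parser: KEY=VALUE lines, '#' comments, optional quotes."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def load_env_file(path: str) -> dict:
    with open(path, encoding="utf-8") as fh:
        return parse_env(fh.read())


def missing_keys(env: dict, required) -> list:
    # A key counts as present if it is non-empty in the parsed file
    # or already exported in the real process environment.
    return [k for k in required if not (env.get(k) or os.environ.get(k))]


sample = '# hypothetical .env contents\nNGROK_AUTHTOKEN="abc123"\nAPI_KEY=\n'
print(missing_keys(parse_env(sample), ["NGROK_AUTHTOKEN"]))
```

If a key shows up as missing here, the problem is likely the file's path or format rather than the token itself.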

FAQ

Q: What are some recent AI model updates and developments mentioned in this issue?

A: Meta AI announced Llama 3.2 with multimodal models and vision capabilities, Google introduced Gemini AI models, OpenAI enhanced Advanced Voice Mode, and LiquidAI launched Liquid Foundation Models (LFMs) featuring 1B, 3B, and 40B models.

Q: What recent regulatory development related to AI is mentioned in this issue?

A: The California Governor vetoed SB-1047 related to AI regulation, which was seen as a win for open-source AI. Additionally, the AI community discussed topics surrounding regulations, talent exodus at OpenAI, and a copyright victory by LAION in Germany.

Q: What advancements were made in AI research and development according to the content provided?

A: Google upgraded NotebookLM's Audio Overviews, Meta AI's chatbot became multimodal with image recognition capabilities, and a study showed the o1-preview model outperforming GPT-4 in medical scenarios.

Q: What themes emerged in the AI community discussions around hardware and industry trends?

A: Hardware discussions covered NVIDIA's Jetson AGX Thor and GPU comparisons such as the 3090, 3090 Ti, and P40, while industry news included James Cameron joining the Stability AI board and EA unveiling an AI concept for user-generated video game content.

Q: What are some key topics discussed in the section related to AI tool challenges and advancements?

A: Topics included challenges with tools like Perplexity, OpenRouter, and Gradio, as well as discussions on API inconsistencies, GPU performance, and enhancements in AI applications for different domains like health and creative fields.

Q: What models and approaches were discussed in the AI community related to AI projects and advancements?

A: Discussions covered topics such as Process Reward Models (PRMs) versus value functions in reinforcement learning, using 1-bit BitNet with sparsity masks for LLMs, and collaborations on swarm LLM architecture to advance the field of AI.

Q: What topics were raised in the discussions on OpenAI transparency and research standards?

A: The discussions covered the controversy over PearAI's alleged code theft, Yann LeCun's critique of research standards, criticism of OpenAI's transparency, skepticism toward peer review, and the impact of OpenAI's research blog on research communication.

Q: What were some recent community inquiries and discussions related to AI technologies and advancements?

A: Community discussions ranged from lightweight chat models for legal compliance to the NVIDIA Jetson AGX Thor, and also touched on AGI speculation, financial resources in AI development, and AI ethics.
