[AINews] DataComp-LM: the best open-data 7B model/benchmark/dataset

Chapters

AI Twitter Recap

AI Discord Recap: GPT4O (gpt-4o-2024-05-13)

Performance and Optimization in AI Models

Highlights and Updates

LlamaIndex

Unsloth AI Updates

HuggingFace Cool Finds

HuggingFace Discussions

Nous Research AI Outages and Backlash

Mojo Debugging and Debugging Setup

LM Studio and CUDA Mode Discussions

CUDA Mode Discussions

Interconnects: ML Drama

LlamaIndex Features and Updates

Torchtune Dev

LangChain AI - General

Discussion on Data Privacy and Target Audience Clarification

AI Twitter Recap

Recap of AI-related tweets discussing the release of GPT-4o mini by OpenAI and Mistral NeMo 12B by NVIDIA and Mistral. Notable points include:

- GPT-4o mini capabilities and pricing comparisons
- Mistral NeMo 12B features and licensing information
- Benchmarks and performance comparisons for both models

These tweets provide insights into the advancements in AI models and their applications in various domains.

AI Discord Recap: GPT4O (gpt-4o-2024-05-13)

LLM Advancements:

- Llama 3 release imminent: Rumors that a 400-billion-parameter Llama 3 model will be released in 4 days generated excitement and speculation.

- GPT-4o mini offers cost-efficient performance: GPT-4o mini is viewed as a more affordable and faster alternative to GPT-3.5 Turbo, being around 2x faster and 60% cheaper, as mentioned on GitHub. However, it lacks image support.

Performance and Optimization in AI Models

This section highlights the performance and optimization of various AI models. DeepSeek-V2-Chat-0628 ranks No. 1 among open models on the LMSYS leaderboard, and a separate comparison showed Mojo outperforming JAX on CPUs. The section also mentions open-source initiatives such as SciPhi's Triplex for knowledge graphs and the extensive feature set of Open WebUI, and it covers multimodal AI innovations such as Text2Control and Snowflake Arctic Embed 1.5. Finally, it notes the positive reception of community tools like ComfyUI and experiments on self-awareness in GPT agents.

Highlights and Updates

This section collects highlights and updates from various Discord channels. Topics include Mojo's performance compared with JAX, Async IO API standards in Mojo, the features introduced in Mojo's latest nightly update, the collaboration between Mistral and NVIDIA, and new model releases from MistralAI and OpenAI. It also covers GPT-4o mini's performance, security flaws at OpenAI, Gemma 2's logit capping, and new models such as HQQ+ 2-bit Llama3-8B-Instruct. Community discussions touched on Gemma 2, Sara Hooker's critique of the US AI Act, and the use of Z-loss regularization in training objectives. Lastly, it includes insights on re-ranking to improve response relevance and on the challenges users face with context windows in different language models.
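
Two of the training techniques mentioned above fit in a few lines. The sketch below, in plain Python for readability, shows tanh soft-capping of logits (the form of logit capping associated with Gemma 2) and an auxiliary z-loss that penalizes the squared log of the softmax normalizer. The cap of 30.0 and the 1e-4 coefficient are illustrative assumptions; 1e-4 is the coefficient reported for PaLM, and other models may use different values.

```python
import math
from typing import List


def soft_cap(logits: List[float], cap: float) -> List[float]:
    """Logit soft-capping: cap * tanh(x / cap) keeps every logit inside (-cap, cap)
    while staying roughly linear when |x| is much smaller than cap."""
    return [cap * math.tanh(x / cap) for x in logits]


def logsumexp(logits: List[float]) -> float:
    """Numerically stable log(sum(exp(x))), i.e. log Z for the softmax."""
    m = max(logits)
    return m + math.log(sum(math.exp(x - m) for x in logits))


def cross_entropy_with_z_loss(
    logits: List[float], target: int, z_coeff: float = 1e-4
) -> float:
    """Cross-entropy for one example plus the auxiliary z-loss z_coeff * (log Z)^2.

    The z-loss term pushes log Z toward 0, discouraging the logits from
    drifting to large magnitudes during training.
    """
    log_z = logsumexp(logits)
    ce = log_z - logits[target]  # equals -log softmax(logits)[target]
    return ce + z_coeff * log_z ** 2


capped = soft_cap([0.5, 10.0, 200.0], cap=30.0)
loss = cross_entropy_with_z_loss(capped, target=1)
print([round(x, 3) for x in capped], round(loss, 4))
```

Soft-capping bounds the logits themselves, while z-loss only adds a gradient that discourages large normalizers; the two are complementary rather than interchangeable.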

LlamaIndex

A member inquired about parsing unstructured data like video and music into formats digestible by LLMs, highlighting practical applications and potential use cases for ETL in AI data processing.

Unsloth AI Updates

Mistral-Nemo Model Updates

Discussions covered the Mistral-Nemo model architecture, with links to relevant resources, and highlighted parameter adjustments for computational efficiency.

Support for Mistral-Nemo Model by Unsloth

Unsloth officially announced support for the Mistral-Nemo model, addressing initial hurdles and dynamic RoPE allocation. The community expressed excitement about the support and about the efficiency of Unsloth's features.

Lean Startup: Unsloth Team Insights

The community learned that Unsloth is run by two brothers who efficiently manage various aspects of the project. Members shared humorous and supportive interactions.

Exploring AI Model Alternatives

Discussions covered easier ways to access AI models through alternative platforms like Jan AI and Oobabooga in Colab, emphasizing the importance of user-friendly interfaces for running models.

Future Features and Releases

Unsloth announced upcoming releases including support for vision models and improved model inference and training interfaces. Community participation for feedback and testing was encouraged.

HuggingFace Cool Finds

Circuits Thread Explores Neural Networks:

The Circuits thread offers an experimental format collecting short articles and critical commentary delving into the inner workings of neural networks. It includes components like Curve Detectors, Pose-Invariant Dog Head Detectors, and Polysemantic Neurons.

Surge of Model Releases on a Single Thursday:

A single Thursday brought a wave of significant model releases: DeepSeek's top open-access LMSYS model, Mistral 12B, Snowflake's embedding model, HF's Docmatix dataset, the GoldFinch hybrid model, Arcee-Nova, and Mixedbread+deepset embeddings. Osanseviero remarked, '🌊For those of you overwhelmed by today's releases.'

Noteworthy AI Papers Recently Spotted:

Highlights include ColPali for document retrieval with vision language models, Scaling Agents Across Simulated Worlds, and Chameleon

HuggingFace Discussions

This section covers a range of HuggingFace topics: training with the Llama architecture, testing MathStral for math specialization, how AI Comic Factory enhances story dialogue, the release of OpenAI's GPT-4o mini, and the proof-of-concept launch of the Isari platform for task processing. It also covers ML model layer optimization, paper club promotions, event planning, camera calibration using Transformers, an object detection app in Java, image segmentation for road detection, and the RAG concept for chatbots.

Nous Research AI Outages and Backlash

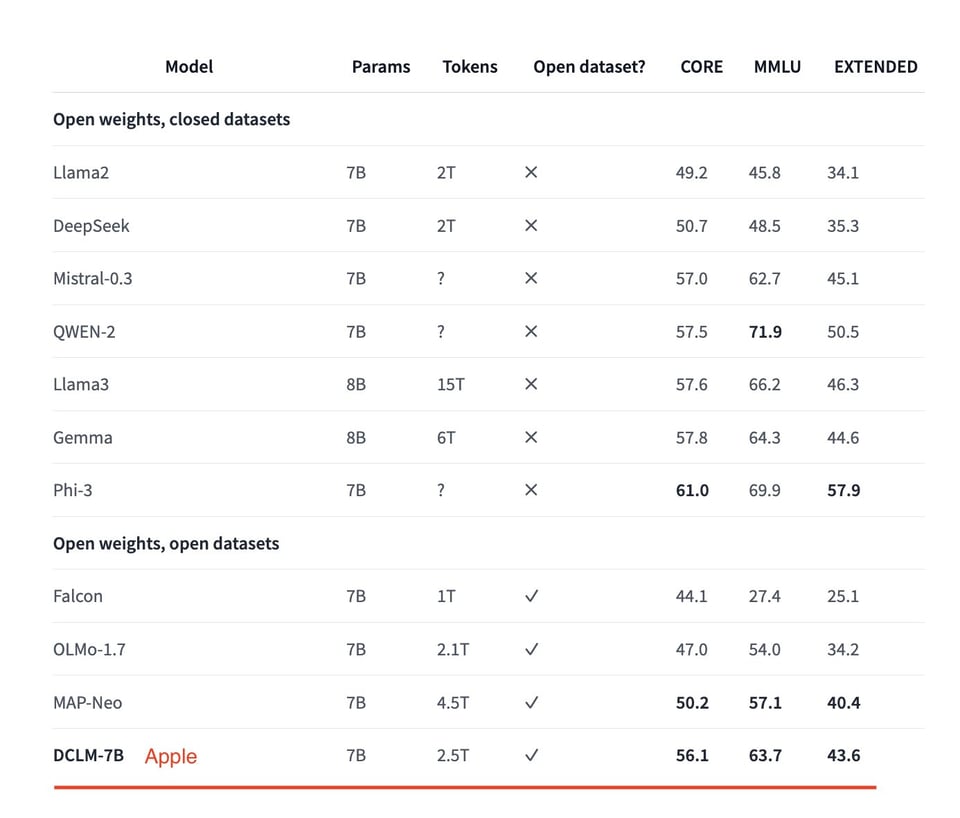

CrowdStrike faced backlash for causing global tech infrastructure outages, with users questioning whether the company's overall impact is a net positive. Some who defended its work against ransomware attacks still acknowledged the significant damage. Apple's release of the DCLM-7B model sparked debate about its capabilities, particularly its context length. The Mistral-Nemo-Instruct-2407 model outperformed its peers, offering a 128k context window and training data that includes multilingual and code content. DeepSeek's quantization results looked promising for CPU inference, with the model ranking #7 globally. The section also touches on various AI model comparisons and benchmark results.

Mojo Debugging and Debugging Setup

Mojo improves debugging tools:

Mojo and MAX aim to provide better debugging tools than traditional Python, C++, and CUDA stacks, and extending debugging to GPUs improves the experience especially for machine learning tasks.

- 'The goal is to show how simple and powerful debugging is with Mojo' says a developer in the channel.

Simplify debugging setup in Mojo:

Setting up Mojo debugging in VS Code is facilitated by the Mojo extension and can be adapted to other editors using LLDB-DAP. Future enhancements will allow stepping through CPU code into GPU calls seamlessly.

- 'It's aimed at general debugging if you have no experience with it, but goes over all the currently implemented Mojo features.'

LM Studio and CUDA Mode Discussions

This section covers LM Studio and CUDA MODE discussions. LM Studio topics include model compatibility across versions, support for BPE pre-tokenization, hardware, and predictions about AI chip supply, along with new model releases such as Apple's open-sourced DCLM 7B model and Snowflake Arctic Embed updates. The CUDA MODE conversations cover NVIDIA's open-sourcing of its kernel modules, the influence of US antitrust laws, and the compatibility and maintenance benefits of open-sourcing.

CUDA Mode Discussions

CUDA MODE ▷ #beginner (3 messages):

- Nsight Compute allows profile export: A suggestion was made to export the captured profile to a file, which can then be opened from the Nsight Compute GUI.

- Nsight Compute CLI User Guide detailed: The User Guide for Nsight Compute CLI provides comprehensive instructions, including a section on launching target applications and migrating from nvprof.

- The guide covers using the command line profiler to print results directly to the command line or store them in a report file.

- Nsight Compute opens ncu-rep files: Nsight Compute can open .ncu-rep report files, giving users flexibility in how they analyze the results.
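
The export-then-inspect workflow described above can be scripted. The sketch below builds the command lines in Python and runs them only when the tools are on PATH; it assumes the ncu CLI's -o (export) and --import options and the ncu-ui GUI launcher, and ./my_app is a hypothetical CUDA application.

```python
import shutil
import subprocess
from typing import List, Optional


def ncu_export_cmd(report_name: str, app_cmd: List[str]) -> List[str]:
    """Profile app_cmd and write the results to <report_name>.ncu-rep."""
    return ["ncu", "-o", report_name, *app_cmd]


def ncu_import_cmd(report_path: str) -> List[str]:
    """Print a previously saved .ncu-rep report to the terminal."""
    return ["ncu", "--import", report_path]


def ncu_gui_cmd(report_path: str) -> List[str]:
    """Open a saved report in the Nsight Compute GUI."""
    return ["ncu-ui", report_path]


def run_if_available(cmd: List[str]) -> Optional[int]:
    """Run cmd if its executable is on PATH; return its exit code, else None."""
    if shutil.which(cmd[0]) is None:
        return None
    return subprocess.run(cmd, check=False).returncode


if __name__ == "__main__":
    # Hypothetical application; replace with your own binary and arguments.
    run_if_available(ncu_export_cmd("profile", ["./my_app"]))
    run_if_available(ncu_import_cmd("profile.ncu-rep"))
```

Printing results directly to the command line, the other mode the guide describes, corresponds to running ncu on the application without -o.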

CUDA MODE ▷ #torchao (7 messages):

- FSDP2 set to Replace FSDP: FSDP2 will replace FSDP and is required going forward, with nf4 cited as an example implementation.

- Low-bit Optimizer and FSDP2 Compatibility: A conversation with the FSDP2 author clarified that low-bit optimizers don’t need to handle FSDP logic as FSDP still provides fp32 sharded parameters.

- The low-bit optimizer can treat these parameters as inputs without worrying about forward/backward FSDP logic.

- DTensor and Custom Subclass Integration: Issues were discussed regarding integrating tensor subclasses with DTensor, including creating a DTensor for a subclass using functions like distribute_tensor().

- Handling gather and scatter operations for DTensor in low-bit optimizers was raised as a significant challenge.

- 1-bit Adam for Communication Overhead Reduction: The potential of 1-bit Adam to reduce communication overhead by quantizing gradients was mentioned.

- Its complexity and difference from the current low-bit optimization approach were acknowledged.

- Experience with DTensor Support for Low-Bit Optimizer: One member shared their experience on DTensor support and the importance of the order of wrapping and composability with FSDP2.

- The member noted that implementing these features introduced several operational challenges beyond those faced with simpler tensor operations.
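
To make the 1-bit idea concrete, the sketch below shows error-feedback sign compression: each value is sent as a single sign bit plus one shared scale, and the quantization error is carried into the next step so it is not lost. This is a simplified, single-process illustration of the general technique, not the 1-bit Adam implementation; as published, 1-bit Adam applies error-compensated compression to the optimizer's momentum after a full-precision warm-up stage.

```python
from typing import List, Tuple


def one_bit_compress(
    values: List[float], error: List[float]
) -> Tuple[List[int], float, List[float]]:
    """Compress values to signs plus one scale, using error feedback.

    values: the tensor to communicate, flattened (e.g. a gradient shard).
    error:  residual left over from the previous step (same length).
    Returns (signs, scale, new_error).
    """
    corrected = [v + e for v, e in zip(values, error)]
    # One common scale choice: the mean absolute value of the corrected tensor.
    scale = sum(abs(c) for c in corrected) / len(corrected)
    signs = [1 if c >= 0 else -1 for c in corrected]
    # Whatever the 1-bit message cannot represent is kept for the next step.
    new_error = [c - scale * s for c, s in zip(corrected, signs)]
    return signs, scale, new_error


def one_bit_decompress(signs: List[int], scale: float) -> List[float]:
    """Reconstruct the approximate tensor on the receiving side."""
    return [scale * s for s in signs]


error = [0.0, 0.0, 0.0]
for step_values in ([0.5, -1.5, 0.1], [0.4, -1.2, 0.2]):
    signs, scale, error = one_bit_compress(step_values, error)
    print(signs, round(scale, 3), [round(e, 3) for e in error])
```

The communication saving comes from sending one bit per element plus a single float; the error buffer is what keeps the accumulated updates unbiased over many steps.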

Interconnects: ML Drama

Two messages were shared in the Interconnects ML Drama channel. The first argues that the AGI mission creates challenges because current business efforts are treated as a sideline to achieving AGI, which may make it hard to align business goals with that central objective. The second reinforces the point: the business side exists, but it is not the primary focus.

LlamaIndex Features and Updates

MistralAI and OpenAI Release New Models:

- Mistral NeMo, a new 12B model from MistralAI, outperforms its 7B predecessor.

LlamaCloud Introduces New Features:

- LlamaCloud now includes LlamaCloud Chat and new team collaboration features to improve user experience.

Boosting Relevance with Re-ranking:

- Re-ranking retrieved results, for example with @postgresml, improves the relevance of responses.
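
Re-ranking is typically a two-stage pipeline: a cheap scorer retrieves a broad candidate set, then a more precise and more expensive scorer reorders those candidates before they reach the LLM. The sketch below shows that structure with two toy scorers; word_overlap and phrase_match are hypothetical stand-ins for an embedding search and a cross-encoder or hosted re-ranker, and nothing here models PostgresML's actual API.

```python
from typing import Callable, List

Scorer = Callable[[str, str], float]


def top_k(query: str, docs: List[str], k: int, score: Scorer) -> List[str]:
    """Return the k docs with the highest score; ties keep their input order."""
    return sorted(docs, key=lambda d: score(query, d), reverse=True)[:k]


def word_overlap(query: str, doc: str) -> float:
    """Cheap first-stage scorer: count of shared lowercase words."""
    return float(len(set(query.lower().split()) & set(doc.lower().split())))


def phrase_match(query: str, doc: str) -> float:
    """Toy 'precise' scorer: big bonus for the exact query phrase, then overlap."""
    bonus = 10.0 if query.lower() in doc.lower() else 0.0
    return bonus + word_overlap(query, doc)


def retrieve_then_rerank(query: str, docs: List[str], k: int, n: int) -> List[str]:
    candidates = top_k(query, docs, k, word_overlap)  # stage 1: broad and cheap
    return top_k(query, candidates, n, phrase_match)  # stage 2: narrow and precise


docs = [
    "tuning postgres and postgres replicas",
    "a guide to postgres tuning",
    "guitar tuning basics",
    "cooking pasta at home",
]
print(retrieve_then_rerank("postgres tuning", docs, k=3, n=1))
```

Here the first stage ties the first two documents and keeps input order, while the re-ranker promotes the exact-phrase match to the top.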

Featuring RAG Evaluation with McDermott:

- A session featuring Yixin Hu and Thomas Hulard explores using LLMs as judges to bring applications into production.
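
The LLM-as-judge pattern usually means prompting a model with the question, the retrieved context, and the candidate answer, then parsing a structured score from its reply. The sketch below shows only that prompt-and-parse loop; the rubric, the 1-5 scale, and the llm callable are illustrative assumptions, not the method presented in the session.

```python
import re
from typing import Callable

JUDGE_TEMPLATE = """You are grading a RAG system's answer.
Question: {question}
Retrieved context: {context}
Answer: {answer}

Rate how well the answer is supported by the context on a 1-5 scale.
Reply with one line of reasoning, then a final line of the form "Score: N"."""


def judge_answer(
    question: str, context: str, answer: str, llm: Callable[[str], str]
) -> int:
    """Ask a judge model for a 1-5 faithfulness score and parse it from the reply."""
    prompt = JUDGE_TEMPLATE.format(question=question, context=context, answer=answer)
    reply = llm(prompt)
    match = re.search(r"Score:\s*([1-5])\b", reply)
    if match is None:
        raise ValueError(f"judge reply contained no score: {reply!r}")
    return int(match.group(1))


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call, so the sketch runs offline."""
    return "The answer restates the context accurately.\nScore: 4"


print(judge_answer("What is FSDP2?", "FSDP2 replaces FSDP.", "A successor to FSDP.", fake_llm))
```

Raising on an unparseable reply, rather than defaulting to a score, keeps silent judge failures from skewing evaluation results.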

Sign Up for Upcoming Events:

- Reminder to sign up for community events and stay tuned for future updates.

Torchtune Dev

Testing LLMs: Forced HAHAHA Response:

Members discussed attempts to train an LLM to respond with 'HAHAHA' to every input. Despite adjusting settings, the LLM did not learn as expected.

Visibility of Torchtune Recipes:

There was a conversation about improving the visibility and documentation of Torchtune's available recipes. Autogenerating docs from recipe docstrings was proposed as a useful step forward.
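
Generating recipe docs from docstrings can be as simple as walking a module with Python's inspect module. The sketch below builds a one-line summary per public function; the recipes module and both recipe functions are hypothetical placeholders, not Torchtune's actual recipe layout.

```python
import inspect
import types
from typing import List


def recipe_summaries(module: types.ModuleType) -> List[str]:
    """Return 'name: first docstring line' for each public function in module."""
    lines = []
    # getmembers returns (name, object) pairs sorted by name.
    for name, obj in inspect.getmembers(module, inspect.isfunction):
        if name.startswith("_"):
            continue
        doc = inspect.getdoc(obj) or "(no docstring)"
        lines.append(f"{name}: {doc.splitlines()[0]}")
    return lines


# Hypothetical stand-in for a module of recipe entry points.
recipes = types.ModuleType("recipes")


def full_finetune(cfg):
    """Full-parameter fine-tuning on a single device.

    Further details would appear in the generated reference page.
    """


def lora_finetune(cfg):
    """LoRA fine-tuning with frozen base weights."""


recipes.full_finetune = full_finetune
recipes.lora_finetune = lora_finetune

print("\n".join(recipe_summaries(recipes)))
```

Because the summaries come from the docstrings themselves, the docs stay in sync with the code whenever a recipe's docstring is updated.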

Unified Dataset Abstraction RFC:

A new RFC was discussed that aims to unify instruct and chat datasets to support multimodal data. Key feedback included usability improvements such as separating tokenizer and prompt templating from other dataset configurations, as detailed in the RFC.
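
The separation the RFC feedback asks for can be sketched as a dataset that composes three independent pieces: a message transform (raw row to role/content messages), a prompt template, and a tokenizer. All names below are hypothetical and this is not the RFC's proposed API; the toy character-level tokenizer only keeps the example self-contained.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Message = Dict[str, str]  # {"role": ..., "content": ...}


@dataclass
class ChatStyleDataset:
    """Hypothetical unified dataset; each concern is a swappable component."""

    rows: List[dict]
    message_transform: Callable[[dict], List[Message]]  # raw row -> messages
    prompt_template: Callable[[List[Message]], str]     # messages -> text
    tokenizer: Callable[[str], List[int]]               # text -> token ids

    def __len__(self) -> int:
        return len(self.rows)

    def __getitem__(self, i: int) -> List[int]:
        messages = self.message_transform(self.rows[i])
        return self.tokenizer(self.prompt_template(messages))


def instruct_to_messages(row: dict) -> List[Message]:
    """Instruct rows become a two-turn conversation, so both formats share one path."""
    return [
        {"role": "user", "content": row["instruction"]},
        {"role": "assistant", "content": row["output"]},
    ]


def chat_to_messages(row: dict) -> List[Message]:
    """Chat rows already hold a list of messages."""
    return list(row["messages"])


def simple_template(messages: List[Message]) -> str:
    return "\n".join(f"{m['role']}: {m['content']}" for m in messages)


def char_tokenizer(text: str) -> List[int]:
    """Toy tokenizer: one token id per character (Unicode code point)."""
    return [ord(c) for c in text]


instruct = ChatStyleDataset(
    [{"instruction": "Hi", "output": "Hello!"}],
    instruct_to_messages, simple_template, char_tokenizer,
)
chat = ChatStyleDataset(
    [{"messages": [{"role": "user", "content": "Hi"}, {"role": "assistant", "content": "Hello!"}]}],
    chat_to_messages, simple_template, char_tokenizer,
)
print(instruct[0] == chat[0])  # both formats yield identical token ids
```

Only the message transform differs between the instruct and chat cases; a multimodal variant could attach image references at that same step without touching the template or tokenizer.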

Streamlining Error Handling:

There was a suggestion to streamline error handling in recipes by moving common validation functions out of individual recipes. This would help focus user attention on key configurations and reduce boilerplate code.
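
One way to pull shared checks out of individual recipes is a single validation helper that every recipe calls first and that reports all problems at once. The config fields, supported dtypes, and function names below are hypothetical placeholders, not Torchtune's actual config schema.

```python
from dataclasses import dataclass

# Hypothetical set of dtypes the recipes accept.
SUPPORTED_DTYPES = {"fp32", "bf16"}


@dataclass
class RecipeConfig:
    # Hypothetical fields, for illustration only.
    batch_size: int
    lr: float
    dtype: str


def validate_common(cfg: RecipeConfig) -> None:
    """Checks shared by every recipe; raises one ValueError listing all problems."""
    errors = []
    if cfg.batch_size <= 0:
        errors.append(f"batch_size must be positive, got {cfg.batch_size}")
    if cfg.lr <= 0:
        errors.append(f"lr must be positive, got {cfg.lr}")
    if cfg.dtype not in SUPPORTED_DTYPES:
        errors.append(f"dtype must be one of {sorted(SUPPORTED_DTYPES)}, got {cfg.dtype!r}")
    if errors:
        raise ValueError("; ".join(errors))


def lora_recipe(cfg: RecipeConfig) -> str:
    validate_common(cfg)  # shared checks live in one place
    # ... recipe-specific setup and training would follow here ...
    return "ok"


print(lora_recipe(RecipeConfig(batch_size=8, lr=1e-4, dtype="bf16")))
```

Collecting every error before raising means a user fixes a bad config in one pass instead of one failure at a time.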

LangChain AI - General

prince.dhankhar: How Can We Send Timestamps To Each Chat Message to ChatOllama using LangChain?

Model-specific wording for prompts unnecessary:

One member questioned if specific wording from model descriptions is needed in ChatPromptTemplate for accuracy.

- Another member clarified that LangChain's ChatPromptTemplate abstracts this away, making model-specific markers like <|assistant|> unnecessary.

Using ChatPromptTemplate for prompt creation:

An example was shared showing how to create a ChatPromptTemplate from an array of messages, where each message is a pair of role and message text.

Links mentioned:

- Issues · langchain-ai/langchain

- [Conceptual guide | 🦜️🔗 Langchain](https://js.langchain.com/v0.2/docs/concepts/?utm_source=ainews&utm_medium=email&utm_campaign=ainews-apple-dclm-7b-the-best-new-open-weights#chatprompttemplates)

- Build a Simple LLM Application with LCEL | 🦜️🔗 Langchain

Discussion on Data Privacy and Target Audience Clarification

- Sensitive Data Concerns: Many businesses are hesitant to share sensitive data with third parties due to data privacy and security concerns.

- Businesses Prioritize Data Privacy: There is an increasing caution among businesses when sharing sensitive information externally.

- Defining Target Audience for Communication: Members discussed identifying the target audience so that messages can be tailored to engineers, aspiring engineers, product managers, devrels, and solution architects.

- Importance of Targeted Communication: Clarifying the target audience ensures relevant and impactful communication for specific groups.

FAQ

Q: What are the notable AI model releases discussed in the recap?

A: The recap mentions GPT-4o mini by OpenAI, Mistral NeMo 12B by NVIDIA and MistralAI, a rumored 400-billion-parameter Llama 3 model, and others such as DeepSeek's top open-access LMSYS model, Snowflake's embedding model, and HF's Docmatix dataset.

Q: What are the key features of GPT-4o mini and Mistral NeMo 12B models?

A: GPT-4o mini is noted for cost-efficient performance: it is around 2x faster and 60% cheaper than GPT-3.5 Turbo, but lacks image support. Mistral NeMo 12B offers a 128k context window and multilingual and code training data, discussions highlighted parameter adjustments for computational efficiency, and Unsloth has added support for it.

Q: What advancements are discussed in the AI models' performances and optimizations?

A: The recap covers DeepSeek-V2-Chat-0628 ranking first among open models on the LMSYS leaderboard, open-source initiatives like SciPhi's Triplex and Open WebUI, and multimodal AI innovations such as Text2Control and Snowflake Arctic Embed 1.5.

Q: What are some of the discussions surrounding AI model comparisons and performance?

A: Discussions included the positive reception of community tools like ComfyUI, experiments on self-awareness in GPT agents, comparisons of Mojo's performance with JAX, the collaboration between Mistral and NVIDIA, and new model releases such as HQQ+ 2-bit Llama3-8B-Instruct.

Q: What updates are provided on Mistral-Nemo Model, LlamaCloud, Re-ranking, and RAG Evaluation with McDermott?

A: The recap covers the Mistral-Nemo model architecture, LlamaCloud's new Chat and team collaboration features, the role of re-ranking in improving response relevance, and a session featuring Yixin Hu and Thomas Hulard on using LLMs as judges to bring applications into production.
