[AINews] Claude 3.5 Sonnet (New) gets Computer Use • Buttondown

Chapters

Computer Use and API Updates

AI Discord Recap

Exciting AI Developments in Various Discord Communities

Stripping Shows Major Size Reduction

Claude 3.5 Sonnet and OpenRouter Developments

Aider (Paul Gauthier) General

Discussions on Unsloth AI and Troubleshooting

Innovative AI Applications and User Experiences

AI-Driven Fact-Checking Strategies and Interpretability Advancements

Project Popcorn: LLM for Efficient Kernels

OpenAI and Cohere Discussions

Exploring Various Topics in Tech Communities

LangChain AI & OpenAccess AI Collective (axolotl) Discussions

Computer Use and API Updates

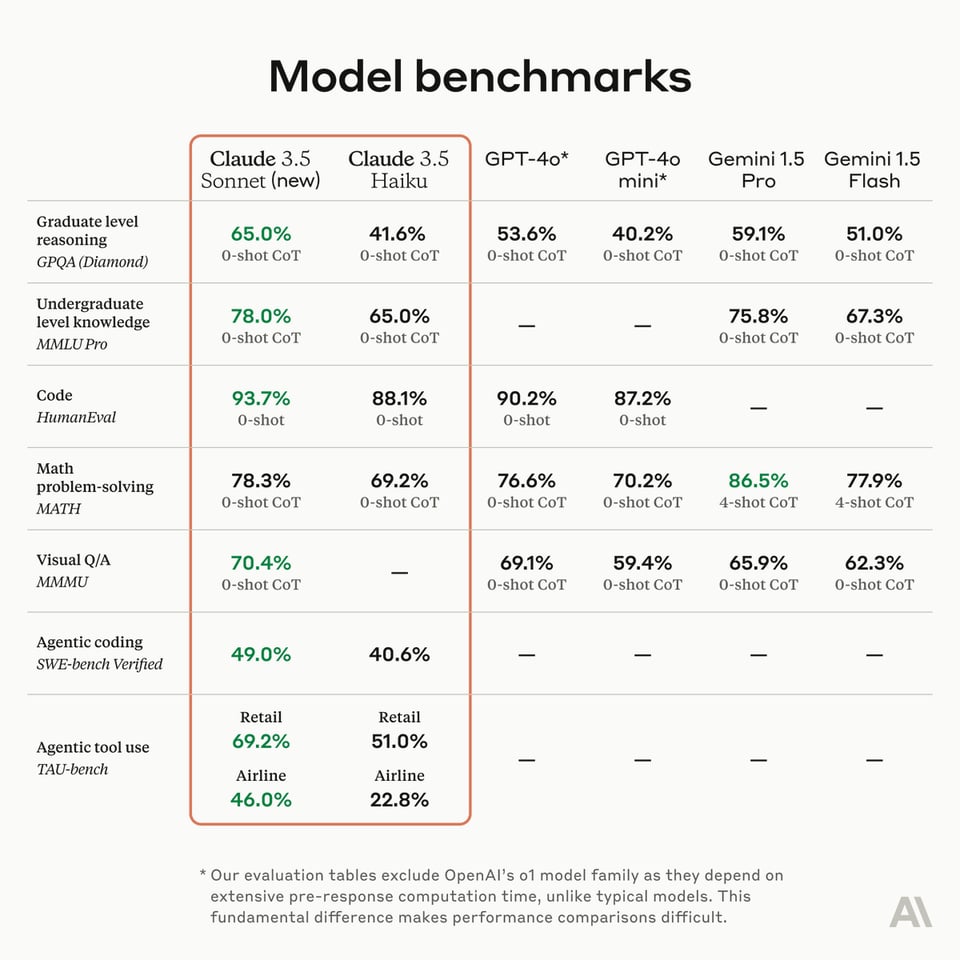

This section covers Anthropic's latest releases: an upgraded Claude 3.5 Sonnet and a new Claude 3.5 Haiku, both showing significant improvements on coding tasks. It also introduces the Computer Use API, which lets Claude operate a computer through screenshots and mouse and keyboard actions, and points to OSWorld as the relevant benchmark for screen-manipulation tasks. The section then reviews how Claude performed on tasks such as code editing and refactoring, with example videos, notable benchmark results, and endorsements from industry observers such as Simon Willison. Finally, it references sponsored content from Zep and opens a discussion on how agent memory storage might change now that computer use is available.
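To make the Computer Use API more concrete, here is a minimal sketch that only builds (and does not send) a Messages API request offering Claude the computer tool. The model ID, beta flag, and tool type reflect the October 2024 public beta as we understand it and should be checked against Anthropic's current documentation; the 1024x768 display size and the prompt are arbitrary. In the real loop, Claude replies with tool_use actions (take a screenshot, click, type) that your own code must execute, returning the results, typically screenshots, as tool_result messages.

```python
import json

# Assumed identifiers from the October 2024 beta; verify before use.
MODEL = "claude-3-5-sonnet-20241022"
COMPUTER_USE_BETA = "computer-use-2024-10-22"


def build_computer_use_request(prompt: str, width: int = 1024,
                               height: int = 768) -> tuple[dict, dict]:
    """Return (headers, body) for a Messages API call that offers the computer tool."""
    headers = {
        "content-type": "application/json",
        "anthropic-version": "2023-06-01",
        "anthropic-beta": COMPUTER_USE_BETA,
        # An "x-api-key" header would go here; omitted so the sketch runs offline.
    }
    body = {
        "model": MODEL,
        "max_tokens": 1024,
        "tools": [
            {
                "type": "computer_20241022",  # screen, mouse, and keyboard tool
                "name": "computer",
                "display_width_px": width,
                "display_height_px": height,
                "display_number": 1,
            }
        ],
        "messages": [{"role": "user", "content": prompt}],
    }
    return headers, body


headers, body = build_computer_use_request("Open a browser and check the weather.")
print(json.dumps(body["tools"][0], indent=2))
```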

AI Discord Recap

The AI Discord Recap collects highlights from Discord channels focused on AI development. Topics include new models such as Claude 3.5 Sonnet, Ministral 8B, and Grok Beta, along with their reported benchmarks and capabilities; users are especially enthusiastic about the performance gains and pricing of these models. Other threads cover AI DJ software, OCR solutions, webinar announcements, robotics advances, and hackathons with substantial prizes.

Exciting AI Developments in Various Discord Communities

This section highlights advances and discussions across AI-focused Discord communities, from model launches such as Claude 3.5 and Stable Diffusion 3.5 to new releases such as the Allegro video generation model and Cohere's Embed 3. Discussions range from model performance comparisons and research updates to challenges with fine-tuning and latency. Community feedback, technical debate, and collaborative projects keep these forums active.

Stripping Shows Major Size Reduction

Stripping a 300KB binary reduced it to just 80KB (roughly a 73% reduction), pointing to substantial optimization headroom. Members saw the drop as encouraging for future binary-size management.

Comptime Variables Cause Compile Errors: A user reported compile errors when using comptime var outside a @parameter scope. The discussion noted that while alias allows compile-time declarations, getting direct compile-time mutability remains complicated.

Node.js vs Mojo in BigInt Calculations: In one comparison, a BigInt workload took 40 seconds in Node.js, prompting suggestions that Mojo could handle it faster. Members pointed out that refining the arbitrary-width integer library is key to improving benchmark results.
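For readers unfamiliar with arbitrary-width integer libraries, the sketch below shows the core representation such libraries typically optimize: a number stored as a list of fixed-size "limbs" and added with carry propagation. It is written in Python purely for illustration and is not the Mojo library discussed; the 32-bit limb size and the function names are assumptions. Much of the performance work in real libraries goes into loops like add_limbs (wider limbs, vectorization) and into faster multiplication.

```python
BASE = 2**32  # each limb holds 32 bits; an assumed, common choice


def to_limbs(n: int) -> list[int]:
    """Split a non-negative int into little-endian base-2**32 limbs."""
    if n == 0:
        return [0]
    limbs = []
    while n:
        limbs.append(n % BASE)
        n //= BASE
    return limbs


def from_limbs(limbs: list[int]) -> int:
    """Reassemble little-endian limbs into a Python int (used for checking)."""
    n = 0
    for limb in reversed(limbs):
        n = n * BASE + limb
    return n


def add_limbs(a: list[int], b: list[int]) -> list[int]:
    """Schoolbook addition: add limb by limb, carrying overflow into the next limb."""
    result = []
    carry = 0
    for i in range(max(len(a), len(b))):
        s = (a[i] if i < len(a) else 0) + (b[i] if i < len(b) else 0) + carry
        result.append(s % BASE)
        carry = s // BASE
    if carry:
        result.append(carry)
    return result


x, y = 2**100 + 12345, 2**64 - 1
print(from_limbs(add_limbs(to_limbs(x), to_limbs(y))) == x + y)  # True
```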

Claude 3.5 Sonnet and OpenRouter Developments

This section covers the release of the new Claude 3.5 Sonnet on OpenRouter and the community discussion around it. Users are excited about the model's capabilities and recent improvements, while newcomers ask how to obtain and use API keys. Anthropic's new Computer Use feature drew both interest and concern about how it might be applied. Other threads discuss pricing for Claude models, upcoming releases such as Claude 3.5 Haiku, and use of the OpenRouter Playground.
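For newcomers asking how an API key is used: OpenRouter exposes an OpenAI-compatible chat completions endpoint and expects the key as a bearer token. The sketch below builds the request with the standard library but does not send it. The model slug "anthropic/claude-3.5-sonnet" is our assumption for the new Sonnet on OpenRouter, and the key shown is a placeholder.

```python
import json
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_openrouter_request(api_key: str, prompt: str,
                             model: str = "anthropic/claude-3.5-sonnet") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request for OpenRouter."""
    payload = {
        "model": model,  # assumed slug; check the OpenRouter model list
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # key from your OpenRouter account
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_openrouter_request("sk-or-PLACEHOLDER", "Summarize the new Sonnet release.")
# urllib.request.urlopen(req) would send it; omitted so the sketch runs offline.
```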

Aider (Paul Gauthier) General

- Claude 3.5 Sonnet shows significant improvements: The new Claude 3.5 Sonnet tops Aider's code editing leaderboard at 84.2% and reaches 85.7% in architect mode with DeepSeek as the editor. Many users are excited about the gains, particularly on coding tasks, and about pricing that is unchanged from the previous version.

- Using DeepSeek as an Editor Model: DeepSeek is favored for being much cheaper than Sonnet, at $0.28 per 1M output tokens versus $15 for Sonnet. Users report substantial savings with DeepSeek as the editor model and say it performs adequately when paired with Sonnet.

- Concerns about Token Costs: Discussions noted that using Sonnet as the architect with DeepSeek handling execution mainly shifts the expense onto output tokens rather than planning tokens. This sparked debate over whether the token savings justify DeepSeek's slower performance.

- Model Performance and Local Usage: Users asked how effective offline models are and whether they could assist Sonnet with tasks such as parsing or error correction. Some suggested experimenting with larger local models integrated alongside Sonnet.

- Audio Recording and Transcription: One user asked whether audio recordings for transcription are sent to a remote service or whether offline support is available, which led to discussion of offline transcription with models such as Whisper.
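The architect/editor cost trade-off above comes down to simple arithmetic on the quoted output-token prices. The sketch compares Sonnet alone (emitting only the edits) with Sonnet as architect (emitting a plan) plus DeepSeek as editor (emitting the edits). The token counts are hypothetical, and input-token charges are ignored, so treat the numbers as illustrative only.

```python
# Output-token prices in USD per 1M tokens, as quoted in the discussion.
SONNET_OUTPUT_PER_M = 15.00
DEEPSEEK_OUTPUT_PER_M = 0.28


def output_cost(tokens: int, price_per_million: float) -> float:
    """Cost in USD of `tokens` output tokens at the given per-million price."""
    return tokens / 1_000_000 * price_per_million


def compare(plan_tokens: int, edit_tokens: int) -> dict[str, float]:
    """Compare Sonnet alone vs. Sonnet architect + DeepSeek editor (output tokens only).

    Hypothetical model: Sonnet alone writes just the edits; in architect mode
    Sonnet writes the plan and DeepSeek writes the edits.
    """
    sonnet_only = output_cost(edit_tokens, SONNET_OUTPUT_PER_M)
    architect = (output_cost(plan_tokens, SONNET_OUTPUT_PER_M)
                 + output_cost(edit_tokens, DEEPSEEK_OUTPUT_PER_M))
    return {"sonnet_only": sonnet_only, "architect_plus_deepseek": architect}


print(compare(plan_tokens=2_000, edit_tokens=6_000))
```

With these made-up counts, Sonnet alone costs about $0.09 and the architect pairing about $0.032. The savings shrink as the plan grows relative to the edits, since every planning token is still billed at Sonnet's rate.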

Discussions on Unsloth AI and Troubleshooting

Users reported difficulties installing Unsloth with a conda environment script, and others suggested using WSL2 for a smoother installation. Unsloth's lack of multi-GPU support also came up, with users asking what role per_device_train_batch_size plays under that limitation. Troubleshooting threads on CUDA and library errors stressed the need for a stable CUDA setup and compatible library versions. Separately, the Nous Research AI Discord discussed catastrophic forgetting in large language models, the Claude model improvements from Anthropic, and which token-as-a-service providers support Nous models.
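On the per_device_train_batch_size question: in Hugging Face Trainer-style configurations (which Unsloth's training scripts commonly use), the number of examples per optimizer step is the per-device batch size times the gradient accumulation steps times the number of devices. With single-GPU training the device count is 1, so gradient accumulation is the usual way to raise the effective batch size. The helper below is a hypothetical illustration of that arithmetic, and the values 2 and 4 are arbitrary.

```python
def effective_batch_size(per_device_train_batch_size: int,
                         gradient_accumulation_steps: int = 1,
                         num_devices: int = 1) -> int:
    """Examples contributing to each optimizer step (hypothetical helper).

    Mirrors how Trainer-style settings combine; with no multi-GPU support,
    num_devices stays at 1.
    """
    return per_device_train_batch_size * gradient_accumulation_steps * num_devices


# Single GPU: 2 examples per forward/backward pass, gradients accumulated over 4 passes.
print(effective_batch_size(2, 4))  # 8
```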

Innovative AI Applications and User Experiences

This section surveys new AI applications and user experiences across several communities. In LM Studio, users discussed model configuration, including how system RAM is used alongside VRAM and how that affects inference performance. The section also covers new releases such as Claude 3.5 Sonnet and the Mochi 1 video generation model, CrewAI's Series A funding, the Stable Diffusion 3.5 models, and a Rust port of the Outlines library. It then turns to the Latent Space, NotebookLM, and Perplexity AI Discords, where members shared projects on podcast creation, video generation, language learning, and AI-generated content, along with feedback, challenges, and excitement about upcoming releases.
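One way system RAM affects local inference speed: when a model does not fit entirely in VRAM, local runners can keep some layers on the GPU and the rest in system RAM, and layers running from system RAM are much slower. The sketch below is a rough, hypothetical estimate of that split. It assumes equally sized layers and a flat reserve for the KV cache and overhead, which real models and runtimes do not strictly follow.

```python
import math


def layers_on_gpu(model_size_gb: float, n_layers: int, vram_gb: float,
                  reserve_gb: float = 1.0) -> int:
    """Estimate how many equally sized layers fit in VRAM (illustrative only).

    Assumes every layer has the same size and that a flat `reserve_gb`
    covers the KV cache, activations, and driver overhead.
    """
    per_layer_gb = model_size_gb / n_layers
    usable_gb = max(vram_gb - reserve_gb, 0.0)
    return min(n_layers, math.floor(usable_gb / per_layer_gb))


# Hypothetical 8 GB model with 32 layers on a 6 GB GPU.
gpu_layers = layers_on_gpu(model_size_gb=8.0, n_layers=32, vram_gb=6.0)
cpu_layers = 32 - gpu_layers  # these layers would run from system RAM
print(gpu_layers, cpu_layers)  # 20 12
```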

AI-Driven Fact-Checking Strategies and Interpretability Advancements

The section opens with a note for potential investors on AI market dynamics and the economic factors behind them. A Perplexity collection on AI-driven fact-checking strategies was shared, covering the use of LLMs, ethical considerations, source credibility, and bias detection as tools for handling misinformation. EleutherAI released a new open-source pipeline for interpreting sparse autoencoder (SAE) features, which generates feature explanations, including explanations based on the features' causal effects, and evaluates their quality. Aligning SAE features with the Hungarian algorithm showed significant interpretability gains, supporting the usefulness of SAE latents and encouraging further exploration. Resources and collaboration opportunities for SAE interpretation work are also available. Together with new image and video generation models such as Stable Diffusion 3.5 and Allegro, these interpretability advances reflect continued progress across the field.
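The summary does not say exactly which features EleutherAI aligned, so the sketch below only illustrates the general idea under an assumption: matching the latents of two SAEs one-to-one by the cosine similarity of their decoder directions. It uses SciPy's linear_sum_assignment, which solves the same linear assignment problem as the Hungarian algorithm. This is not EleutherAI's pipeline; the shapes and the synthetic "shuffled copy" test are hypothetical.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def match_latents(dec_a: np.ndarray, dec_b: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """One-to-one match of SAE latents by decoder-direction cosine similarity.

    dec_a, dec_b: shape (n_latents, d_model), one decoder direction per latent.
    Returns (col_ind, sims): latent i of A is matched to latent col_ind[i] of B.
    """
    a = dec_a / np.linalg.norm(dec_a, axis=1, keepdims=True)
    b = dec_b / np.linalg.norm(dec_b, axis=1, keepdims=True)
    sim = a @ b.T  # (n_latents, n_latents) cosine similarities
    # Solve the assignment problem, maximizing total similarity.
    row_ind, col_ind = linear_sum_assignment(sim, maximize=True)
    return col_ind, sim[row_ind, col_ind]


rng = np.random.default_rng(0)
dec_a = rng.normal(size=(8, 16))
perm = rng.permutation(8)
dec_b = dec_a[perm] + 0.01 * rng.normal(size=(8, 16))  # shuffled, slightly noisy copy
col_ind, sims = match_latents(dec_a, dec_b)
```

Because dec_b is a shuffled copy of dec_a, the matching should recover the inverse of the shuffle, with matched similarities close to 1.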

Project Popcorn: LLM for Efficient Kernels

A member shared plans to build an LLM that generates efficient GPU kernels by December 2024, targeting NeurIPS. The initiative aims to explain how GPUs work to both humans and LLMs, use large-scale sampling, and collect a global dataset of kernels. A Kernel Dataset Competition is also planned to build a data flywheel of new tokens, run transparently on Discord. Members also discussed simple prompt engineering and exploring HidetScript as a DSL for writing kernel programs.
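"Large-scale sampling" is not spelled out here. One common reading, assumed for this sketch, is best-of-N generation: sample many candidate kernels, discard any that fail a correctness check against a reference, and keep the fastest survivor. Plain Python functions stand in for generated GPU kernels, and all names are hypothetical. A real pipeline would compile and time candidates on the device.

```python
import time
from typing import Callable, Optional, Sequence

Kernel = Callable[[list], list]


def is_correct(candidate: Kernel, reference: Kernel,
               test_inputs: Sequence[list], tol: float = 1e-6) -> bool:
    """Check a candidate against the reference on every test input."""
    for xs in test_inputs:
        got, want = candidate(xs), reference(xs)
        if len(got) != len(want) or any(abs(g - w) > tol for g, w in zip(got, want)):
            return False
    return True


def best_of_n(candidates: Sequence[Kernel], reference: Kernel,
              test_inputs: Sequence[list], repeats: int = 5) -> Optional[Kernel]:
    """Keep only candidates that pass verification, then return the fastest."""
    best, best_time = None, float("inf")
    for cand in candidates:
        try:
            ok = is_correct(cand, reference, test_inputs)
        except Exception:
            ok = False  # a sample that crashes is discarded, not fatal
        if not ok:
            continue
        start = time.perf_counter()
        for _ in range(repeats):
            for xs in test_inputs:
                cand(xs)
        elapsed = time.perf_counter() - start
        if elapsed < best_time:
            best, best_time = cand, elapsed
    return best


# Hypothetical stand-ins for sampled kernels: each should square its input.
def reference(xs):
    return [x * x for x in xs]


def sample_wrong(xs):
    return [x + x for x in xs]  # fails verification (1 + 1 != 1 * 1)


def sample_ok(xs):
    return [x ** 2 for x in xs]  # passes verification


def sample_crash(xs):
    raise RuntimeError("bad sample")  # fails verification


inputs = [[float(i) for i in range(100)]]
winner = best_of_n([sample_wrong, sample_ok, sample_crash], reference, inputs)
print(winner.__name__)  # sample_ok
```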

OpenAI and Cohere Discussions

This section covers conversations in the OpenAI and Cohere Discord channels. In OpenAI's #ai-discussions, topics range from the feasibility of AGI to problems with quantum computing simulators and ChatGPT's difficulty recognizing details of TV shows. Cohere's channels cover announcements such as the release of Multimodal Embed 3 and tips on using the Embed API with images. Discussions in the Modular (Mojo 🔥) and tinygrad (George Hotz) Discords include ideas for improving performance and functionality in programming languages and models.
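As a rough illustration of sending an image to an embedding API, the sketch below encodes image bytes as a base64 data URI and builds a request body. The field names and values ("input_type": "image", "images", "embedding_types", the model name) follow our reading of Cohere's v1 embed reference around the Embed 3 launch and are assumptions to verify against the current docs; nothing is sent over the network, and the PNG bytes are a placeholder.

```python
import base64
import json


def image_data_uri(image_bytes: bytes, mime_type: str = "image/png") -> str:
    """Encode raw image bytes as a base64 data URI."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def build_image_embed_body(image_bytes: bytes, model: str = "embed-english-v3.0") -> dict:
    """Build a JSON body for an image embedding request (assumed field names)."""
    return {
        "model": model,
        "input_type": "image",
        "embedding_types": ["float"],
        "images": [image_data_uri(image_bytes)],
    }


body = build_image_embed_body(b"\x89PNG\r\n\x1a\n")  # PNG signature only, placeholder bytes
print(json.dumps(body)[:80])
```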

Exploring Various Topics in Tech Communities

This section covers a range of topics from tech communities. Users discussed tensor indexing techniques such as the .where() function, Python compatibility with MuJoCo, ways to inspect the intermediate representation, and building custom compiler backends. Others shared AI model news, including Hume AI's voice assistant, the Claude 3.5 Sonnet release, and Open Interpreter's Claude integration, alongside the Screenpipe tool and open-source monetization models. The LlamaIndex section highlights the VividNode app, building serverless RAG apps, knowledge management for RFPs, and the Llama Impact Hackathon. In the LLM Agents hackathon announcements, sponsors such as OpenAI and Google AI are acknowledged for their support. The DSPy discussion covers new version releases, the upgrade process, and how the current system works in general.
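To illustrate the .where() indexing pattern, the sketch uses NumPy's np.where(cond, x, y) as a stand-in for tinygrad's cond.where(x, y), which follows the same condition/then/else form; the arrays are arbitrary. A key difference from boolean-mask indexing is that where() keeps the output shape fixed, and combined with a one-hot comparison it can express a per-row gather without fancy indexing.

```python
import numpy as np

x = np.array([[1.0, -2.0, 3.0],
              [-4.0, 5.0, -6.0]])

# Boolean-mask indexing returns a 1-D array whose length depends on the data.
positives_only = x[x > 0]            # shape (3,)

# where() keeps the input shape and fills masked-out slots with a default.
relu_like = np.where(x > 0, x, 0.0)  # shape (2, 3)

# A per-row "gather by index" expressed with a one-hot mask plus where().
idx = np.array([2, 0])                           # column to pick in each row
one_hot = np.arange(x.shape[1]) == idx[:, None]  # shape (2, 3), boolean
picked = np.where(one_hot, x, 0.0).sum(axis=1)   # [3.0, -4.0]
```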

LangChain AI & OpenAccess AI Collective (axolotl) Discussions

This section covers discussions from the LangChain AI and OpenAccess AI Collective (axolotl) channels. Topics range from agent orchestration and PyTorch core issues to concerns about nightly builds and Flash Attention warnings. Members also shared experiences with improving NumPy documentation, moving into consulting roles, and exploring self-attention mechanisms in NLP. Announcements from Mozilla on AI access challenges and competition in the AI industry are also highlighted, examining who controls AI development and what changes a fair ecosystem would require.
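For readers exploring self-attention, here is a minimal single-head sketch of the standard scaled dot-product formulation, softmax(QK^T / sqrt(d_k)) V, in NumPy. It omits masking, multiple heads, and output projections, and the random weights are placeholders rather than trained parameters.

```python
import numpy as np


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x: (seq_len, d_model); w_q, w_k: (d_model, d_k); w_v: (d_model, d_v).
    Returns (output, weights) with shapes (seq_len, d_v) and (seq_len, seq_len).
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])  # scale by sqrt(d_k)
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ v, weights


rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))  # 4 tokens, model width 8
out, attn = self_attention(x, rng.normal(size=(8, 8)),
                           rng.normal(size=(8, 8)), rng.normal(size=(8, 8)))
```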

FAQ

Q: What are the new AI models introduced by Anthropic, specifically in the Claude series?

A: Anthropic announced an upgraded Claude 3.5 Sonnet and a new Claude 3.5 Haiku, both of which show significant improvements on coding tasks.

Q: What is the significance of the Computer Use API mentioned in the article?

A: Anthropic's Computer Use API lets Claude operate a computer through on-screen actions, and the article points to OSWorld as the relevant benchmark for this kind of screen manipulation.

Q: How did the Claude 3.5 Sonnet model perform in various tasks?

A: The Claude 3.5 Sonnet model topped Aider's code editing leaderboard at 84.2% and reached 85.7% in architect mode with DeepSeek as the editor, showcasing its advances in coding tasks.

Q: What are some of the discussions around the pricing and performance of the DeepSeek model compared to Sonnet?

A: Users have discussed the cost benefits of using DeepSeek as an editor model, since its output tokens cost $0.28 per 1M versus $15 for Sonnet, which sparked debate over whether the savings justify DeepSeek's slower performance.

Q: What are the user inquiries regarding offline models and their potential integration with Sonnet?

A: Users have inquired about the effectiveness of offline models in assisting Sonnet and potentially enhancing capabilities such as parsing and error correction.

Q: What challenges and discussions have been highlighted in the tech community regarding token costs and model performance?

A: The discussions center on the trade-off between token costs and performance when Claude is paired with a cheaper editor model such as DeepSeek.

Q: What installation difficulties have users in the community reported?

A: Users reported difficulties installing the Unsloth fine-tuning library with a conda environment script, leading to suggestions to use WSL2 for a smoother installation.

Q: What are the innovative applications of AI technology discussed in the article?

A: The article covers various innovative applications of AI technology, including new video generation models, funding announcements such as CrewAI's Series A, and projects shared within AI communities.

Q: What topics are covered in the discussions within tech communities related to AI development?

A: Discussions within tech communities cover topics such as tensor indexing techniques, Python compatibility, advancements in AI models like Claude 3.5 Sonnet, and open-source monetization models, providing valuable insights.

Q: What are some of the key highlights in the AI market dynamics discussed in the article?

A: The article highlights the surge in AI-related market activity, discussions of AI-driven fact-checking strategies using LLMs, and advances in interpretability tooling for sparse autoencoders (SAEs) and LLMs.
